Work Collection

Blending aesthetics and technology

to create immersive experiences.

Project Type: Artwork

Category: Art

Client: Transsion Holdings

Year: 2024

Location: Transsion Headquarters Building

Timescape

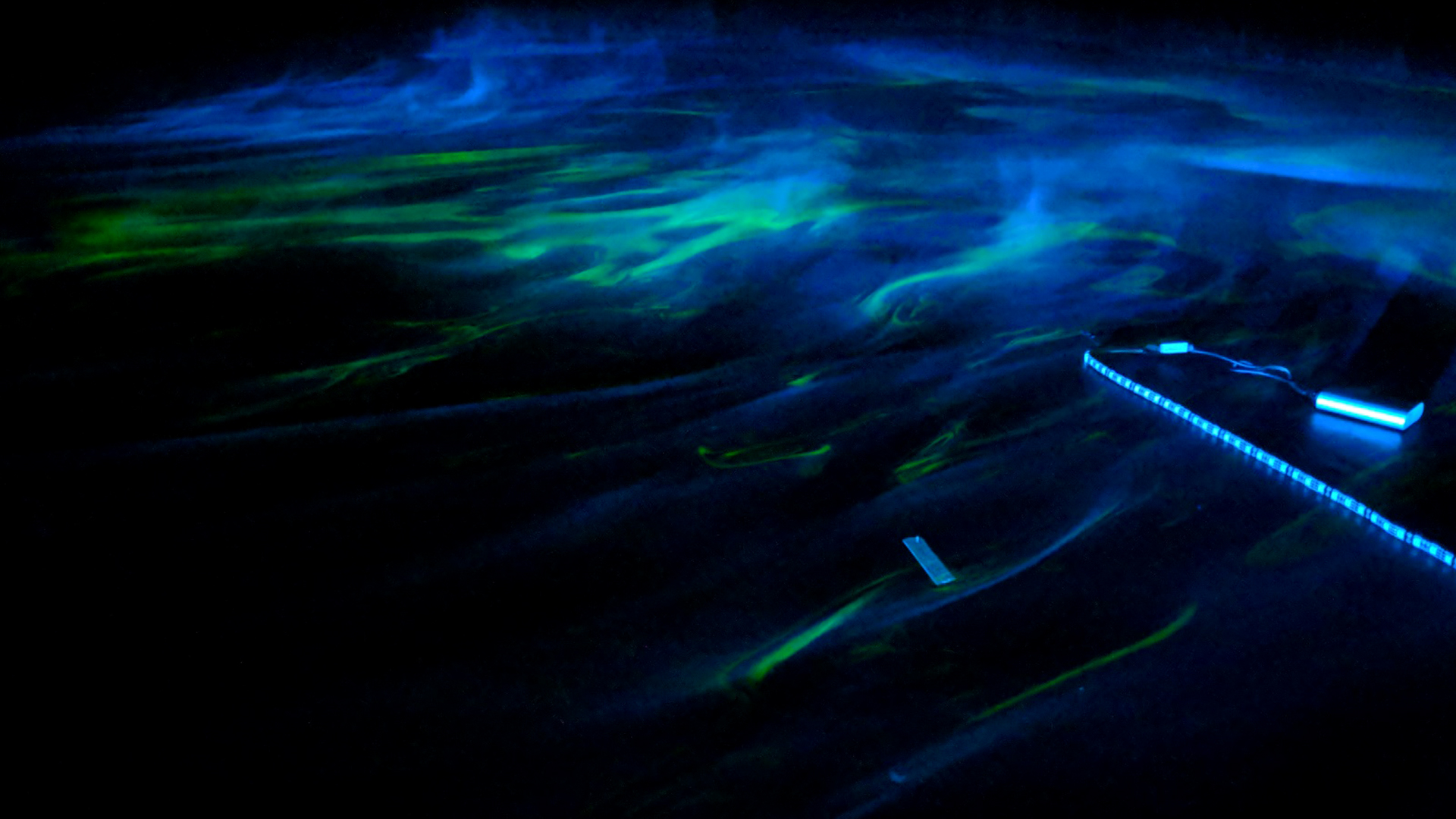

Homo sapiens emerged three hundred thousand years ago, and today they say we have entered a geological chapter called the Anthropocene. We hope that human actions, technological advancements, and natural processes can intertwine peacefully and intricately. Whether viewed with the perspective of millennia or a fraction of a second, we can see beautiful landscapes.

This is a poem written in digital form—

Temperature and latitude are the rhetoric; artificial intelligence shapes the landscape, becoming the meter. Poetic works located in the north and south, with micro and macro perspectives, tell day after day, forming a long, continuous poem about the new nature.

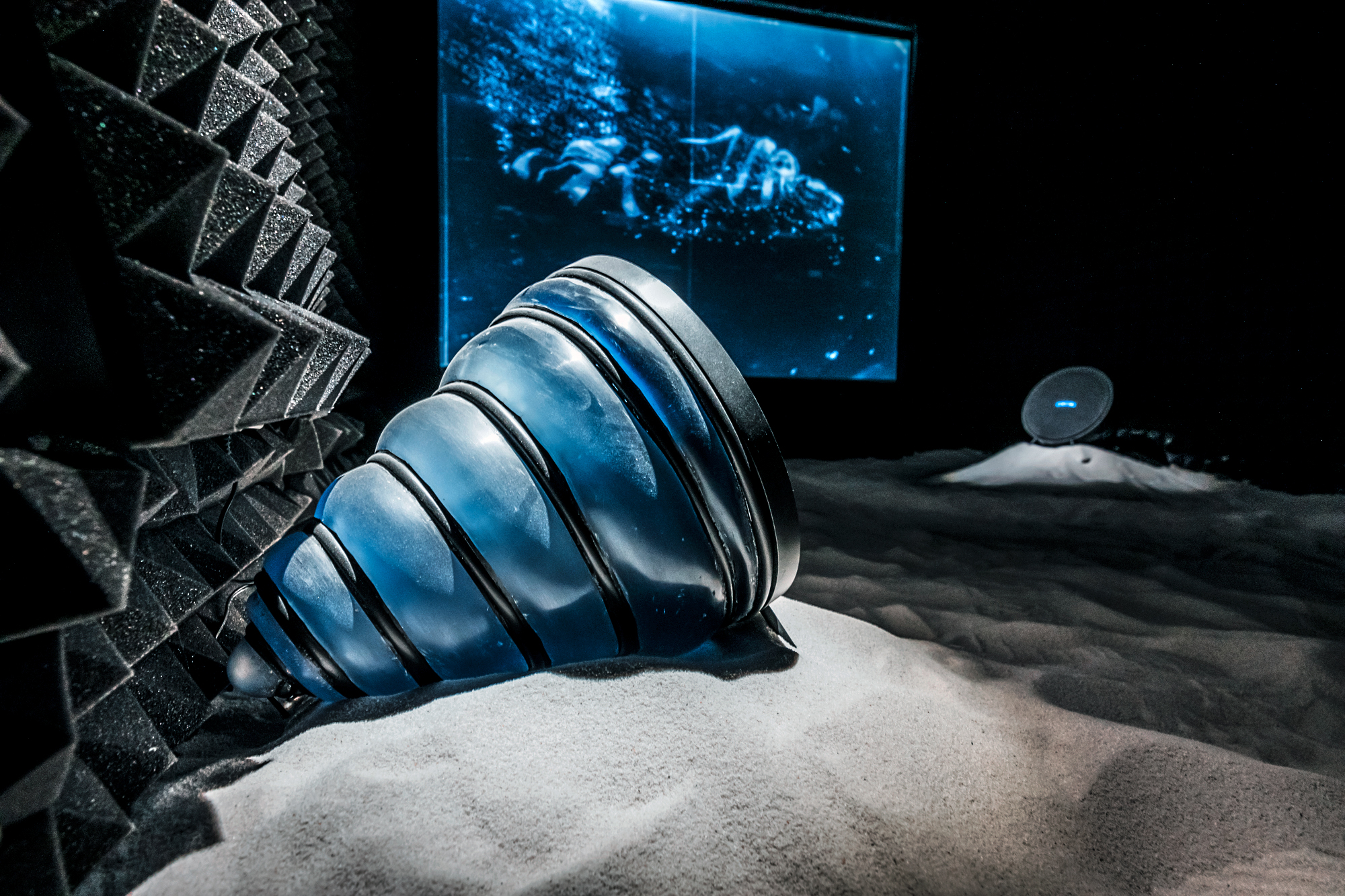

“Timescape” is an artwork commissioned by Transsion Holdings, a consumer electronics company focused on environmental protection and sustainable development whose primary consumer base is on the African continent. With the completion of its new headquarters building, Transsion commissioned our team to create an installation art piece at its entrance.

We wanted the artwork to convey the feeling of time in the form of a landscape, drawing on the natural scenery and seasonal changes of the African continent and weaving the passage of time into them. We therefore named the piece "Natural Time Poem" and divided it into two parts, placed at the south and north entrances.

Through these two interrelated pieces at the south and north entrances, we hope that viewers can easily experience the beauty of technology and imagine what "nature" might look like in this era. This is a natural time poem for everyone, telling the story of the integration between humans and nature, technology and art.

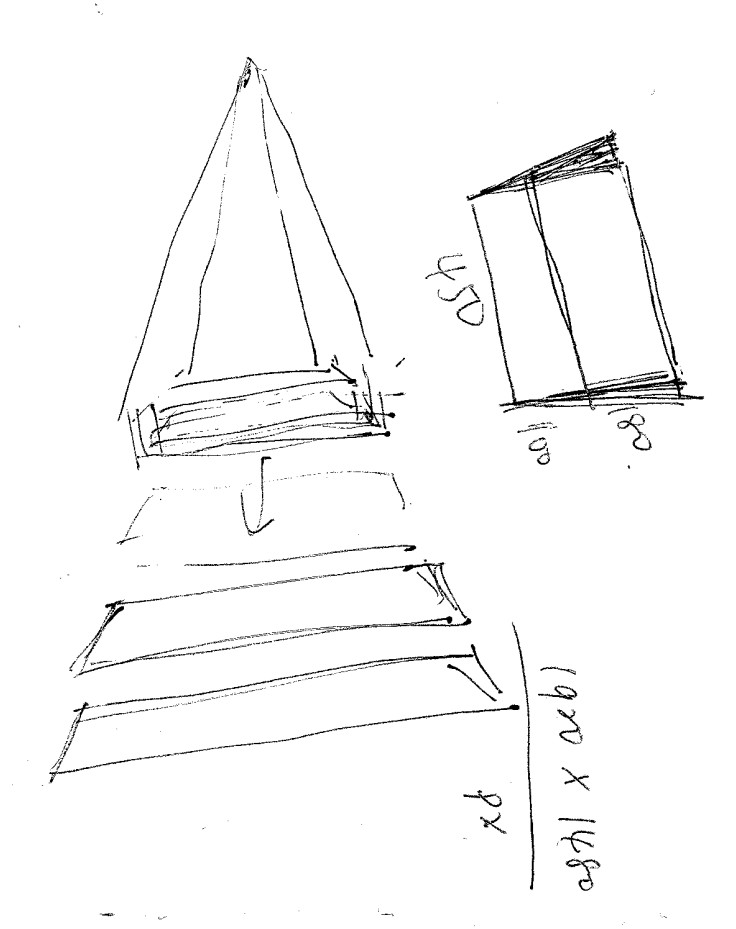

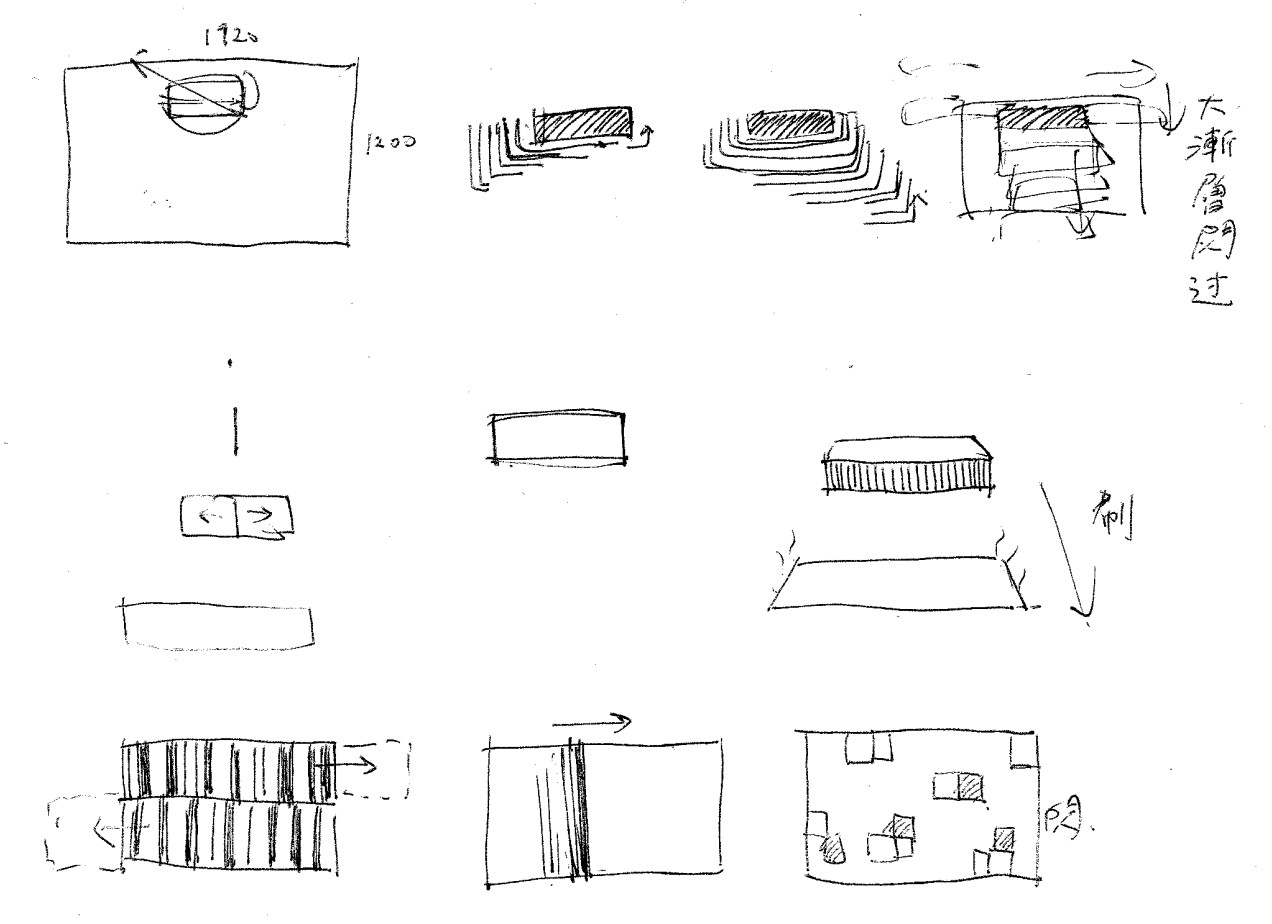

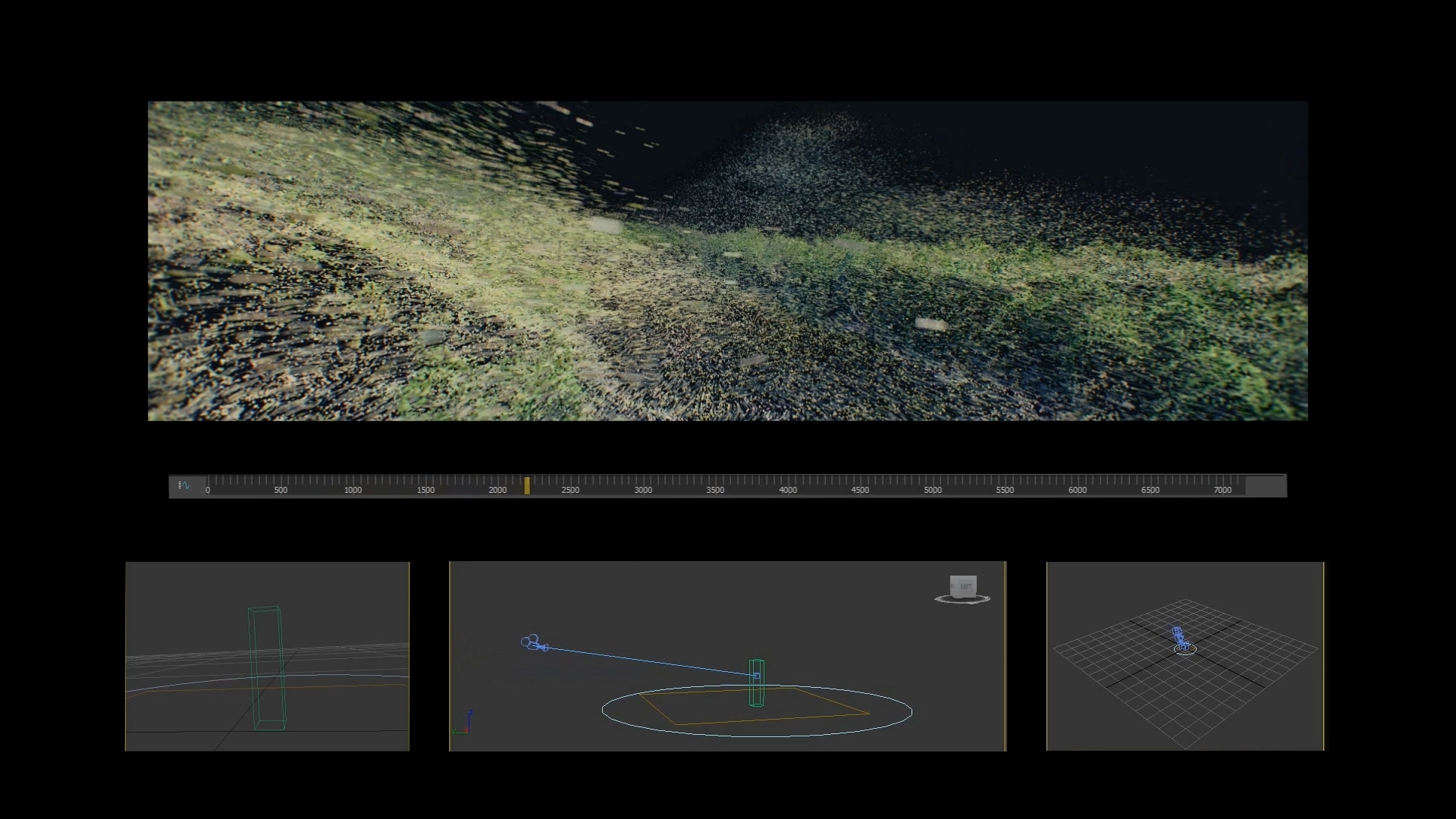

Work Development

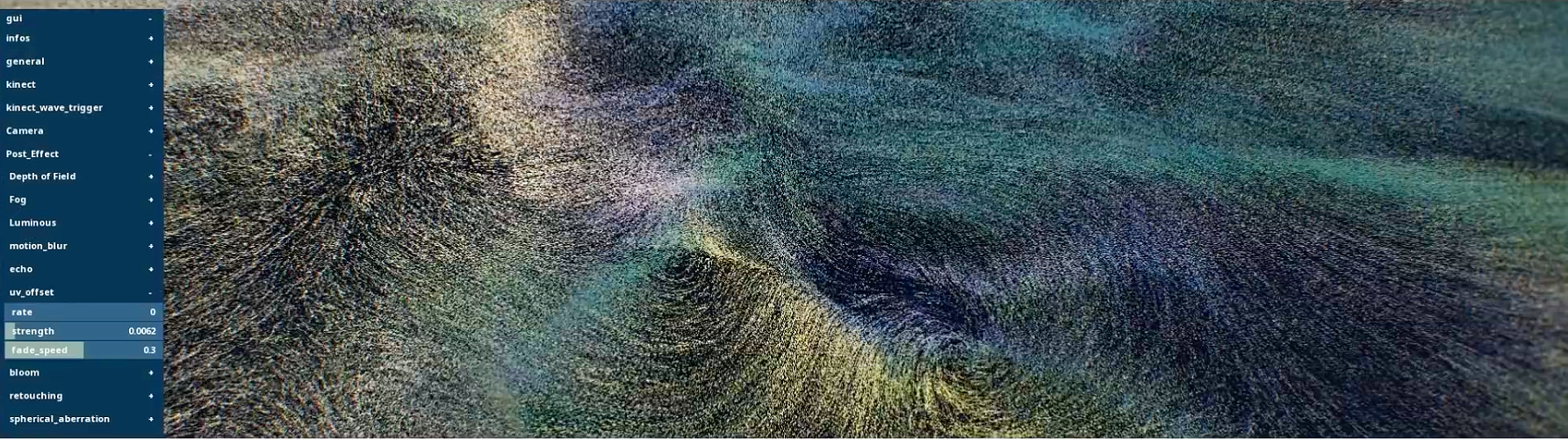

Verse I: North Hall

Spring / Summer

The first chapter of this poem presents a macroscopic perspective. Here, we have created a large digital art piece that showcases the seasonal climate and forest landscapes in real time. Using detection technology, it interacts with people entering the building. Depending on the local time and season, the artwork sometimes displays a vibrant landscape, sometimes a desolate scene, sometimes flowing water, and sometimes a gentle breeze. Tropical rainforests and savannas also appear, depicting the diverse landscapes of the African continent throughout the year.
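As a rough illustration of how a display could choose its scene from the local time and season, here is a minimal Python sketch. It is not the installation's actual code: the scene labels, the `pick_scene` function, and the simple brightness curve are all assumptions made for this example.

```python
from datetime import datetime

# Hypothetical scene labels borrowed from the moods named in the text.
SCENES = {
    "spring": "vibrant landscape",
    "summer": "flowing water",
    "fall": "gentle breeze",
    "winter": "desolate scene",
}


def season_for(month: int, southern_hemisphere: bool = False) -> str:
    """Map a calendar month (1-12) to a meteorological season."""
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    seasons = ["winter", "spring", "summer", "fall"]
    index = (month % 12) // 3  # Dec-Feb -> 0, Mar-May -> 1, Jun-Aug -> 2, Sep-Nov -> 3
    if southern_hemisphere:
        index = (index + 2) % 4  # seasons are shifted by half a year
    return seasons[index]


def daylight_level(hour: int) -> float:
    """Crude brightness in [0, 1]: 0 at midnight, 1 at noon."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return 1.0 - abs(hour - 12) / 12.0


def pick_scene(now: datetime, visitor_detected: bool,
               southern_hemisphere: bool = False) -> dict:
    """Choose what the display shows for the current moment."""
    season = season_for(now.month, southern_hemisphere)
    return {
        "season": season,
        "scene": SCENES[season],
        "brightness": daylight_level(now.hour),
        "react_to_visitor": visitor_detected,
    }


state = pick_scene(datetime(2024, 4, 10, 18, 0), visitor_detected=True)
print(state["season"], state["scene"], state["brightness"])
```

In a real piece the discrete labels would more likely drive continuous parameters of a generative landscape, but the lookup shows the basic dependency on clock and calendar.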

Fall / Winter

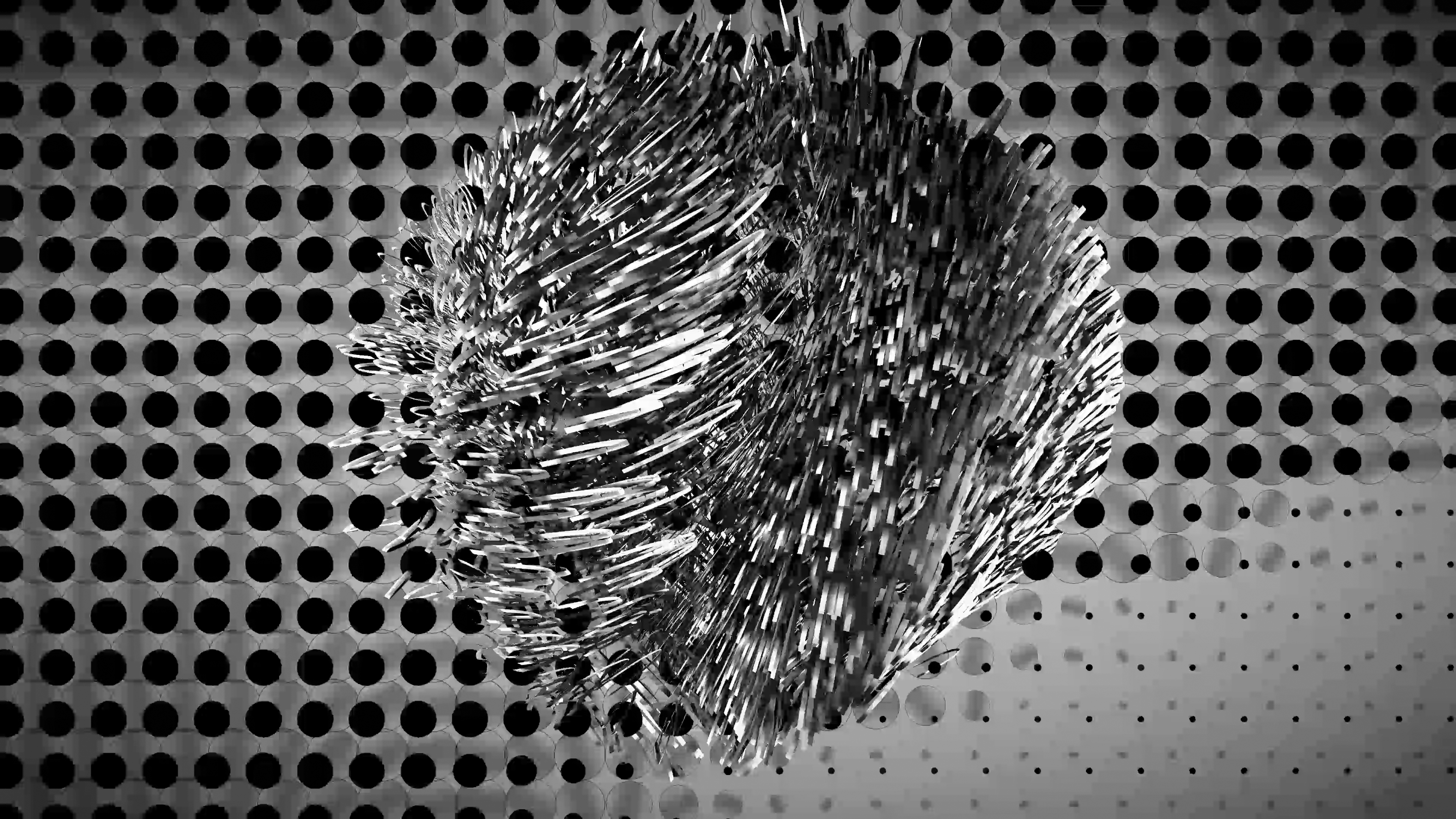

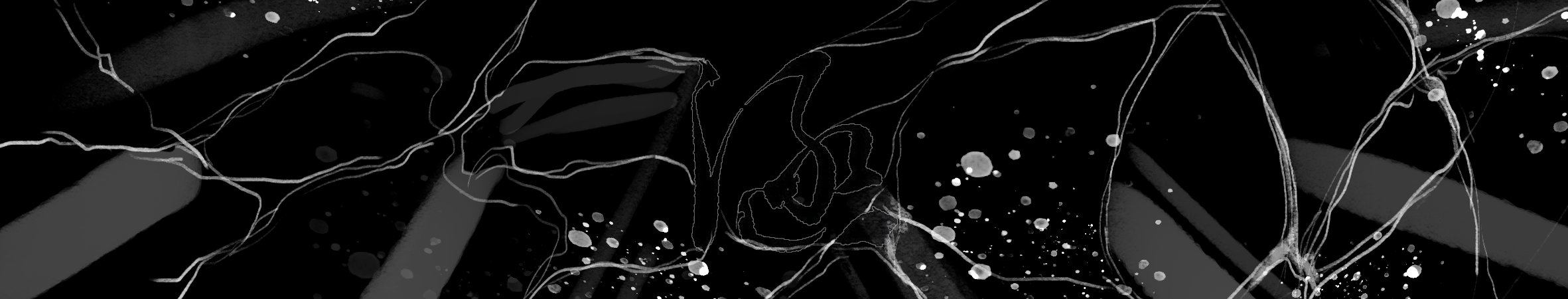

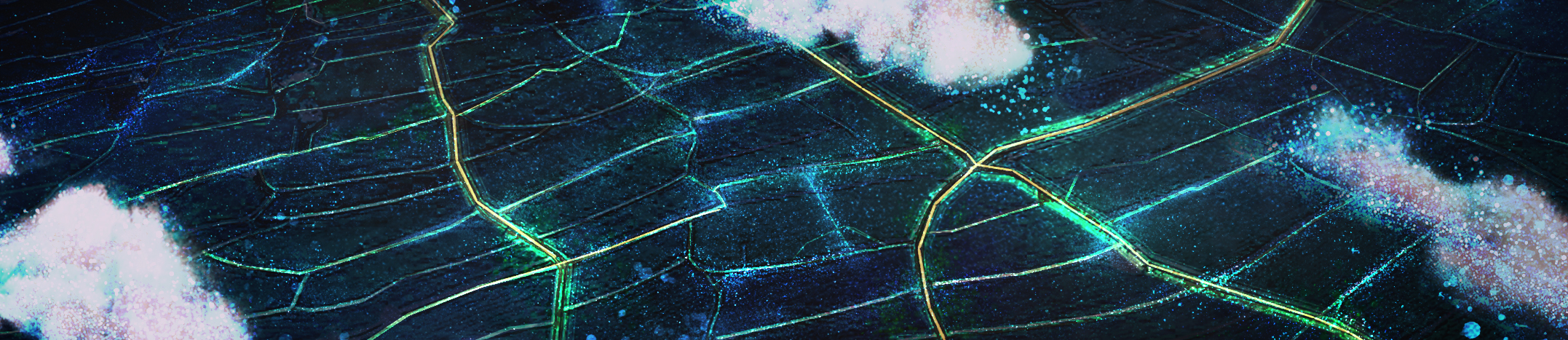

Verse II: South Hall

The second chapter of this poem represents the microscopic view. We designed a digital window that extracts a portion of the northern entrance artwork as its color palette. People can use an interface to draw different colors and textures on a tablet, which will instantly affect the display on the window. It simulates the relationship between humans and the grasses, branches, and dust, observing, exploring, and touching with the innocence of a child. Therefore, this is an interactive digital art canvas, where people can ultimately save their creations and consider them as their own "Timescape."
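One way to picture "extracting a portion of the artwork as a color palette" is to crop a region of a frame and keep its most common colors. The Python sketch below does this with coarse color bucketing; the frame layout (rows of RGB tuples), the function names, and the bucket size are assumptions for illustration, not a description of the actual system.

```python
from collections import defaultdict

Pixel = tuple[int, int, int]


def crop(frame: list[list[Pixel]], left: int, top: int,
         width: int, height: int) -> list[list[Pixel]]:
    """Cut a rectangular region out of a frame stored as rows of RGB pixels."""
    return [row[left:left + width] for row in frame[top:top + height]]


def extract_palette(region: list[list[Pixel]], size: int = 4,
                    levels: int = 4) -> list[Pixel]:
    """Return the `size` most common colors, grouping similar pixels together."""
    step = 256 // levels  # width of one bucket per channel
    buckets: dict[Pixel, list[Pixel]] = defaultdict(list)
    for row in region:
        for r, g, b in row:
            buckets[(r // step, g // step, b // step)].append((r, g, b))
    # Largest buckets first; ties are broken by bucket key so results are stable.
    ranked = sorted(buckets.items(), key=lambda item: (-len(item[1]), item[0]))
    palette = []
    for _, pixels in ranked[:size]:
        count = len(pixels)
        palette.append(tuple(sum(p[c] for p in pixels) // count for c in range(3)))
    return palette


GREEN, SAND, SKY = (30, 160, 60), (210, 180, 120), (90, 150, 230)
frame = [
    [GREEN, GREEN, GREEN, SAND],
    [GREEN, GREEN, SAND, SKY],
]
palette = extract_palette(crop(frame, 0, 0, 4, 2), size=2)
print(palette)
```

The tablet interface could then offer these colors as brushes, so that whatever visitors draw stays visually tied to the north entrance piece.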

Timescape

Client: Shenzhen Transsion Holdings Co., Ltd.

Artwork by ULTRACOMBOS

Production Team: Ting-An Ho, KeJyun Wu, Nate Wu, TingKai Chen, Tim Chen, XiaoRu Kuo, Jay Tseng, Herry Chang, Hoba Yang, Reng Tsai

Photography: YHLAA

Filming: BOLE studio

Year: 2024

Related Works:

Project Type: Communication, Entertainment

Category: Event, Exhibition

Year: 2022

Location: Kaohsiung Pier-2 Art Center

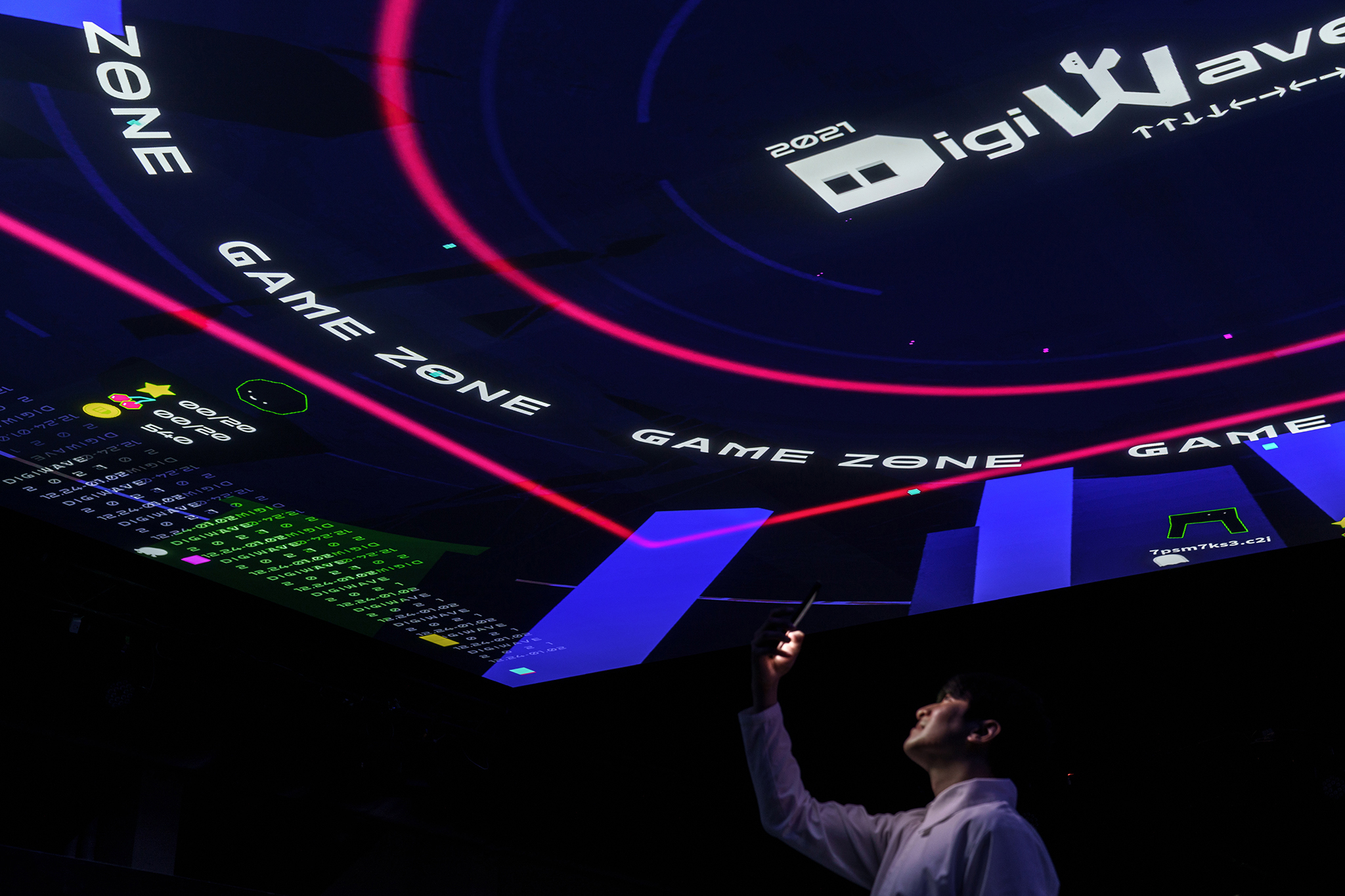

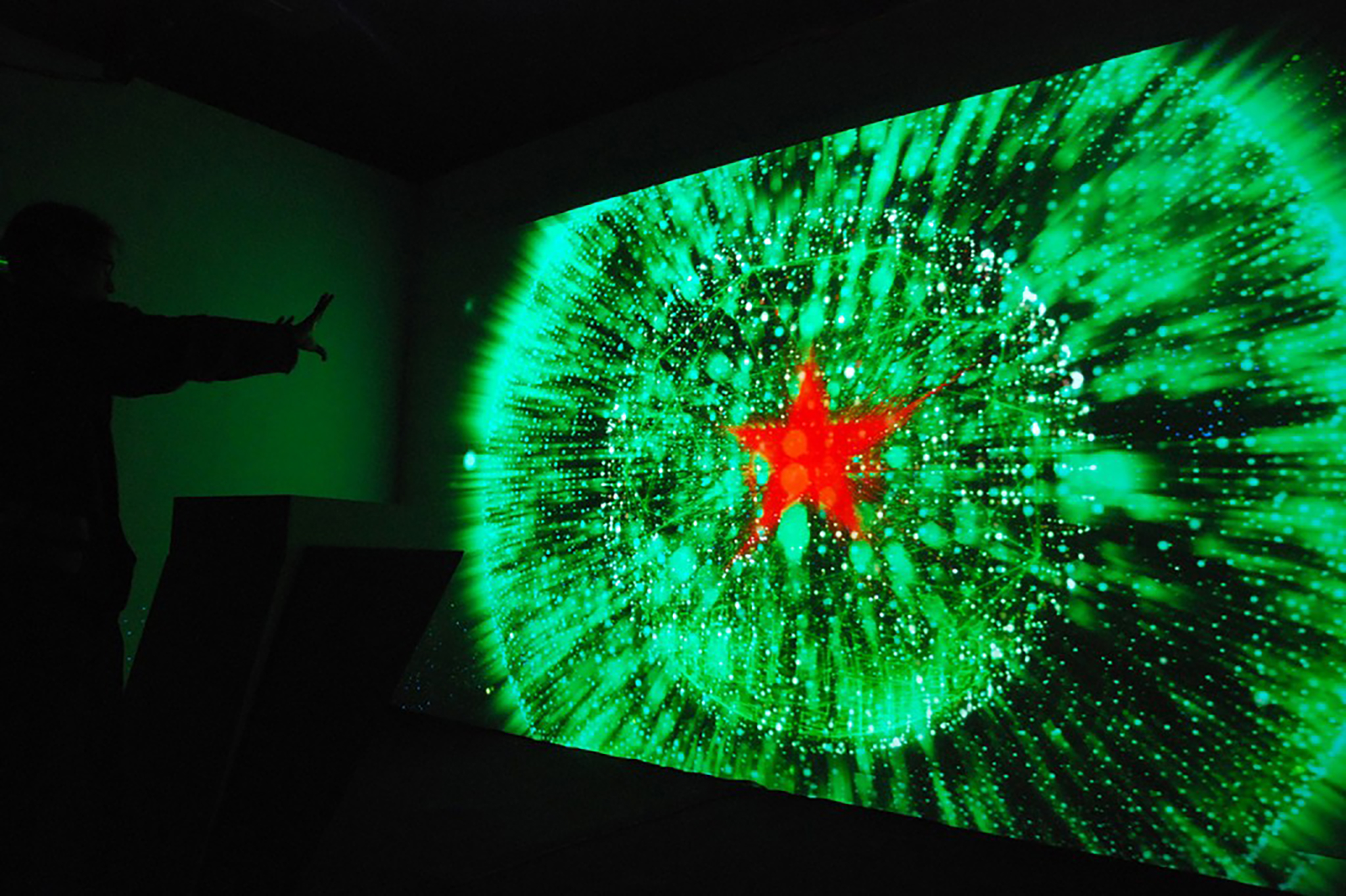

2021 DigiWave ↑↑↓↓←→←→ⒷⒶ

Digi is the means, while Wave is the trend of thought, flow, and also communication. We hope to raise a big question on a major issue in life each year at DigiWave. Using technology to communicate, and presenting in the forms of exhibitions and events, will bring about the next wave that will push our lives forward.

Those Virtual Worlds We Can’t Wait to Enter

In 1986, while porting the game “Gradius,” Kazuhisa Hashimoto, a programmer at the video game company KONAMI, added a secret command, "↑↑↓↓←→←→ⒷⒶ," to the game. Whether he found the game too difficult or simply thought his own skills were too weak is uncertain. The command was carried further in another game, "Contra," and became the Konami Command that many people are familiar with.

We once used only six buttons, "↑," "↓," "←," "→," "Ⓑ," and "Ⓐ," to let our consciousness roam through various electronic worlds inside a rectangular frame. This rectangular frame is everywhere: in the living room, on the desktop, by the side of the street, or even in your pocket. Over the past few decades, it has evolved from entertainment for a minority into a phenomenon-level culture, becoming the common language and memory of modern times.

Sometimes it’s one person, sometimes it’s a group of people, sometimes it’s with strangers.

It's laughter, surprise, frustration, emotion, rage, achievement, reluctance, or enlightenment.

Every time we enter and leave, what do we leave behind? What do we take away?

And why are we always so thrilled and unable to wait?

—— Main Discourse

As a continuation of 2020 DigiWave, the team was honored to be invited again to curate the exhibition in 2021. DigiWave is a technology-oriented celebration. This year we chose “video games” as the proposition and approached it from the perspectives of carriers, players, and game designers. We hope that, with our guidance, visitors can see the relationship between video games and us.

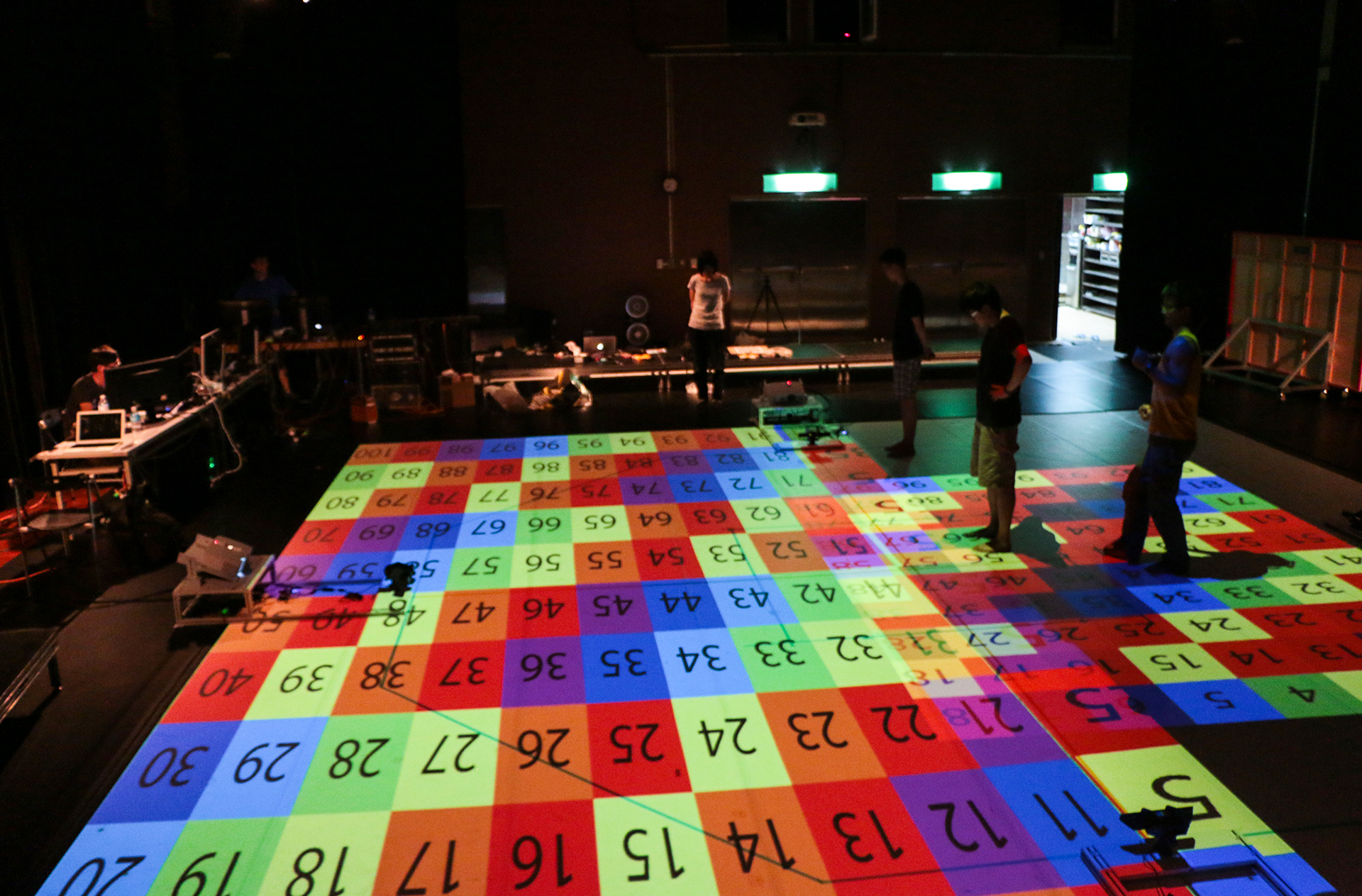

Experiencing Video Games in Video Games

"Actual experience is the best way to understand something." Because our theme is video games, in addition to the usual exhibitions and activities, we set out to create an exhibition experience that feels very much like a video game. We designed an experience system that scatters the mechanics of video games across the spectrum between real and virtual, extending from the exhibition content to the exploration of urban areas and expanding the scale from a single warehouse to the entire Yancheng District. Visitors become players, and the exhibition area becomes a series of scenes in the game. By actually being inside the game and experiencing it, we can reflect on the philosophy of video games, players, and game designers, and even on the relationship between real and virtual, transforming the exhibition into a scene of shared feeling where relationships and behaviors are constantly flowing.

Games have always existed in human civilization and are, by nature, a form of entertainment.

Digital technologies, such as transistors and electronic calculators, were produced as technological innovations after World War II,

which were then applied to games and gave birth to "video games."

This has caused a dramatic and far-reaching effect on today's culture.

Recall the memory of the first time we played video games,

which carrier did we use to “START” and which video game world did we “SELECT” to enter?

Why did we “START” the game? And why did we “SELECT” that game?

—— Area Discourse

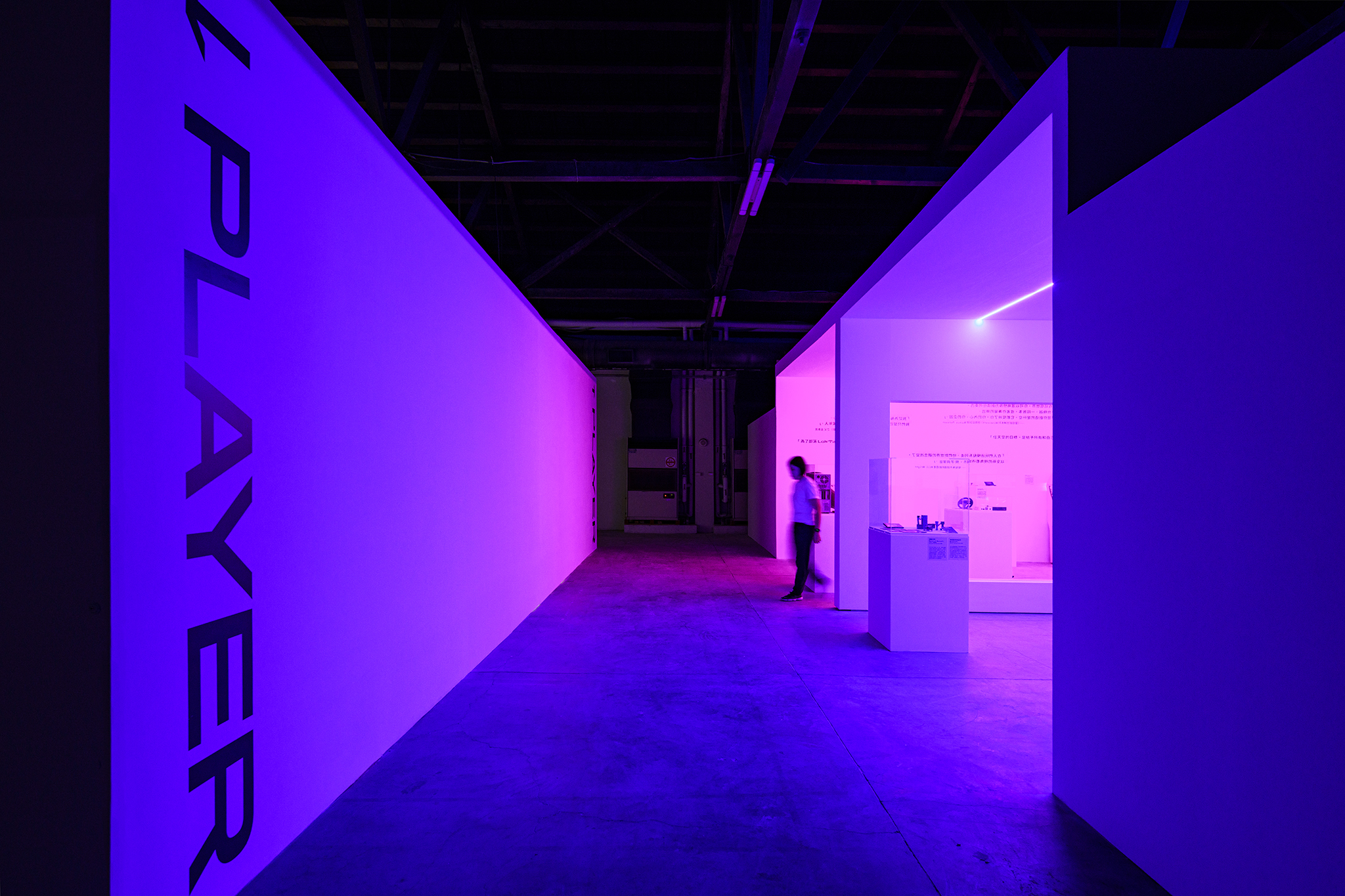

START / SELECT

In tribute to the two buttons at the center of the FAMICOM (Family Computer) controller, we chose “SELECT” as the “START” of the exhibition. The first things to catch the eye are two doors that serve as the entrances, immediately giving visitors the right to select. This right is an important and unique core of video games: visitors no longer watch passively but are actively involved.

Behind the doors are two spaces that mirror each other. On one side, ten classic game consoles from video game history are displayed; on the mirrored side are the ten most famous games corresponding to those consoles. As a prelude, we cut to the chase with the question “Which game console did we ‘START’ with, and which game did we ‘SELECT’?”, which triggers memories and discussions about video game experiences.

Echoing the key visual, we used red and blue, colors that often appear in video games and in discussions of the virtual and the real, as the main colors of the two spaces. Objects are laid out chronologically along the visitor route, supplemented by famous quotes and their mirror images scattered on the walls. We integrated video game-related memories, the sense of the times, symbols, allusions, and anecdotes into a mirrored space filled with metaphors (the red and blue pills in The Matrix and the revolving-door spaces in Tenet).

What type of game do we love to play?

In which games did we get some fun and deep memories?

What type of player are you?

—— Area Discourse

PLAYER

Continuing from the video game consoles and games of the previous area, the focus shifts from the object to the player. Richard Bartle, co-creator of the world’s first MUD (Multi-User Dungeon), observed and studied player behavior in games and divided players into four types:

Killers

Achievers

Socializers

Explorers

Eight well-known figures from different professions were invited. We first classified them by player type and then conducted a series of interviews. Their relationships with video games are presented on large backlit films lined up on both sides to form a corridor. Walking past them helps us reflect on our own relationship with video games, as well as on our other self after entering the virtual world.

The 1990s are praised as the golden decade of domestic games. During that period, game companies such as Softstar Entertainment and Soft-World International were established and launched many classic games, including “The Legend of the Sword and Fairy,” “Richman,” and “Heroes of Jin Yong,” which are still talked about today.

As the market and industry shifted in later years, Taiwan’s domestic games lost much of the influence they once had. In recent years, with the rise of mobile games and the Steam platform, more and more independent development teams, in addition to game companies, have taken up game development and have launched a succession of excellent and diverse games, giving the game industry a new outlook.

Actually purchasing and playing these excellent games is the most substantial support you can give to the game developers.

What is the next domestic game you plan to play?

—— Area Discourse

MADE IN TAIWAN

We selected eight recently released domestic games, ranging from student works, independent productions, to commercial masterpieces. These are all unique and extraordinary choices. Through the clips of game play, visitors can understand the uniqueness of these games and feel the abundant creative energy of the Taiwanese game developers.

CHATROOM

As its name suggests, this is an area for exchanging ideas. We planned a series of film screenings, lectures, and talks, and invited esports players, game designers, YouTubers, and podcasters to lead visitors on a more in-depth and speculative video game journey from different perspectives.

When the video game starts, the consciousness traverses into the virtual world.

Time passes slowly and reality changes without realization.

And the creators of this world - game designers -

take on the lingering of players as their own responsibility, pondering deeply on the philosophy between aesthetics, mechanism, and text.

With the development of technology and the evolution of society, and through continuous iterations and changes, new worlds have appeared.

We are caught up in how these worlds are made.

What types of designs make people yearn and become fascinated?

Why are we always so thrilled and unable to wait?

—— Area Discourse

GAME ON

At the other end of the passage, there is a doorway where light shines through, becoming a tangent plane of the exhibition space and thoughts. And behind the door is the world inside video games. Pushing open the door in the dark, we are greeted by a bright and white corridor called Loading. The contrast in extreme brightness brings about a perceptual impact of instant pupil contraction. This is used as the opening ceremony of entering into the virtual world.

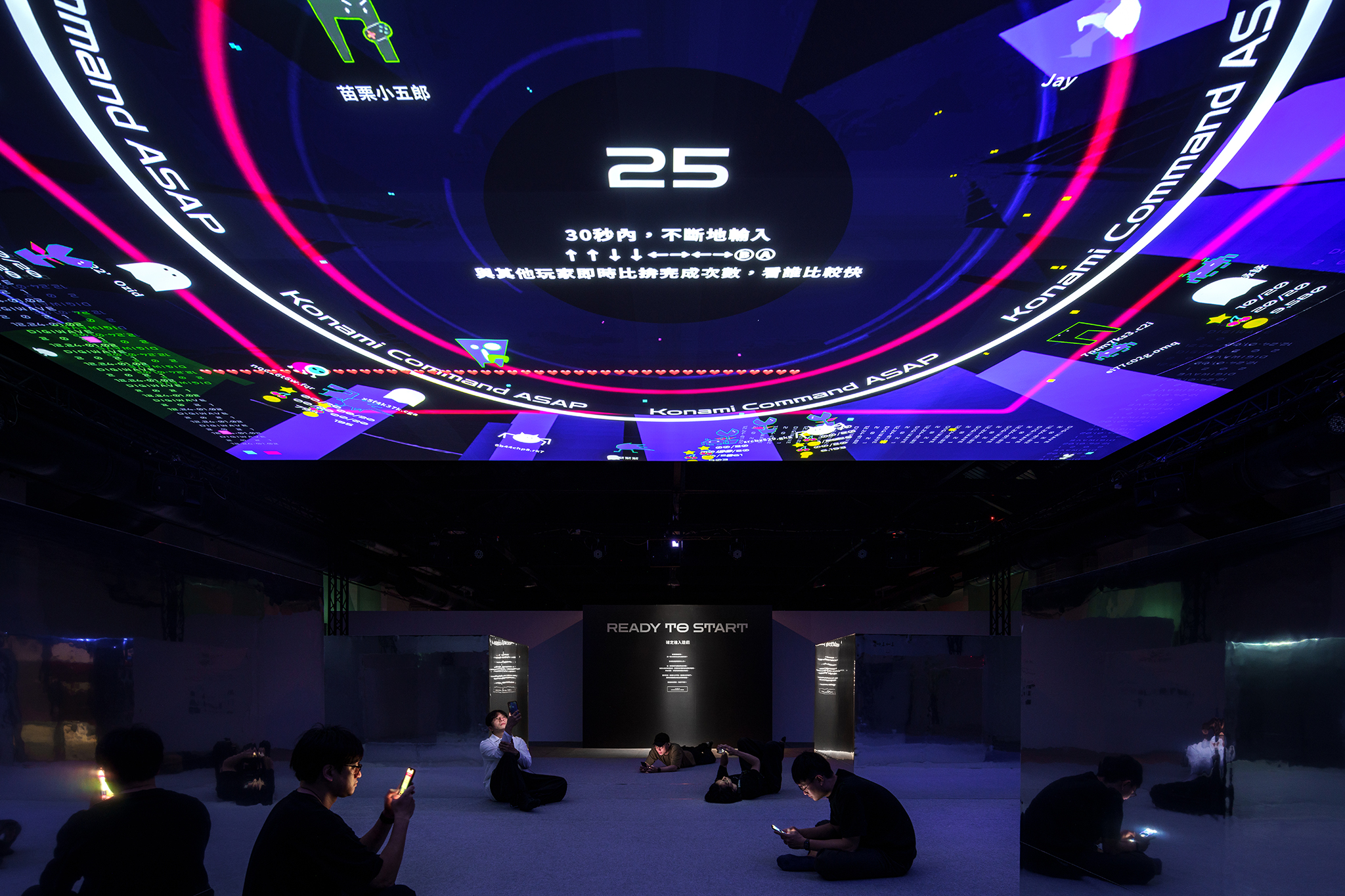

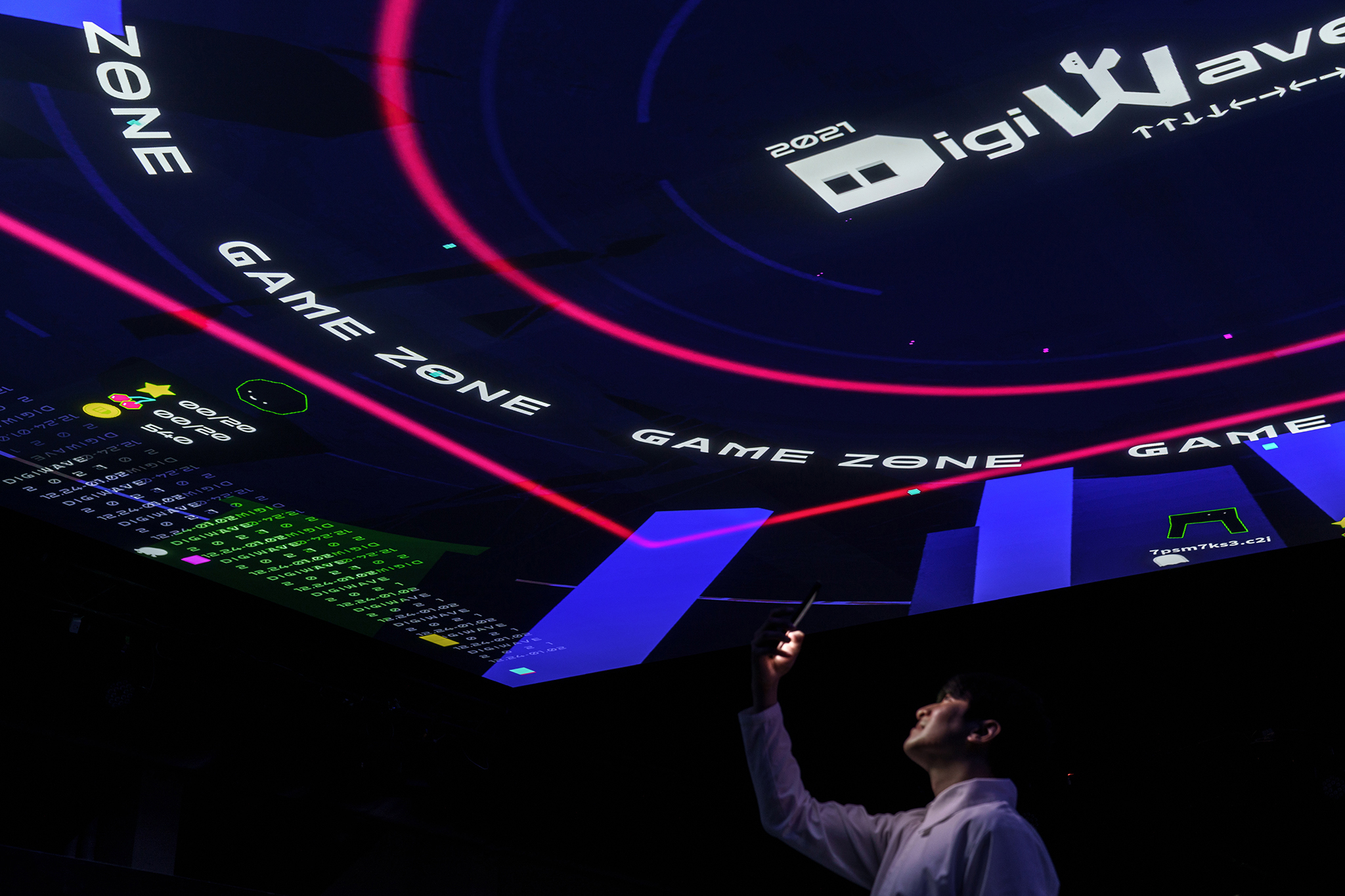

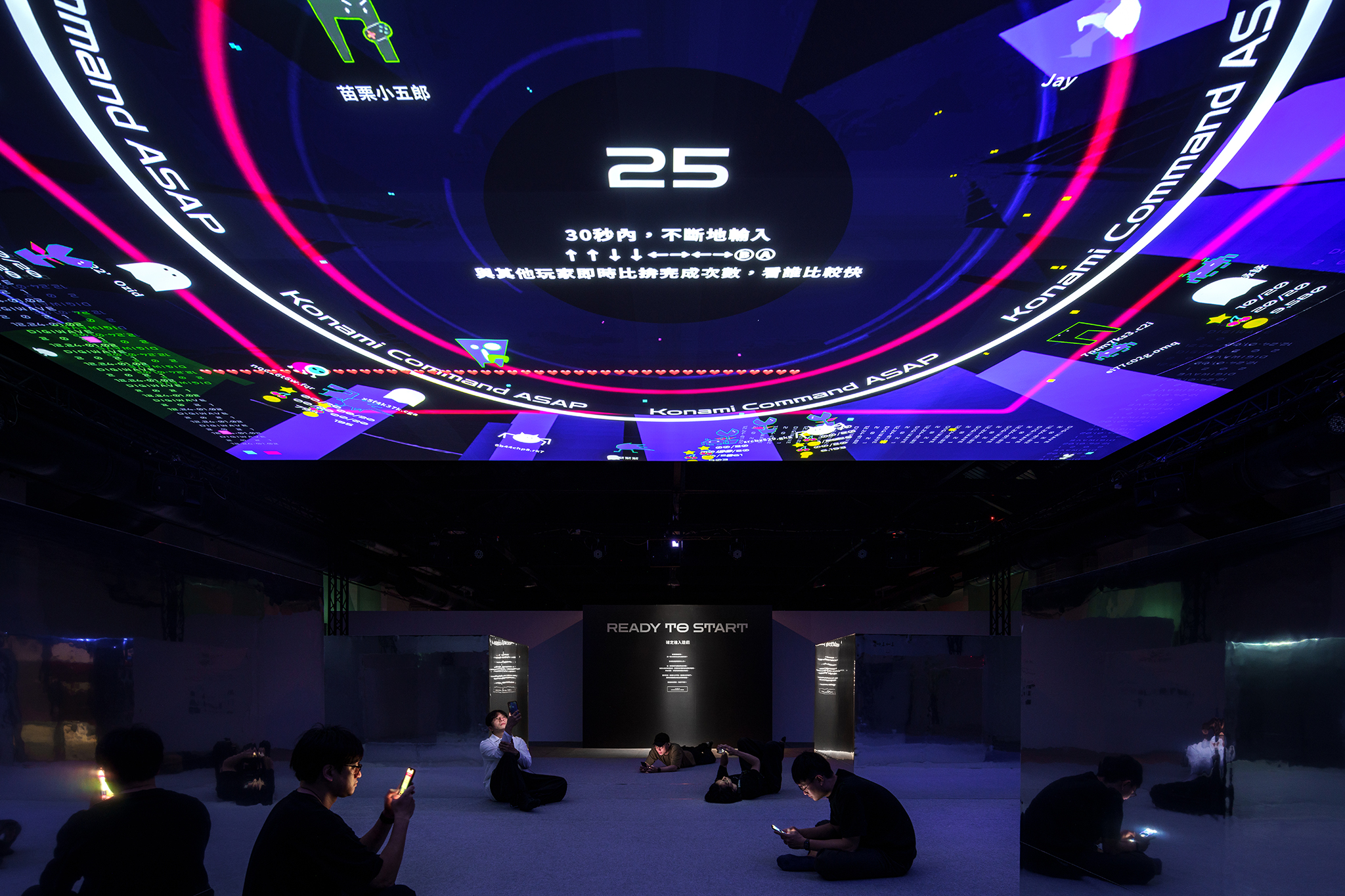

In this transition corridor, visitors log in to the experience interface built on the LINE app, create their virtual character, and learn the process and mechanism. Turning around and leaving the corridor, visitors enter the Game On main area, a video-based site that integrates the virtual and the real. In the space, the various mechanical elements that constitute video games are displayed on the front and rear feature walls, and a large 12 meters squared sky screen is placed overhead, symbolizing that this is a virtual world beneath the monitor screen.

The experience consists of 20 stages, some of which are unlocked by a four-symbol code made up of ↑↓←→ⒶⒷ that must be entered on a cellphone. These codes, of varying difficulty, are scattered around the exhibition area. Once a code is entered, the content that follows is designed around a game mechanism, so visitors come to understand video games by actually experiencing them. Some stages can be completed alone, while others are multiplayer competitions or require social interaction, reproducing the relationships between players in different types of games. This video game-like structure of stacked mechanisms maximizes visitors’ right to choose and randomly re-establishes relationships among on-site participants. The exhibition is transformed into an organism, staging a unique journey scene after scene. Many people become so absorbed in the experience that they forget the time and enter a state of total FLOW.
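To make the code mechanic concrete, the following Python sketch validates a four-symbol code drawn from ↑↓←→ⒶⒷ and looks up which stage it unlocks. The stage table and function names are hypothetical; the exhibition's real codes and its LINE-based backend are not described in this text.

```python
SYMBOLS = "↑↓←→ⒶⒷ"  # the six buttons a code may use
CODE_LENGTH = 4

# Hypothetical code-to-stage table, invented for this example.
STAGES = {
    "↑↑ⒶⒷ": "stage-03",
    "←→←→": "stage-11",
}


def is_valid_code(code: str) -> bool:
    """A code is valid when it has four symbols, all taken from SYMBOLS."""
    return len(code) == CODE_LENGTH and all(symbol in SYMBOLS for symbol in code)


def total_codes() -> int:
    """Number of distinct codes that can be formed."""
    return len(SYMBOLS) ** CODE_LENGTH


def unlock(code: str):
    """Return the stage a code unlocks, or None when the code matches no stage."""
    if not is_valid_code(code):
        raise ValueError(f"not a valid code: {code!r}")
    return STAGES.get(code)


print(total_codes(), unlock("←→←→"))
```

With six symbols and four positions there are 6^4 = 1,296 possible codes, so 20 stages occupy only a small fraction of the space and random guessing is slow compared with finding the codes in the venue.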

Behind the monitor screen,

is a virtual city-state that is known for its entertainment.

Here is the entrance to escape from reality.

However, as various worlds become more mature and complete,

what was once thought to be a fictional setting actually has precise perceptions and experiences,

and inside, there are real relationships and memories.

We suddenly awake and realize that this entrance is not a door that switches worlds,

but a passage that connects the two ends, which affect each other.

The two ends gradually blur and become inseparable.

—— Ready to Start Discourse

READY TO START

The video Ready to Start, which is broadcast on the sky screen every half hour, connects the context of the exhibition and opens up various visual memories of video games. Watching the video by looking upward, surrounded by sound, becomes an emotional ceremony of perception.

Branch of Exhibition - Yancheng 20 in 1

Unlike the main exhibition hall, which leads visitors layer by layer to the core of video games, we planned an extended, gamified real-world experience at scattered locations. It assembles 20 featured locations in Yancheng District like an all-in-one video game: through integration with the LINE app, each location becomes a unique mini-game. Along the way, visitors explore the details and elegance of this old district, as well as its reconstructed sites. Even more fascinating are the exchanges between the residents of the area and the players.

Ⓑeat Ⓑit Ⓑeat

Leo Wang feat. PUZZLEMAN

Using the unique atmosphere of Yancheng First Public Retail Market as the stage, Golden Melody Award-winning singer Leo Wang and PUZZLEMAN, a leading figure of finger drumming in Taiwan, worked together to rearrange classic video game theme songs and perform them using game consoles as instruments. With the experimental application of 5G “Co-Presence” technology, we connected different scenes across Kaohsiung.

2021 DigiWave ↑↑↓↓←→←→ⒷⒶ

Advisors: 高雄市政府, 經濟部工業局

Organizer: 高雄市政府經濟發展局

Curators: 臺灣產學策進會, 叁式

Co-organizer: 叁捌地方生活

Official Projector Brand: Panasonic

Exhibitors: Team9, 智崴資訊, 赤燭遊戲, 信仰遊戲, 柒伍壹遊戲, SIGONO, 南瓜虛擬科技, 宇峻奧汀, 中華網龍, 華泰行, 舊遊戲時代, Giloo 紀實影音, BLINKWORKS LTD, 啾啾鞋

Speakers: 台灣通勤第一品牌, 張志祺, GamerBee, 施俞如, 李勇霆, 程思源, 陳威帆, 林業軒

Sponsors: Giloo 紀實影音, LINE, 冠嘉系統科技有限公司, VR 體感劇院, 華泰行, 舊遊戲時代

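Brand Coordination: 趙逸秋, 吳淑靖

Project Manager: 陳威廷

Art Director: 何庭安

Visual Design: 何庭安, 顏晧真, 江家伶, 黃偉哲, 李若菩, 黃群傑

Interface Design: 江家伶, 何庭安, 黃偉哲

Technology Director: 楊家豪

Programming: 蔡佳礽, 楊家豪, 張存宇

Hardware Director: 呂承翰

Hardware Engineering: 呂承翰, 張存宇

Immersive Sound System Design and Execution: 鐵吹製作

Hardware Installation: 冠嘉系統科技有限公司

Spatial Design: 山陽山陰

Exhibition Construction Coordination: 有用設計

Executive Planning: 曾煒傑, 曹家寧, 張存宇, 山陽山陰, 陳子昀, 張楷翊

Content Consulting: Game 話不加醬, 陳威帆, 張志祺, 吳彥鋒

Marketing Coordination: 蔡宜伶, 莊虎牙

Social Media Marketing: 蔡宜伶, 莊虎牙, 蘇心怡

Public Relations Coordination: 紀婉婷, 張文殊

Exhibition Operations: 陳威廷, 林家蔚, 張楷翊, 黃薇如

Exhibition Execution: 林家蔚, 林慧婷, 李燕萍, 李佳蓉, 黃品瑜, 謝承芳, 汪乃心, 陳佳玉, 鄭伃涵

Exhibition Photography: 李易暹, 顏歸真, 曾國書, 郭濬緯

Exhibition Videography: 李金勳, 陳弘軒, 陳俊甫, 高偉鳴

Video Editing: 李金勳

Special Thanks: 陳其邁, 廖泰翔, 迪拉胖, Ru味春捲, 張志祺, 詹朴, 貝莉莓, 鄭宜農, 劉真蓉, 辜達齊, 劉威利, 陳韋安, 王宏煒, 賴柏榕, 王瀚宇, 湯鈞傑, 楊適維, 楊曜任, 陳廷愷, 吳思慧, 余欣蓬, 陳炯廷, 董哲延, 語聲者王喬蕎, 嚴堯瀚, 張家兄弟, 張漢杰

Exhibition Attendants: 黃若嘉, 陳玟寧, 李虹蒲, 戴瑋庭, 羅政樺, 陳嘉妤, 陳姿妤, 黃宜家, 王渝茜, 張家禎, 蘇子雯, 蘇子雯, 陳彥宇, 李鈺慈, 王紫縈, 吳沛璇, 侯宇哲, 郭岱昀, 黃鈺雯, 洪楦宜, 黃琪崴, 黃勝崴, 紀萱, 蕭玉欣, 江珮綺, 鍾佳穎, 王可媗, 范原銓, 詹貞蘭, 蔡品萱, 何盈柔, 劉千嘉, 陳采伶, 李燕如, 徐如盈, 吳文瑄, 林意然, 賴韻芮, 郭晴欣, 徐子濯, 劉思霈, 張芝維, 張秀榕, 鄭偉婷, 張瑜, 熊翊廷, 呂家宜, 鄭美儀, 陳涵娟, 陳仲孝, 王冠詠, 高志豪, 黃宜家, 戴瑋庭, 陳嘉妤, 陳玟寧, 黃若嘉, 劉逸涵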

Ready to Start

Producer: 何庭安

Creative Director: 曾煒傑

Art Director: 何庭安

Technology Director: 吳克軍

Executive Director: 紀昀

Motion Design: 吳克軍, 何庭安, 顏晧真, 黃偉哲

Arrangement / Mixing: 黃鎮洋

360 Photography: 溫崇涵

位元嘻哈 Ⓑeat Ⓑit Ⓑeat

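Producer: 呂承翰

Art Director: 何庭安

Creative Director: 曾煒傑

Performers: Leo王, PUZZLEMAN

Motion Design: 柚子, 顏晧真, 李若菩

Live Visual Performance: 柚子

Technology Director: 吳思蔚

Stage Hardware Coordination and Execution: 鐵吹製作

Programming: 吳思蔚, 蔡佳礽, 楊家豪

Hardware Director: 呂承翰

System Planning: 呂承翰, 張存宇

Network Engineering: 洋洋實業有限公司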

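Yancheng 20 in 1

Curator: 叁捌地方生活

Curatorial Consultant: 叁式 Ultra Combos

Curated by: 邱承漢

Initial Concept: 盧映竹

Creative Design: 曾國鈞

Planning and Execution: 蘇眉桂

Programming: 陳廷愷, 蔡佳礽, 楊家豪

Shop Liaison: 曾國鈞, 蘇眉桂, 羅文昕

NPC Support: 林杰慷

Special thanks to the Yancheng residents and shops who served as NPCs:

鹽埕第一公有市場所有攤商與店家, Booking, 廢墟 bar, 高鈺鈕釦, 泰昌西服, 金樹帽蓆行, 三山國王廟, 老耄, 超級鳥百貨, 沙多宮, 信東食品行, 佬掉牙, 正麗髮型, 空腹虫大酒家, 銀座聚場, 新樂里廖明烈里長

Special thanks to the supply-station shops that restored players' health and spirit:

阿貴虱目魚店, 姊妹老五冷飲早餐店, 山壹旗魚食製所, 小堤咖啡

港園牛肉麵, 崛江麵, 阿英排骨飯, 永和小籠包, 香茗茶行

李家圓仔冰, 阿男燒烤, 大胖豬油拌麵, 酒場清志郎

喵姨蛋餅, 50年杏仁茶, 高雄婆婆冰, 阿綿手工麻糬

老屁股音樂屋, 純愛氷菓室 PUPPY LOVE, 牛津啤酒屋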

Related Works:

Project Type: Entertainment

Category: Event

Client: Gogoro

Year: 2021

Location: App Store / Pop up store

App download link: Gogoro MixDance (iOS only)

#AR

#App #Kiosk

#BodyTracking

#MixDance

#GogoroVIVAMIX

#隨變我都行

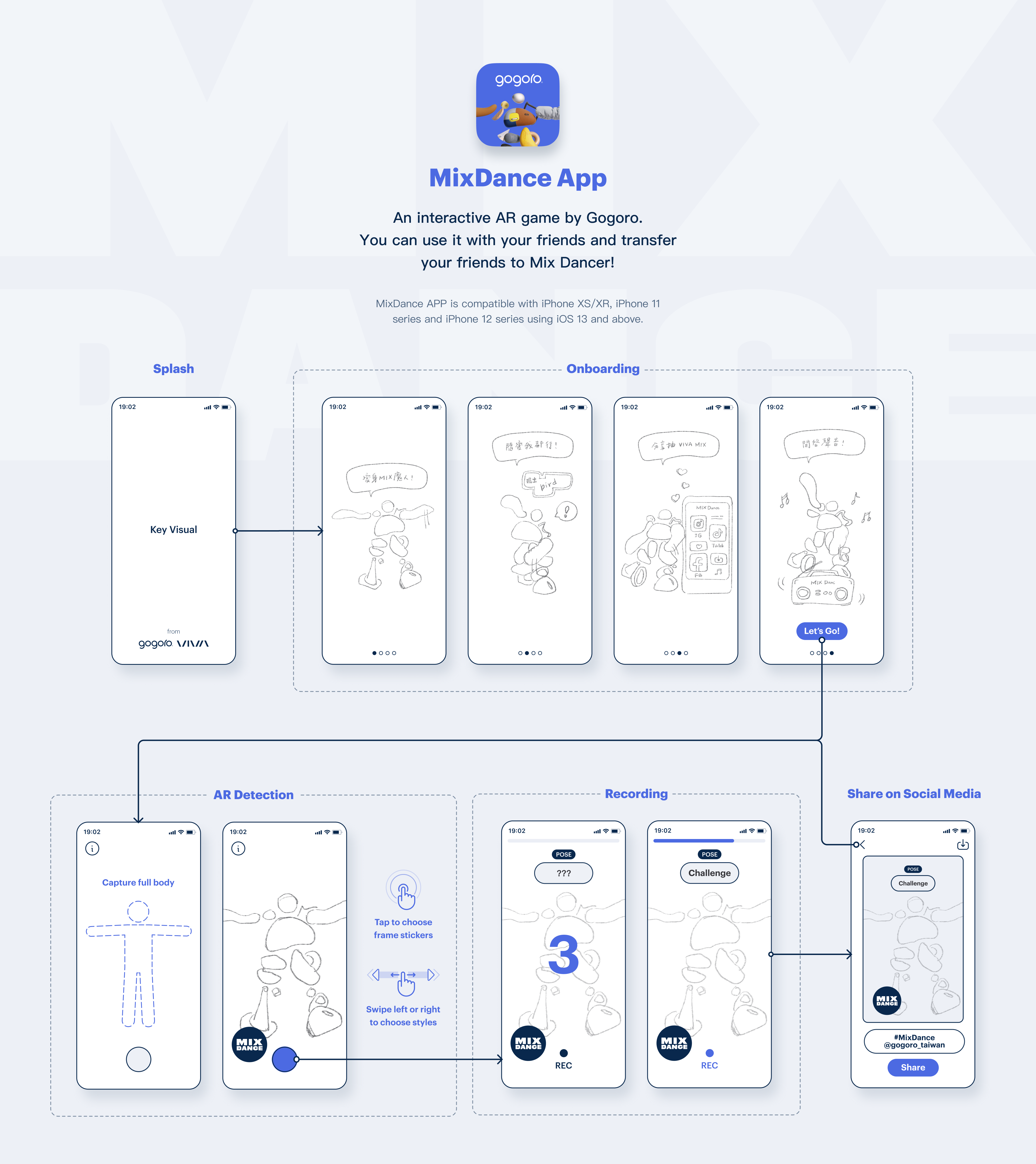

Gogoro MixDance

In the first quarter of 2021, Gogoro announced its newest model – VIVA MIX.

Built around the idea of “mixing,” the model offers six colors, exclusive music, and personalized accessories, making riding a motorcycle an extension of one's lifestyle. Gogoro even created a character, “Mix Anthropomorphism,” that personifies the spirit of the model; as the public follows the character's changing dance moves, their impression of the model deepens.

Personality of the Model Becomes a Digital Fun Experience

Transform through AR and Dance to the Music

Interface Design

To deliver the key feature of “fun,” the most important task is letting users complete challenges intuitively. We therefore adopted the conventions of familiar video-recording interfaces to simplify the experience and reduce the learning curve for first-time users, helping them join the activities and challenges quickly.

User Flow

Filter Style

Instructional Page

The instructional page is the user’s first impression of the App. The rhythmic Mix Anthropomorphism, together with a dialog box and a vivid style of introduction, lets users ease quickly into the experience. To keep movements continuous when switching pages, the program automatically interpolates between the two actions, so the character can wander across screens freely and users can switch pages in any order they like!
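The blending between two actions described above can be sketched as a simple pose interpolation. In this Python example a pose is a dictionary of joint angles in degrees; the joint names, the smoothstep easing, and the frame count are assumptions chosen for illustration, and a production rig would more likely blend rotations as quaternions.

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1], so motion starts and ends gently."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def blend_pose(start: dict, end: dict, t: float) -> dict:
    """Blend every joint angle from the start pose toward the end pose."""
    eased = smoothstep(t)
    return {joint: lerp(start[joint], end[joint], eased) for joint in start}


def transition_frames(start: dict, end: dict, frame_count: int) -> list:
    """Generate the in-between poses shown while the page changes."""
    if frame_count < 2:
        raise ValueError("need at least two frames")
    return [blend_pose(start, end, i / (frame_count - 1)) for i in range(frame_count)]


# Hypothetical poses: the last pose of one page and the first pose of the next.
wave = {"elbow": 0.0, "knee": 90.0}
dance = {"elbow": 90.0, "knee": 30.0}
frames = transition_frames(wave, dance, 5)
print(frames[2])
```

Because any pair of poses can be blended this way, pages do not need hand-authored transitions for every possible order of navigation.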

Style Components

Mix Anthropomorphism is composed of random parts from the Gogoro vehicle, so its styling must establish a correspondence between “vehicle parts” and “human body structure.” Once the style of the main body structure and the limbs is set, geometric shapes are added to randomly fill the gaps in the main structure. The components also pick up the colors of the user’s clothing, making the components attached to the body even more personalized.

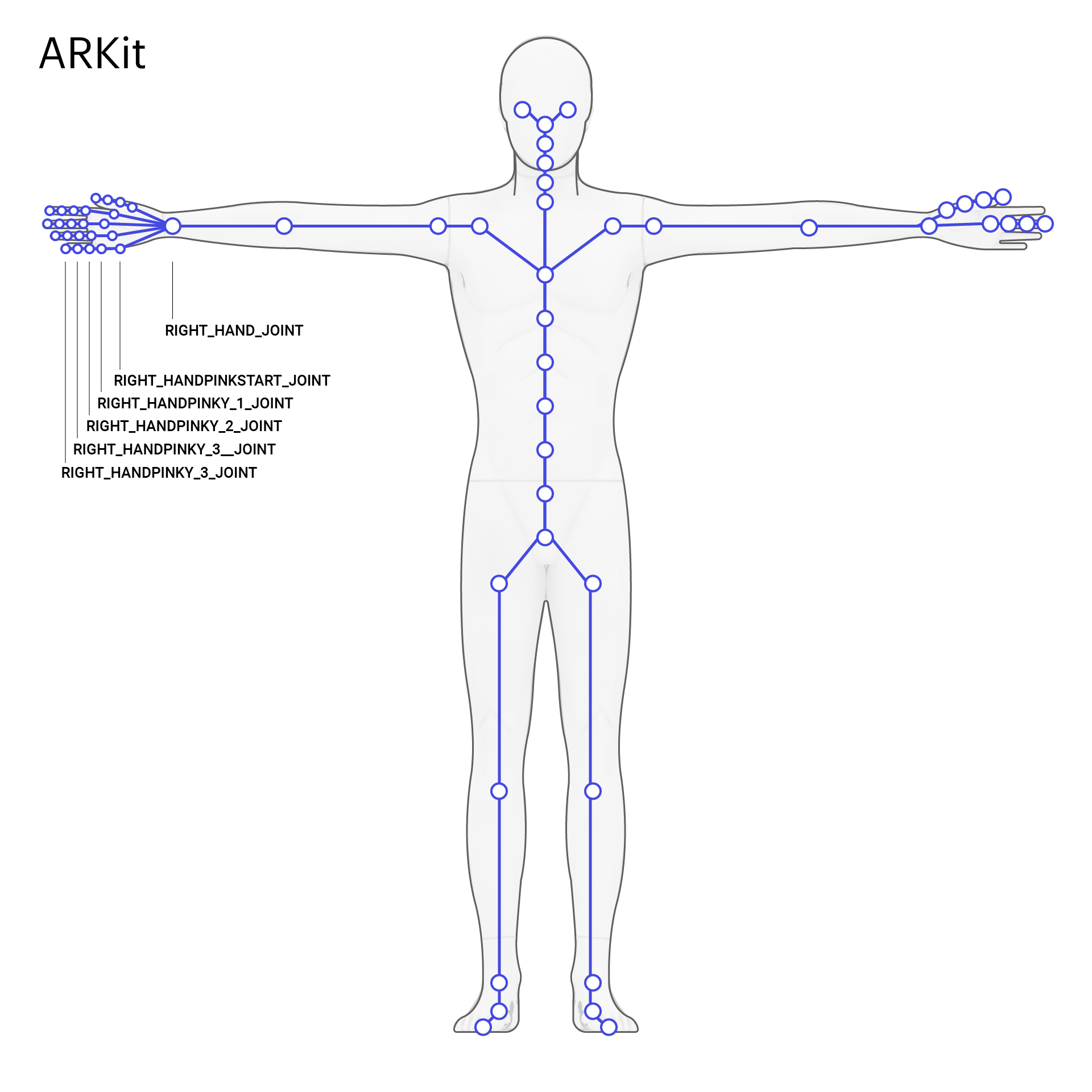

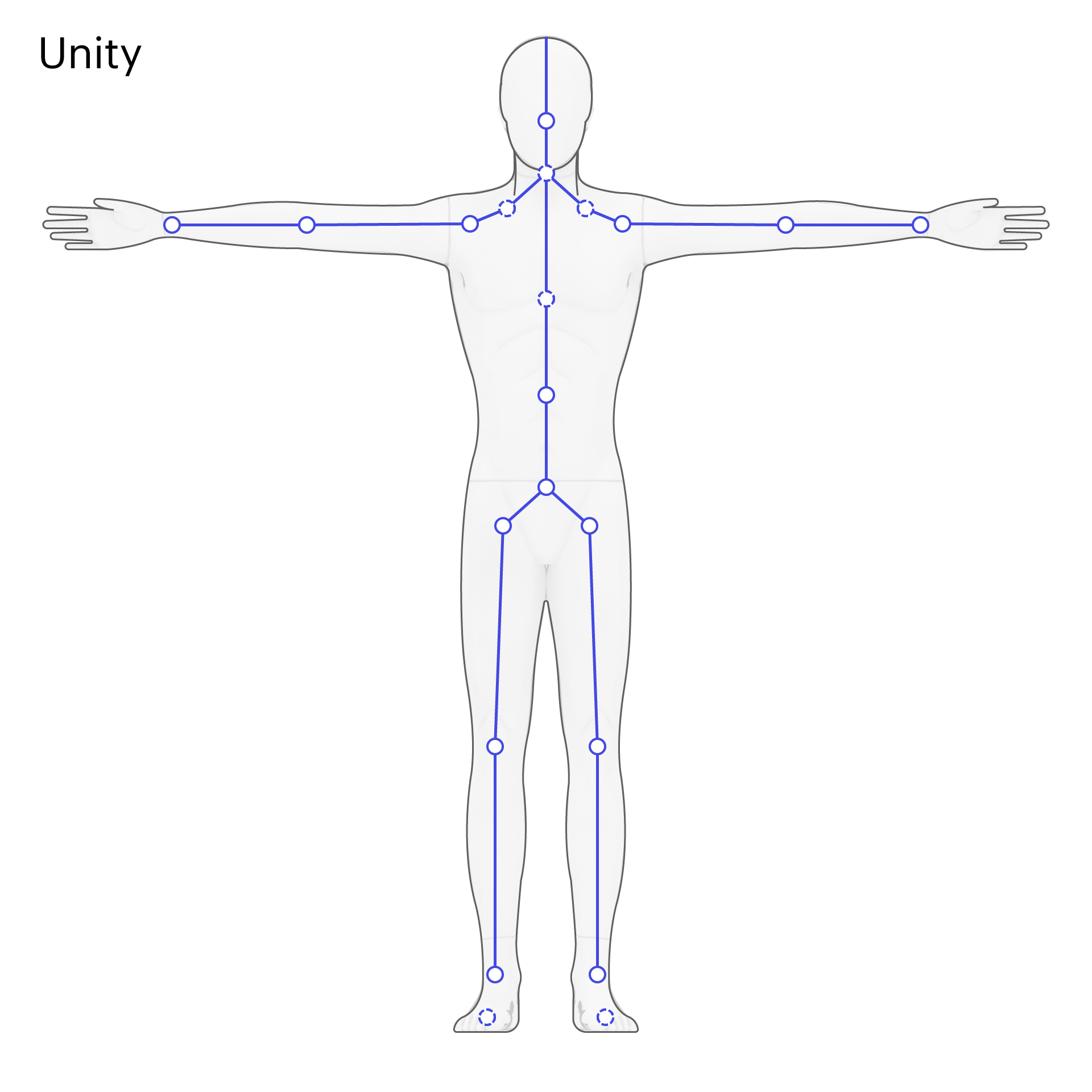

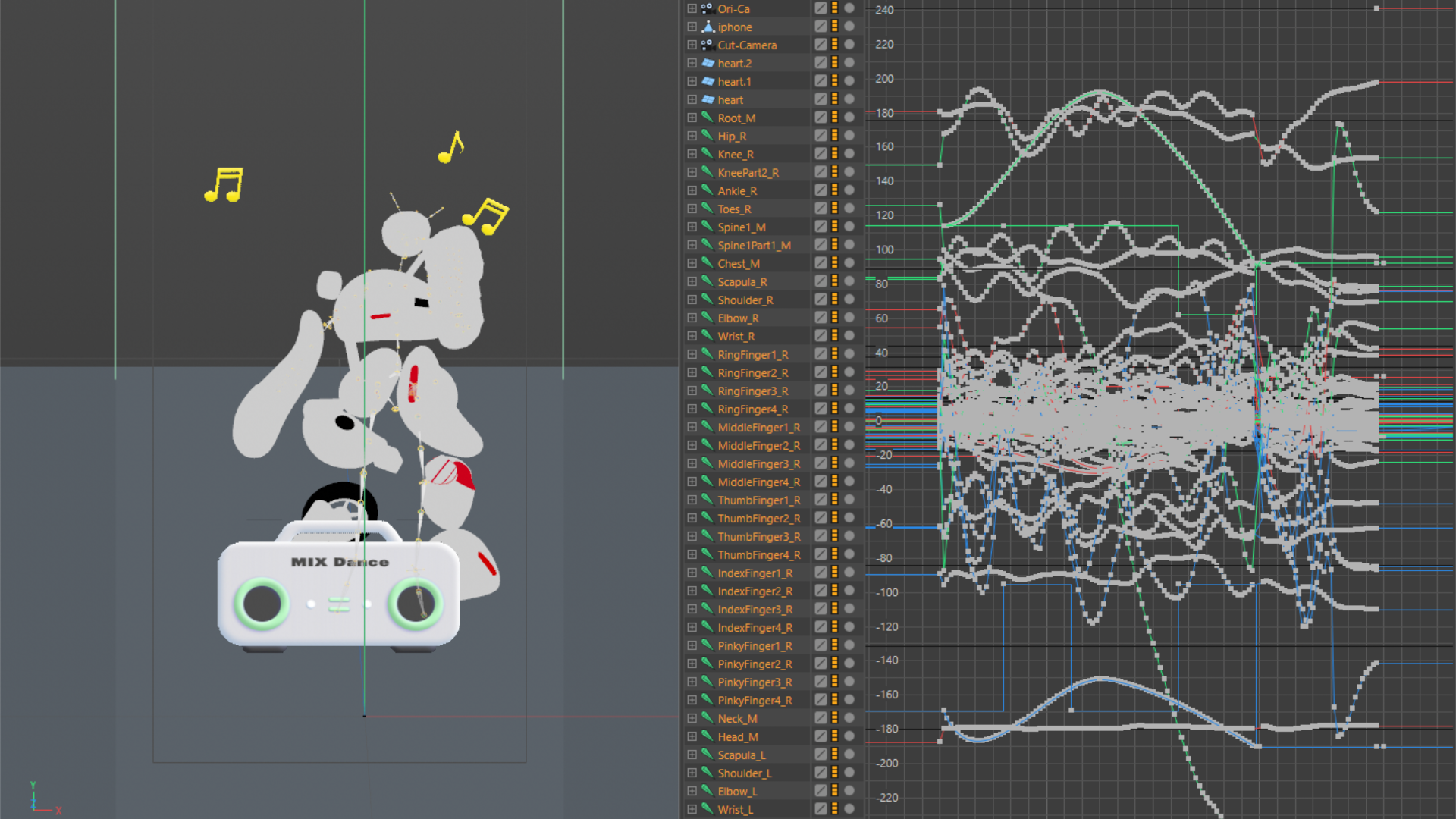

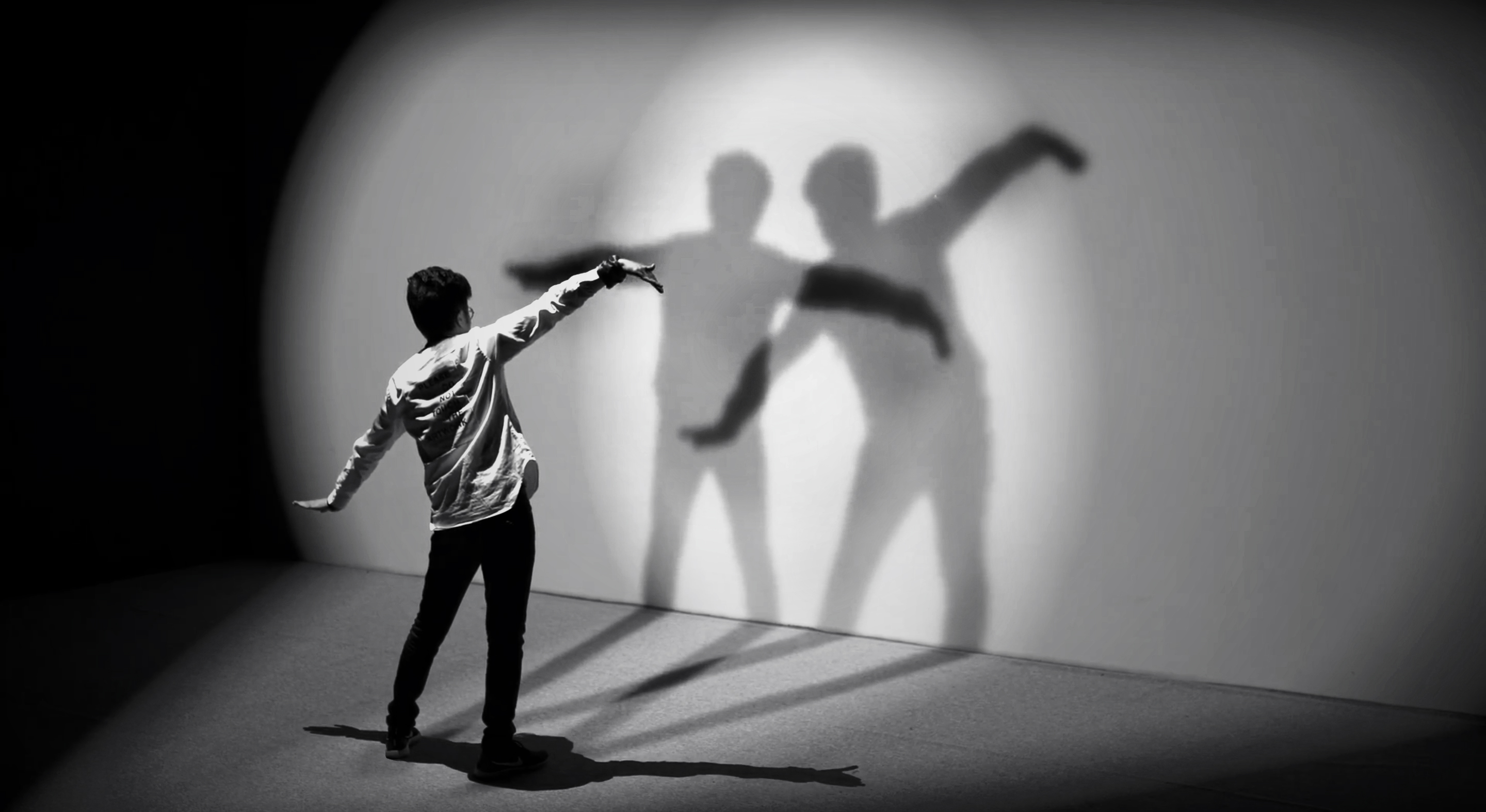

Movement Detection

We used the latest body tracking technology to present the AR effects. In designing the movements, we made the “composition” process livelier: when the camera detects a human skeleton, the components scattered in the air quickly gather. After the user leaves the frame, the components lose gravity, bounce back into the air, and float slowly.
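A minimal way to model this gather-and-float behavior is a per-frame update with two modes: ease toward a body joint while a person is tracked, and kick upward then settle into a slow drift once tracking is lost. The Python sketch below is an assumption-laden toy; the class, the rates, and the 2D coordinates (y pointing up) are invented for the example and do not come from the app.

```python
from dataclasses import dataclass


@dataclass
class Component:
    x: float
    y: float
    vy: float = 0.0        # vertical speed while floating
    attached: bool = False  # True while following a tracked joint


def step(part: Component, target, dt: float, gather_rate: float = 8.0,
         bounce_speed: float = 2.0, float_speed: float = 0.1,
         drag: float = 3.0) -> None:
    """Advance one component by dt seconds; target is (x, y) or None."""
    if target is not None:
        # Body detected: close a fixed fraction of the remaining gap each frame.
        blend = min(1.0, gather_rate * dt)
        part.x += (target[0] - part.x) * blend
        part.y += (target[1] - part.y) * blend
        part.vy = 0.0
        part.attached = True
        return
    if part.attached:
        # Body just left: give the part an upward kick.
        part.vy = bounce_speed
        part.attached = False
    # Ease the vertical speed toward a slow, steady float.
    part.vy += (float_speed - part.vy) * min(1.0, drag * dt)
    part.y += part.vy * dt


part = Component(x=0.0, y=0.0)
for _ in range(60):               # one second with a tracked joint at (1, 2)
    step(part, (1.0, 2.0), 1 / 60)
gathered = (part.x, part.y)
for _ in range(300):              # five seconds after the user walks away
    step(part, None, 1 / 60)
print(gathered, part.y, part.vy)
```

The fixed-fraction approach gives the quick snap toward the body, while the decaying kick reproduces the bounce followed by a slow float.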

AR Technology

To let more people experience it, we provide two carriers: an App and a Kiosk.

The difficulty in fitting the character to the user is that the positions and numbers of joints in the skeleton data differ across platforms, and the rigging methods of different models differ as well.

The iOS App uses the skeleton data provided by ARKit to control the character; Meshing and Light Estimation integrate the virtual character more closely with reality and enhance the sense of immersion. For the kiosk, we chose Kinect V2 as the skeleton detection sensor and used its built-in Gesture feature so that users can intuitively start the game with their body movements.
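One common way to cope with platforms that report different joints is to translate each platform's skeleton into a shared set of rig joints through a lookup table. The Python sketch below illustrates that idea only; the joint names are placeholders rather than actual ARKit or Kinect identifiers, and the real project may have handled the mismatch differently.

```python
Vec3 = tuple[float, float, float]

# Joints the character rig needs (hypothetical names).
RIG_JOINTS = ["shoulder_l", "elbow_l", "wrist_l"]

# Placeholder per-platform tables: source joint name -> rig joint name.
APP_TO_RIG = {"app_left_shoulder": "shoulder_l",
              "app_left_forearm": "elbow_l",
              "app_left_hand": "wrist_l"}
KIOSK_TO_RIG = {"KioskShoulderLeft": "shoulder_l",
                "KioskElbowLeft": "elbow_l"}


def retarget(source_joints: dict[str, Vec3],
             mapping: dict[str, str]) -> dict[str, Vec3]:
    """Rename tracked joints into rig joints, dropping anything unmapped."""
    return {rig_name: source_joints[source_name]
            for source_name, rig_name in mapping.items()
            if source_name in source_joints}


def missing_joints(rig_pose: dict[str, Vec3]) -> list[str]:
    """List rig joints the platform could not supply, so they can be estimated."""
    return sorted(set(RIG_JOINTS) - set(rig_pose))


kiosk_frame = {"KioskShoulderLeft": (0.0, 1.4, 2.0),
               "KioskElbowLeft": (0.1, 1.1, 2.0),
               "KioskSpineBase": (0.0, 0.9, 2.0)}
pose = retarget(kiosk_frame, KIOSK_TO_RIG)
print(pose, missing_joints(pose))
```

Keeping the tables as data means the animation code only ever sees rig joints, and supporting another sensor becomes a matter of adding one more table.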

Visual Gesture Builder training

Body Tracking

Image Credit: Apple

Making of

Gogoro MixDance

Client: Gogoro

Chief Director: Gogoro Creative Team

Executive: Ultra Combos

Project Manager: Prolong Lai

Producer: Hoba Yang

Creative Director: Chianing Cao

Art Director: Lynn Chiang

Technology Director: Hoba Yang

Hardware Director: Prolong Lai

Programmer: Hoba Yang, Ke Jyun Wu

UI Designer: Lynn Chiang, Choong-Wei Ng

3D Object & Animator: Hauzhen Yen

Technical Assistant: Chia-Yun Song

Character Design: MIXCODE / Tim Tseng, Neil Lin

Music Design: YUNG BAE

Music Editor & Design: HYPERLUNG STUDIO / PONGO

Exhibition Design: KY-POST

Related Works:

Project Type: Communication / Entertainment

Category: Branding / Event

Client: Gogoro

Year: 2021

Location: Taipei Nangang Exhibition Center

#GenerativeArt

#Installation

#Projection Mapping

#AGV #Stage Design

#Motion Graphics

#Motion Capture

#Lighting Design

Gogoro VIVA MIX Smart Runway Show

In the first quarter of 2021, Gogoro announced its newest model – VIVA MIX.

As the name suggests, it emphasizes the spirit of mixing. It combines the characteristics of previous models and achieves new technological breakthroughs in the riding experience. It also adds the POP personalized accessory system, and a range of motorcycle colors with music-themed elements makes each rider's personal style even more vivid. VIVA MIX is Gogoro’s recent pinnacle product, balancing market demand, user experience, and brand value, and Gogoro invited the team to jointly plan the motorcycle's debut.

A Fashion Show with the Motorcycle as the Main Subject – Where “Smart” and “Variability” are Displayed Together

To present the key characteristic of “Smart,” the team worked with Gogoro to use AGVs (Automated Guided Vehicles) from the factory’s production line as carriers that accurately guide the new motorcycles into and out of the venue along the scheduled route, just like models on a runway.

For “Variability,” Gogoro’s design department came up with four main themes: “Rainbow,” “Earthy,” “Grey,” and “Alive.” They customized 24 special motorcycle colors, different from the mass-produced ones, to highlight the diversity and possibilities of the VIVA MIX series.

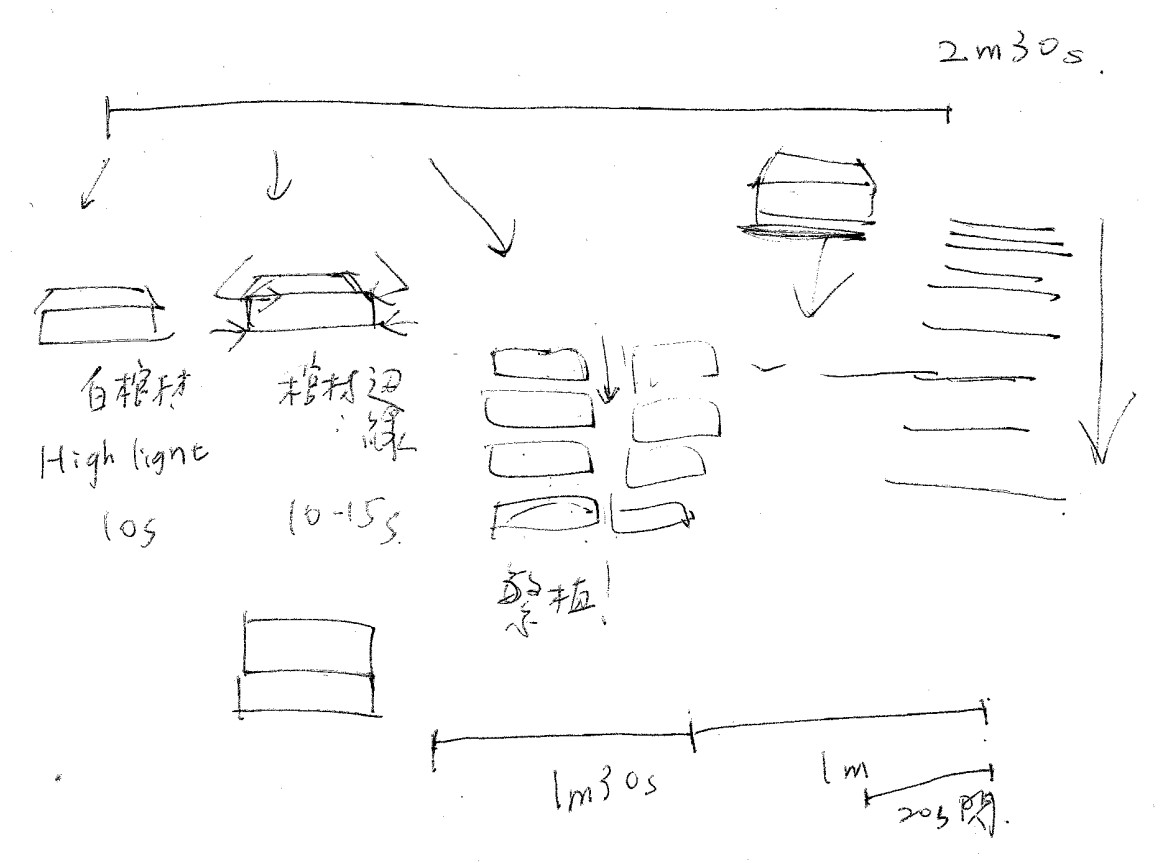

Early Design Sketches

Dancing Mojo

Visual ID

- Acappella White

- Rap Red

- Reggae Hazel

- Beatbox Grey

- Funk Purple

- Techno Blue

Rainbow

Earthy

Grey

Alive

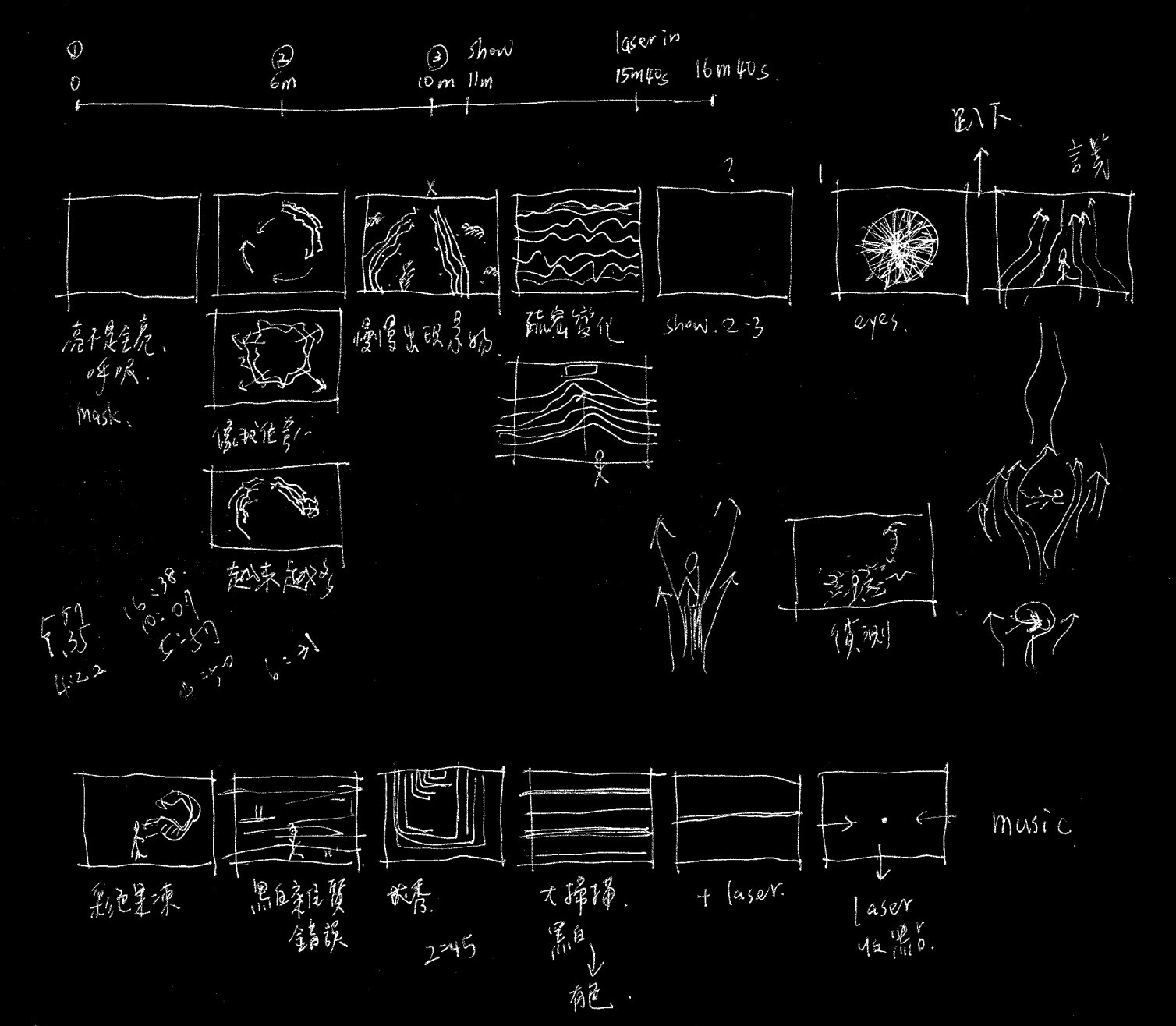

From Mysterious Sci-Fi to Gorgeous Colors

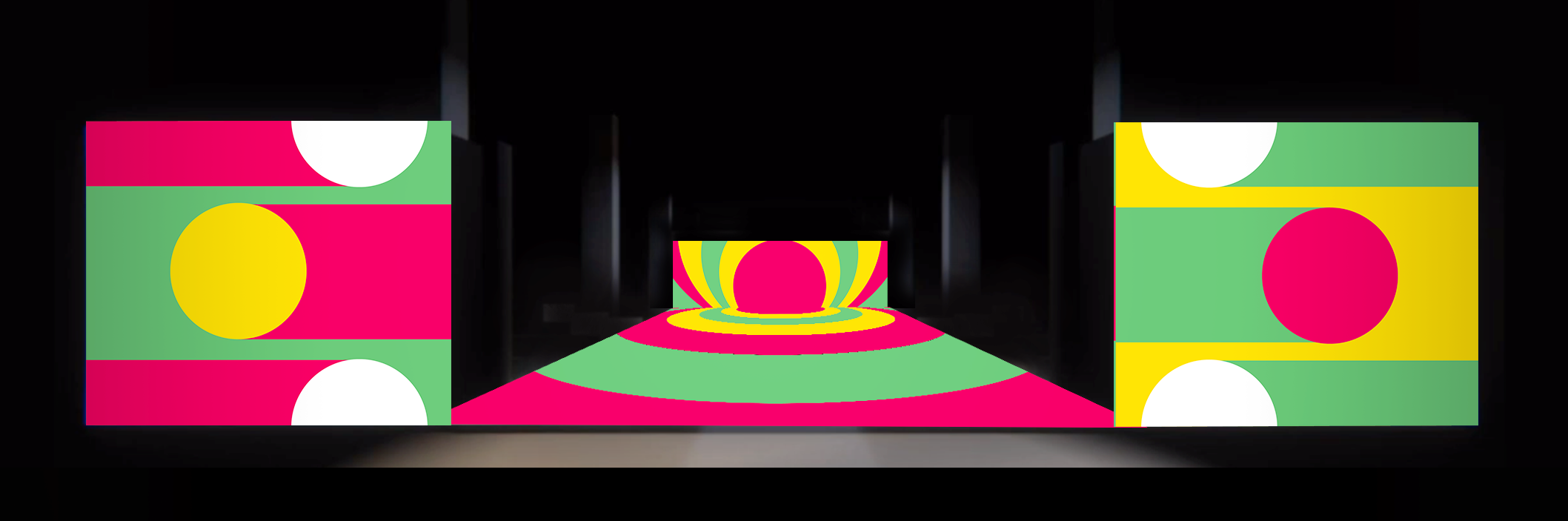

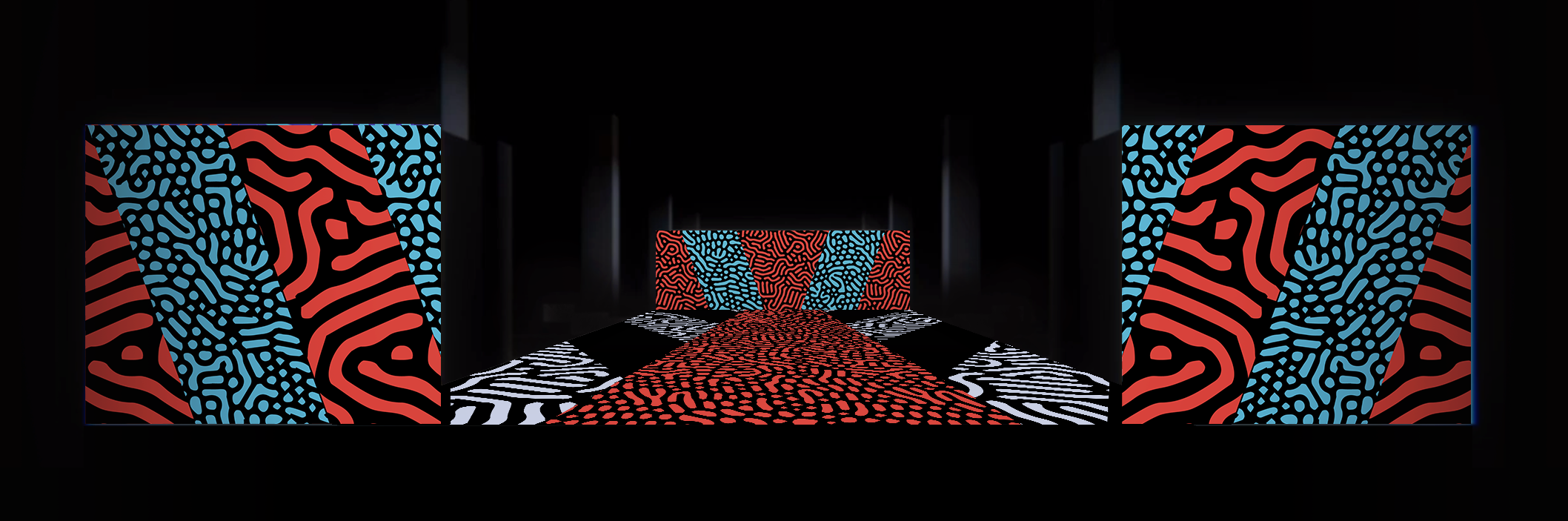

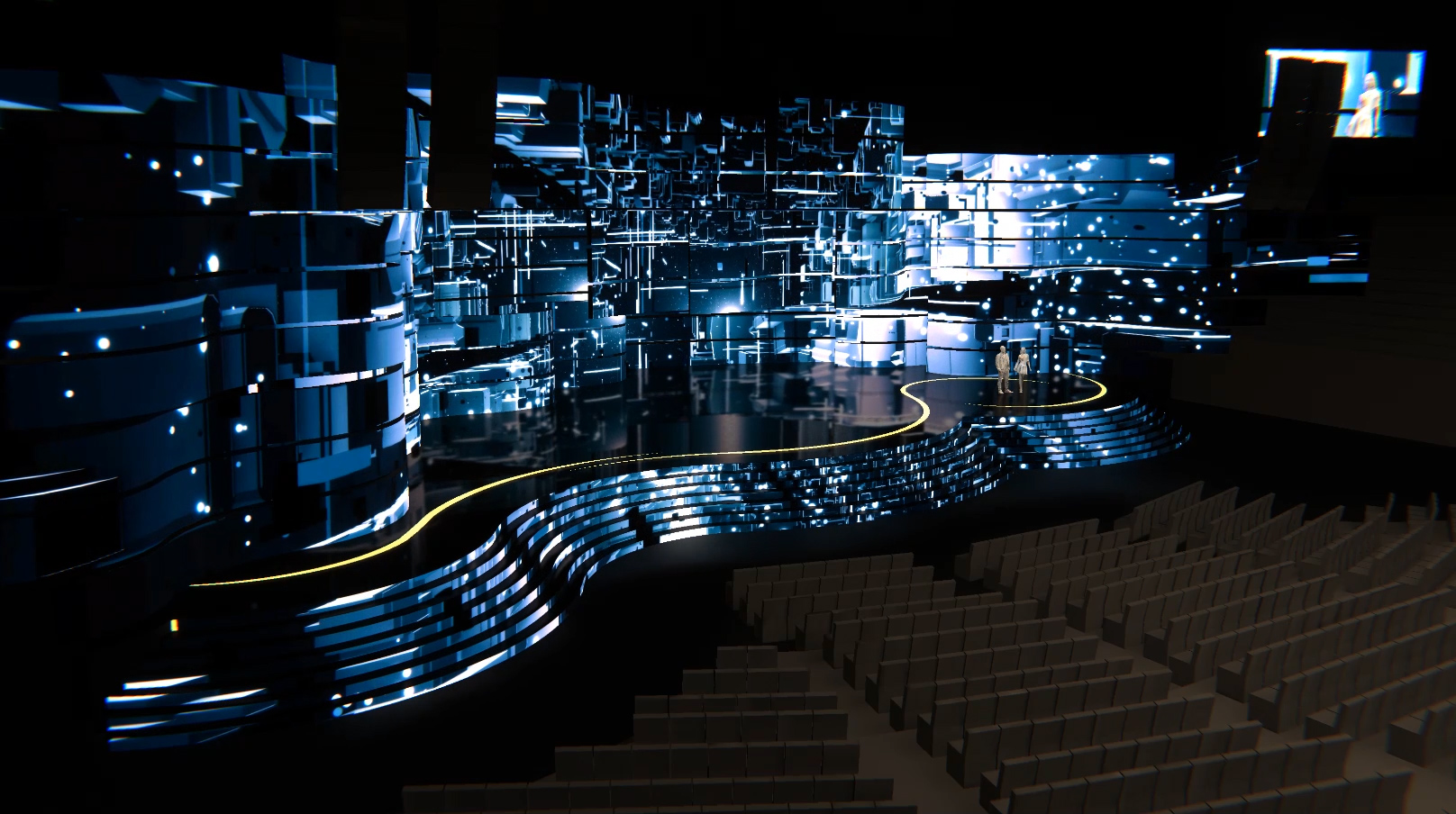

In response to the brand’s existing image and the characteristics of this newly released model, we designed the mood of the debut show to start from a more mysterious sci-fi feel and move towards brilliant thematic colors.

The performance starts with partial lighting that gradually reveals the depth and breadth of the space, then extends through the screen into a virtual (3D wireframe) space, building a sense of technology and anticipation. As the rhythm intensifies, colors invade, and the scene is disassembled and restructured into a world of color. The motorcycles then enter the runway, with the color-themed VIVA MIX models appearing in time with the music. The performance ends in a party-like celebratory atmosphere.

Design Tools for Near On-Site Experience

The show was built from scratch in a space of 7,560 square meters. To work out the relationships between the audience, AGVs, VIVA MIX, lighting, LED, projection, and other special media within the space, we created a simulator in the 3D game engine Unity and did our best to reproduce the scene so that what we imagined could be made concrete.

Through a series of experiments and measurements, the physical characteristics and parameters of the real-world AGV were reproduced in the simulator. With the simulator, visual artists could fine-tune the rhythm and balance between media from the audience's perspective. During the two-month production process, the different teams relied on the near-real simulator images to communicate and align on what they were imagining.
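To give a feel for what reproducing a vehicle's physical parameters in a simulator involves, here is a small Python sketch of a vehicle travelling along a straight track under speed and acceleration limits. The original simulator was built in Unity; this is a separate illustration, and the parameter values and the `simulate_agv` function are assumptions rather than measured AGV data.

```python
def simulate_agv(distance: float, max_speed: float, max_accel: float,
                 dt: float = 0.01) -> list[tuple[float, float, float]]:
    """Drive along a straight track; return (time, position, speed) samples."""
    if min(distance, max_speed, max_accel, dt) <= 0:
        raise ValueError("all parameters must be positive")
    time = position = speed = 0.0
    samples = [(time, position, speed)]
    while position < distance:
        remaining = distance - position
        # Highest speed from which the vehicle can still brake to a stop in time.
        braking_limit = (2.0 * max_accel * remaining) ** 0.5
        target = min(max_speed, braking_limit)
        if speed < target:
            speed = min(target, speed + max_accel * dt)  # accelerate
        else:
            speed = max(target, speed - max_accel * dt)  # cruise or brake
        position = min(distance, position + speed * dt)
        time += dt
        samples.append((time, position, speed))
    return samples


# Hypothetical numbers: a 10 m run, 1 m/s top speed, 0.5 m/s^2 acceleration.
run = simulate_agv(distance=10.0, max_speed=1.0, max_accel=0.5)
arrival_time = run[-1][0]
print(round(arrival_time, 1))
```

With timings like this available before the hardware is on site, lighting cues, projection, and music can be aligned to when a vehicle will actually reach each point of the runway.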

Magical Space Rendering Experience

At the venue, besides letting visitors get a feel for the motorcycle's handling and riding comfort, we created an interactive riding experience around the “Total Magic” concept. We simplified the six color systems of VIVA MIX into vertical and horizontal lines that interlace to form extremely pure color blocks. When the different VIVA MIX color systems move around, a sensor detects the color changes and triggers the projected color blocks to flip, turning the entire space into a gradient spectrum and breaking the boundary between the digital and physical realms.

Co-Creation Model

During our cooperation, each side expressed its point of view on “Technological Experience” and “Brand Aesthetics” and let these ideas bounce around for in-depth co-creation and collaboration, so that the presented media and themes align with the brand’s communication strategy and lexicon.

Gogoro VIVA MIX Smart Runway Show

Client:Gogoro

Chief Director:Gogoro Creative Team

Director & Executive:Ultra Combos

Project Manager:William Liu

Producer:Nate Wu

Creative Director:Jay Tseng

Art Director:Ting-An Ho

Motion Designer:Ting-An Ho, Hauzhen Yen, Lynn Chiang, Group.G, 1000 Cheng

Generative Designer:Ke Jyun Wu

Character Design:Mix Code

Motion Capture Operator:MoonShine Animation

Choreographer:Les Petites Choses Production

Technology Director:Nate Wu

Programmer:Nate Wu, Hoba Yang, Wei-An Chen, Reng Tsai

Hardware Director:Herry Chang

Hardware Engineering:Herry Chang, Alex Lu, Chia-Yun Song, Chia-Wei Lin

Executor Team:Prolong Lai, Joyce Huang, Hsin Chen, Chi-feng Ying, Choong-Wei Ng

AGV Trackway Design:Glenn Huang

AGV Programming:Reng Tsai, Wei-An Chen, Chia-Yun Song, Nate Wu

AGV Technology Director:ECON Robot

Spatial Design & Construction:Event Design

Lighting Designer:Rokerfly Design

Music Designer:DJ QuestionMark(Chi-Shuan Ying)

Network Engineer:KlickKlack Communications

Stage Manager:Hsu Cheng Lei

Timecode Programming:Wei-An Chen

Visual Operator:Wei-An Chen

Rider Casting:OOAD

Program Director:YAHOO TV

Filming Team:Asking Gee

Editor:Asking Gee, Nanez Chen

Project Type: Artwork, Entertainment

Category: Event, Exhibition

Year: 2020

Venue: Kaohsiung Pier-2 Art Center

#Laser

#GenerativeArt

#Installation

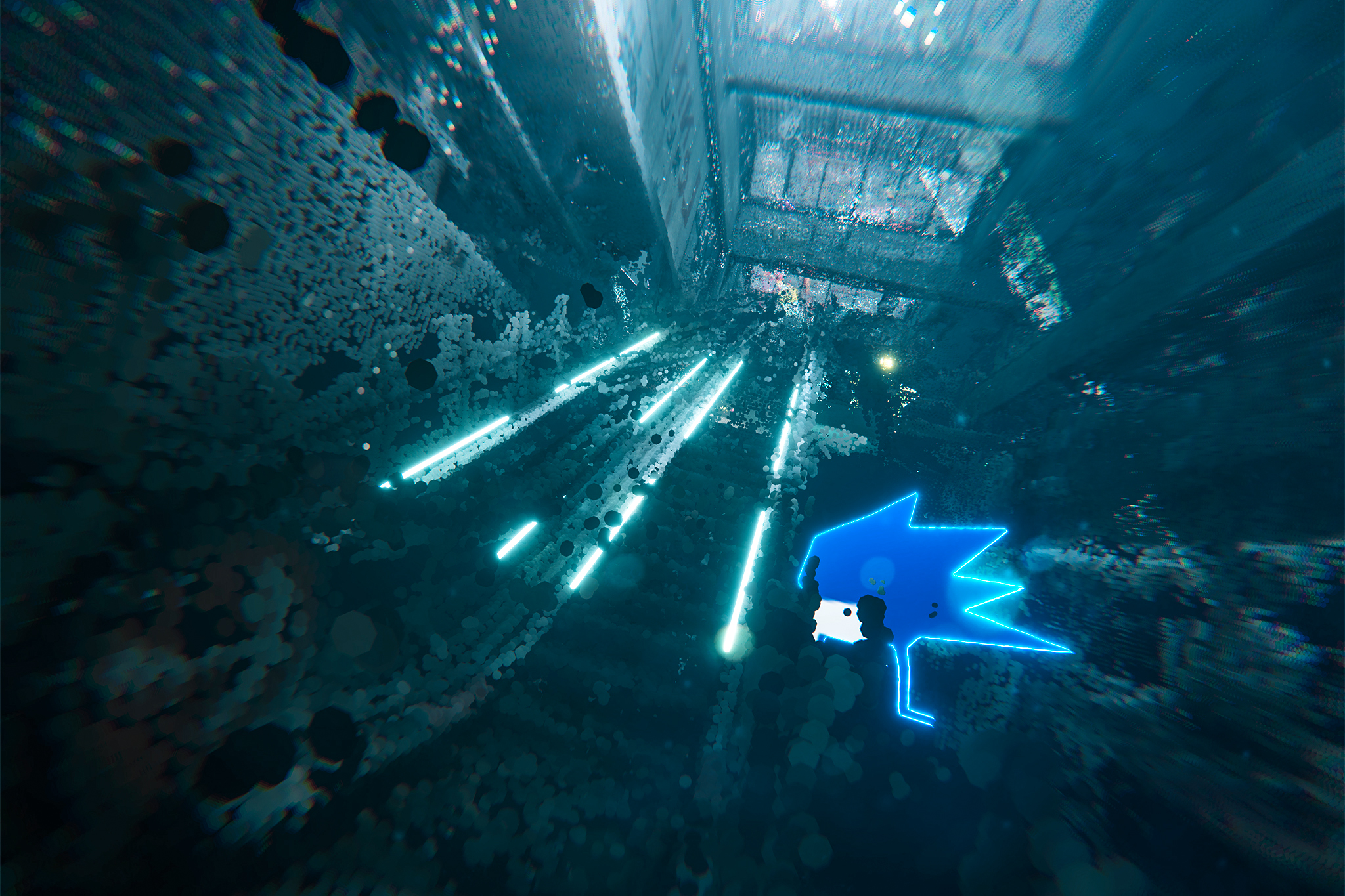

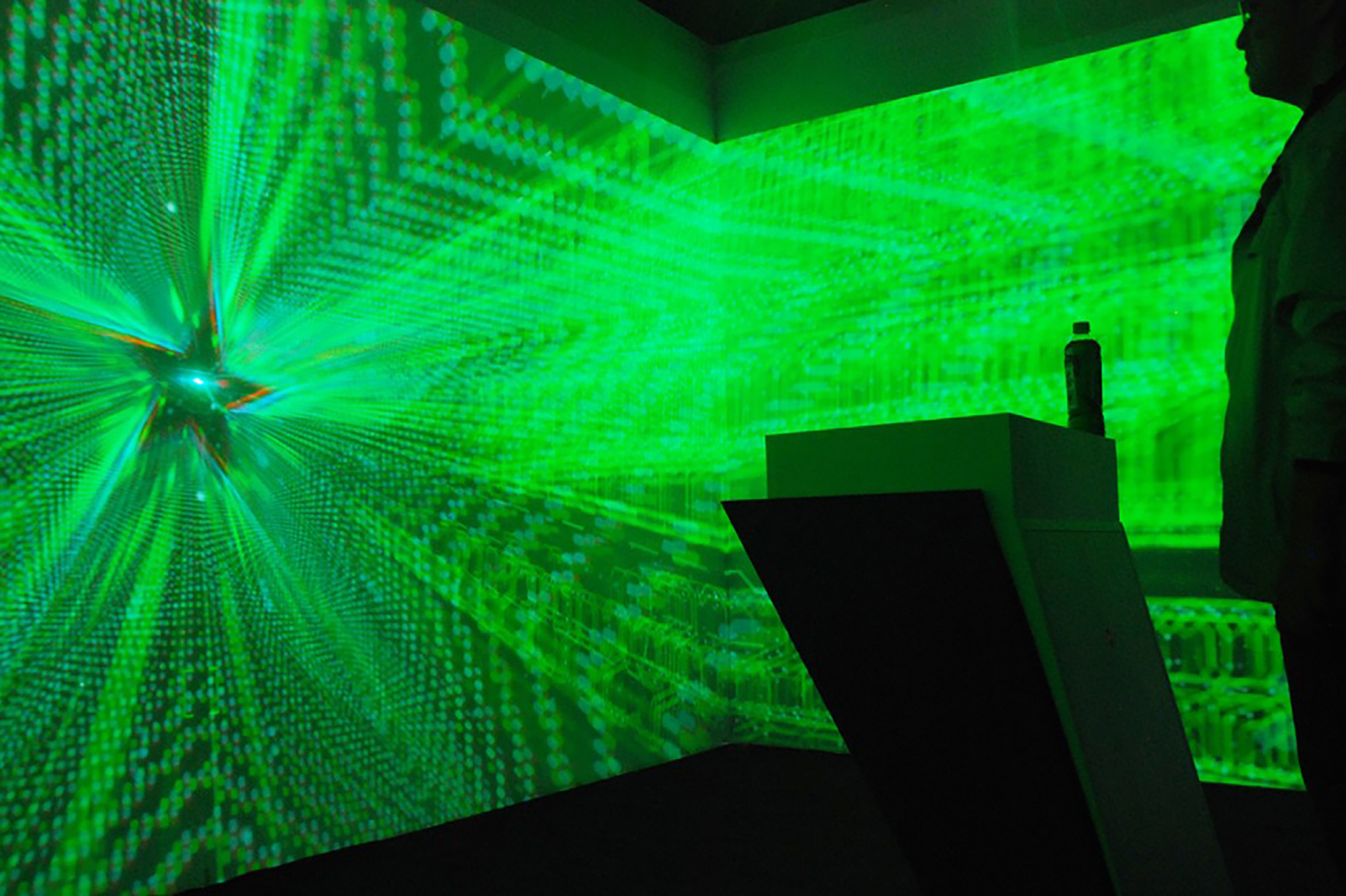

2020 DigiWave - CeilToInt();

The team was fortunate to be invited to plan 2020 DigiWave CeilToInt(), a celebration focused on technology. We usually decline commissions to plan events, preferring to focus our energy on creation and production. However, this event set out to connect “technology” with “celebration,” and that tempted us to take on the project. The team has always paid close attention to technology in all its aspects, and this made us even more certain that the core momentum behind its continuous advancement is the imagination of the future. So we proposed the concept of “CeilToInt()” as a tribute to the creators and practitioners who have never hesitated to step into the future they imagined. We also hope to lead visitors through the wonderful moments of stepping into a sci-fi worldview.

Around this concept, we posed four future events as questions:

“The moment when we are unable to recognize a machine from a person”;

“The moment when we can arbitrarily define what a body is”;

“The moment when we cannot distinguish what is real and what is virtual”;

“The moment when we discover other civilizations in the universe.”

We assumed that each of these events or situations is brought about by technology. On that basis we invited many outstanding works to be exhibited, and we created a new work called “Clairvoyance,” which connects the entire exhibition space and is dedicated to everyone who is passionate about, and fantasizes about, the future.

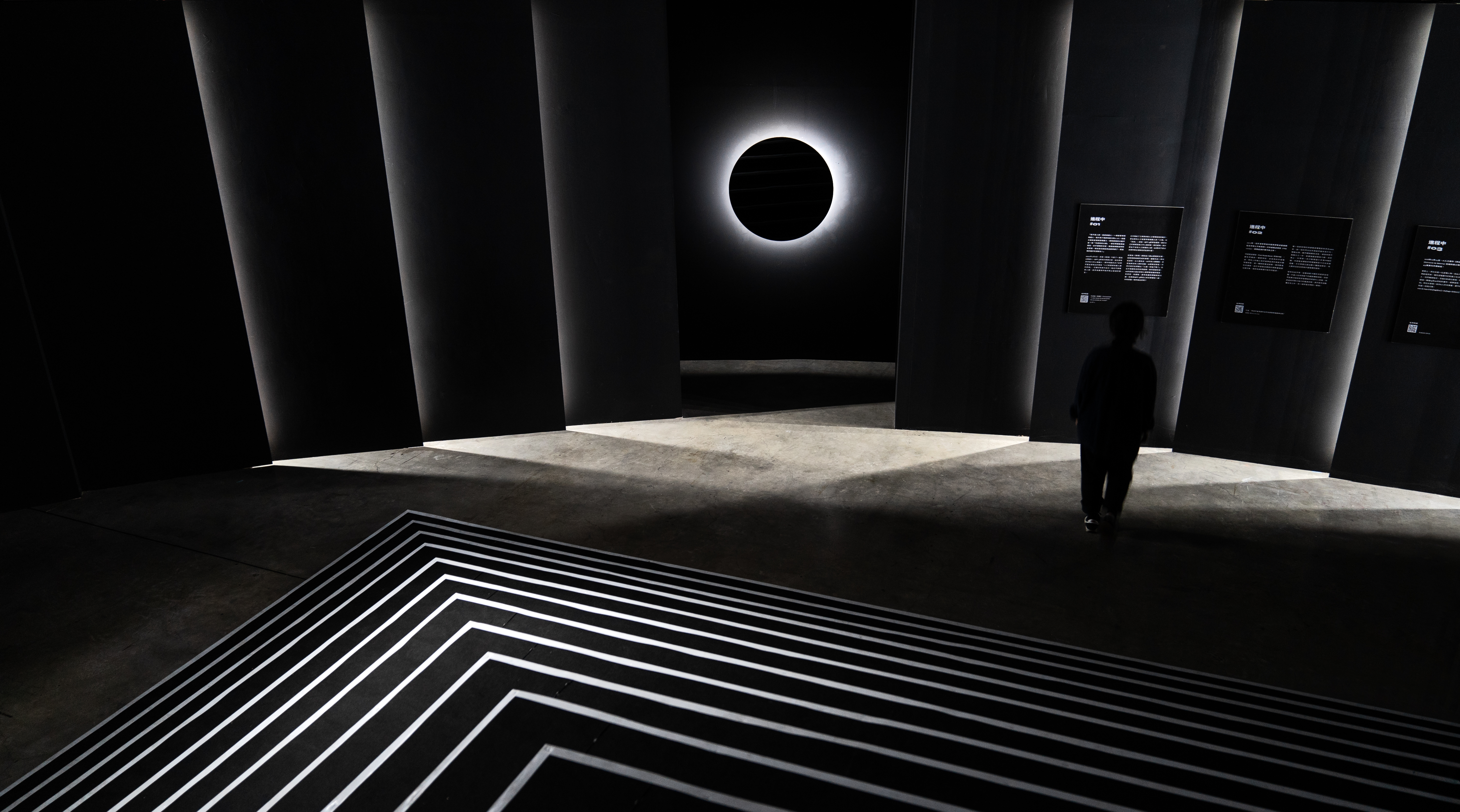

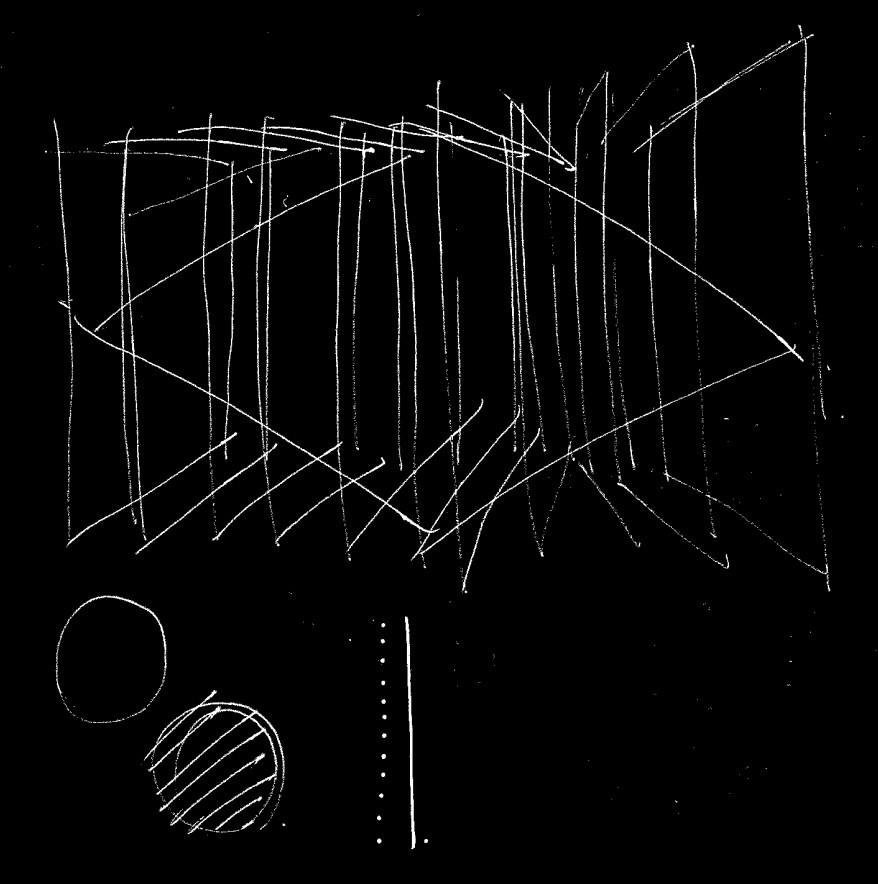

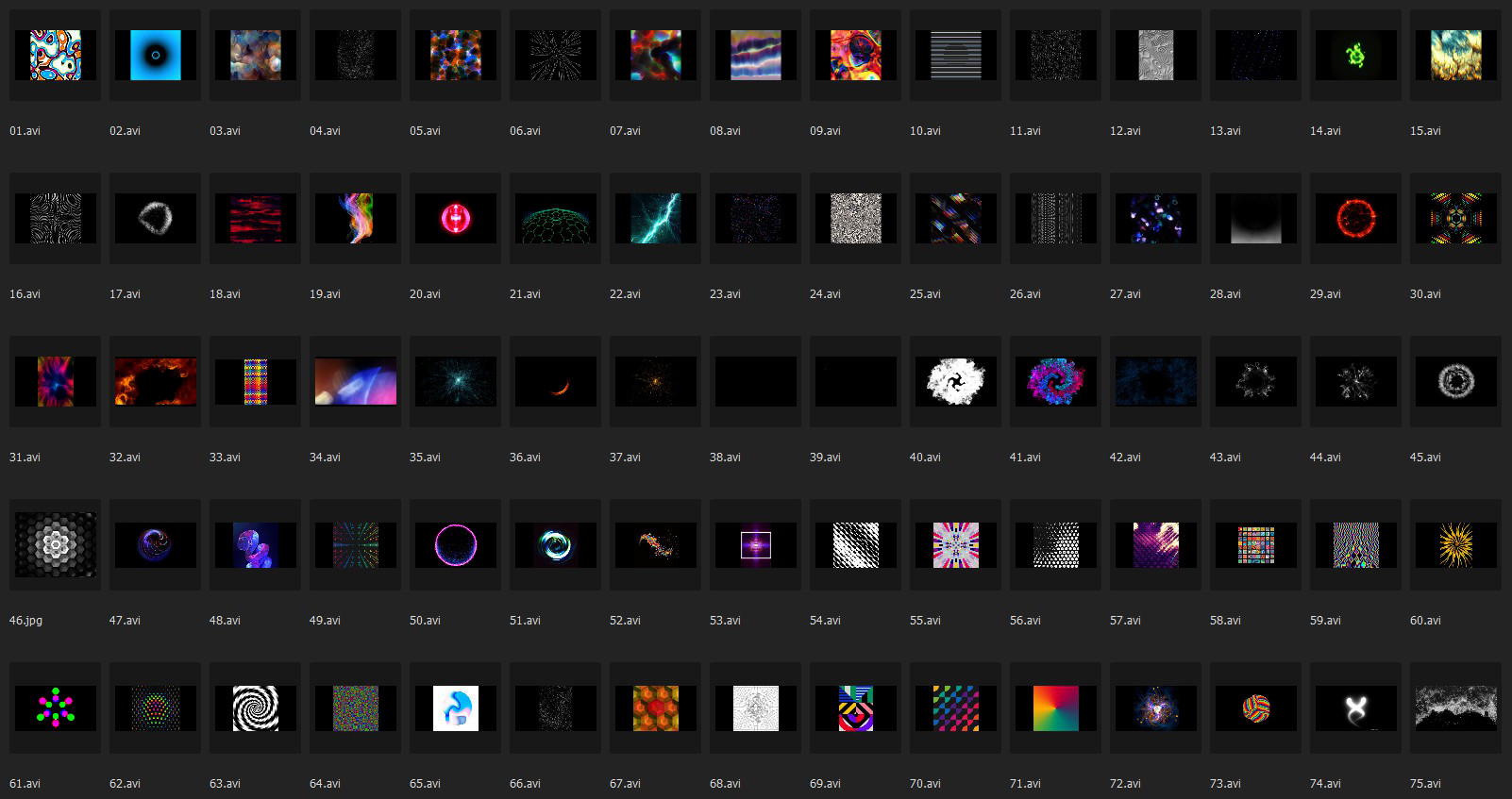

Clairvoyance

To match the atmosphere on site with our chosen theme, “Sci-Fi,” we created a work that pays tribute to all those who face the future without hesitation: Clairvoyance.

“Clairvoyance” is a term for a special phenomenon in which one can see a faraway place, or even something in a different space and time, beyond normal vision. We feel that this meaning aptly describes everyone who has an endless curiosity about the distant future and constantly pushes the world forward with imagination and technology. That is why we made it the theme of this gigantic video work.

Clairvoyance is composed of images on the wide walls and the extra-long floor. The far wall is the distant place we yearn to see, and the ground beneath our feet is the path we are traveling. By jumping rapidly in scale across different spaces, times, and even dimensions, the work pulls all of the viewers' perceptions into the experience of “rounding up,” as a tribute to the dreamers.

To define the spirit of this piece of work, we wrote a few words:

There is a box up front. There are no limits of time and space in the box; just let your mind drift. What appears is the other side, or the unsolved mystery, that you have never seen. Of course, there are also your own known past and unknown future. In the end, when the image needs to stop, which moment would you choose to stay in?

Space Design

After stepping through the entrance of “CeilToInt()” and passing through a dark corridor, visitors are met by a dark circle with no reflection at all. With nothing around it, the circle, which is as smooth as a mirror, floats in the center directly in front of the visitors. From time to time, records of major technological advances and events are displayed on its surface. This black circle symbolizes the “singularity” of technology. What it declares is this: from this step onward, the viewer is about to leap away from the present time and space and start exploring a sci-fi space that continuously rounds up.

After passing through the corridor of technological singularity, the first thing that the visitors see up ahead is a vast and huge interactive workpiece: Clairvoyance.

The moment visitors enter the exhibition site, they are detected by several infrared cameras set up on-site. At the same time, an image is projected onto the vast floor, blended from 16 projectors. Numerous glowing detection symbols quickly approach the visitors’ feet and surround them. This creates the bizarre experience of being detected and locked onto the instant one “logs in” to the exhibition site, which breaks the visitors’ expectations of an ordinary exhibition from the very beginning.
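As a rough illustration of the behavior described above, the following sketch moves each floor symbol toward its nearest tracked visitor and stops it on a ring around the feet. It is only a minimal sketch under stated assumptions: the function name `step_symbols`, the 2D floor coordinates, and the `speed` and `ring_radius` parameters are hypothetical and are not taken from the actual installation.

```python
import math


def step_symbols(symbols, visitors, speed, dt, ring_radius=0.5):
    """Advance every symbol one time step toward its nearest visitor.

    symbols, visitors: lists of (x, y) floor positions (hypothetical units).
    speed: symbol speed per second; dt: time step in seconds.
    ring_radius: distance from the visitor at which a symbol stops,
    so that symbols end up surrounding the feet instead of piling on them.
    """
    if not visitors:
        # Nobody is being tracked, so the symbols stay where they are.
        return list(symbols)

    max_step = speed * dt
    moved = []
    for sx, sy in symbols:
        # Pick the closest tracked visitor as this symbol's target.
        vx, vy = min(visitors, key=lambda v: math.hypot(v[0] - sx, v[1] - sy))
        dx, dy = vx - sx, vy - sy
        dist = math.hypot(dx, dy)
        remaining = dist - ring_radius
        if remaining <= 0.0:
            # Already on or inside the ring: hold position.
            moved.append((sx, sy))
            continue
        step = min(max_step, remaining)
        moved.append((sx + dx / dist * step, sy + dy / dist * step))
    return moved
```

Calling this once per frame with the latest tracked positions would make the symbols converge on each visitor and settle at `ring_radius` from them.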

Exhibitors

Through the spatial planning co-created with Atelier Let’s for this exhibition, we hoped to push the structure and experience of Clairvoyance to the extreme. For this reason, CeilToInt() breaks with the most common exhibition layouts: the huge main path in the center of the exhibition site is left empty so that Clairvoyance can deliver its immersive experience. We used huge steel plates to enclose four major areas around the exhibition, one for each of the four future event concepts, and invited outstanding artworks and important items from the history of technological development, from home and abroad, into each of them.

The moment when we are unable to recognize a machine from a person

For “The moment when we are unable to recognize a machine from a person,” we invited the popular Japanese virtual model “imma” and the artist Zan-Lun Huang, who has been creating works on the subject of “The Mixture/Hybrid of Biology and Machine” for many years. His artwork “The Coin Operated Rocking Horse” is a metaphor for the huge destructive power of man-made objects.

The moment when we can arbitrarily define what a body is

“The moment when we can arbitrarily define what a body is” is a metaphor stating that when consciousness is the thing-in-itself, clothing and musical instruments can be regarded as extensions of the body. This area gathered together Bisiugroup’s self-made experimental musical instrument “Super Power Rocket,” as well as “The Cloud-Making Factory,” which is clothing designer APUJAN’s fantasy about fashion.

The moment when we cannot distinguish what is real and what is virtual

For “The moment when we cannot distinguish what is real and what is virtual,” Brogent Global Inc. brought its immersive motion-sensing theater “XR Cinema,” breaking through the time and space limitations of the exhibition area with VR equipment.

The moment when we discover other civilizations in the universe

“The moment when we discover other civilizations in the universe” is a distant reflection on the romance of space exploration. The work “The Voyager Golden Record” in this area contains the recordings of various sounds and images representing the culture and life on Earth that NASA sent into space in 1977. “Escape to Earth: 100 Ways of Surviving on Earth,” by artist Teng-Yuan Chang, is an animation installation whose 100 segments serve as a guide to surviving on Earth, while “Core Sample,” by the cross-disciplinary art team OVERGROWN, acquires an ecological sample from the future and explores the potential energy that it generates.

With circulation planned in this way, visitors develop their own flow through the site. On the way to the four exhibition areas, they inevitably pass through the central Clairvoyance again, and with every step they trace a more flexible figure-eight route. This openly invites visitors to stop and witness the depth of technology in images, art, space, and even experience.

Surroundings

For a special exhibition open to the public, plunging straight into an in-depth discussion of sci-fi might feel somewhat remote to general visitors. In fact, people often come into contact with such themes through media such as film, television, books, and comics. Therefore, we cooperated with the Conservation Comics Shop “Booking” and filled the site with Sleepy Tofu mattresses to create a “Chill Study Room” full of sci-fi classics, where everyone can sit or lie down and enjoy the sci-fi imagination in the most comfortable position. At intervals, the lights in the study room dim and sci-fi movies carefully selected by Giloo are played. Each movie is followed by a post-screening forum, so that the audience can absorb the sci-fi imagination of various cultures through different types of media.

2020 DigiWave | CeilToInt();

Adviser: Kaohsiung City Government, Industrial Development Bureau

Organizer: Economic Development Bureau, Kaohsiung City Government

Executive Organizer: Taiwan Academia Industry Consortium, Ultra Combos

Co-Organizer: FIRE ON MUSIC, LA RUE Cultural and Creative

Projector Sponsor : EPSON

Exhibitors : APUJAN, Amazing Show - Gobo, BROGENTGLOBAL, Chang Teng-Yuan, Huang Zan Lun, imma, OVERGROWN Group, Ultra Combos

Speaker : Chien-Hung Huang, Commute For Me, Godkidlla, Pi-Gang Luan, Ruru Shih, TENG Chao-Ming

Performer : Bisiugroup AmazingShow, Elephant Gym, Fire EX., Kid King, Sorry Youth, The Fur.

Sponsors : BOOKING, Giloo, Gramovox, Sleepy Tofu, UGLY HALF BEER

Curator : Jay Tseng

Project Manager : Tim Chen

Marketing & PR Coordinator : Ichiu Chao, Elma Wu

Copywriting Coordinator : Jay Tseng

Art Director : Ting-An Ho

Visual Design : A Step Studio, ACnD, Chianning Cao, Chia-Yun Song, MS YU

Spatial Design : Atelier Let's - Ta-Chi Ku, Yi-Shan Hsieh

Spatial Construction : U.U DESIGN - Ya-Pei Chang, Chun-Chih Yang

Exhibition Planning, Copywriting and Editing : Jay Tseng, Chianning Cao, Stella Tsai, Chia-Yun Song

Curator Personnel : Isa Sung, Myling Ho, Wanting Chi

Administration & Operation : Joyce Huang

Exhibition Executor Team : Chianning Cao, Myling Ho, Isa Sung, Chia-Yun Song, Chia-Wei Lin, Cheng-Fang Hsieh, Olivia Yu, Nancy Wang, Sunny Tseng, Annie Chen, Nina Chen

Technology Director : Herry Chang, Alex Lu

Programming : Herry Chang

Hardware Engineering : Rayme Solution, FEBLOW PRODUCTION, XiaoXiao Laser

Exhibition Photographer : Yi-Hsien Lee, Guei-Jhen Yan, Lilly Hsu

Exhibition Videographer : M.Synchrony, Ting-Yi Chuang

Special Thanks : Chiu ChengHan, Jia-Lun Chang, William Liu, Wayne Wang, Prolong Lai, Hung-Pei-Shan, Patty Lu, Miao Lu, Syu Fang-Jing

Clairvoyance

Producer : Ke Jyun Wu

Art Director : Ting-An Ho

Motion Graphics : Ting-An Ho, Ke Jyun Wu, 1000 Cheng, Mal Liu

Interaction System Design, Execution : @chwan1

Programming : Chia Reng Tsai

Sound Design : Zhen-Yang Huang (Triodust)

Strings : Chun-Yu Lung (Joy Music)

Project Type: Communication

Category: Exhibition, Event

Client: Ministry of Economic Affairs, Hsinchu City Government

Year: 2020

Location/Venue: HSINCHU / Hsinchu Municipal Stadium

#AR

#AI #YOLO

#AirBox #GFS

#Installation

Taiwan Design Expo - The Terminal

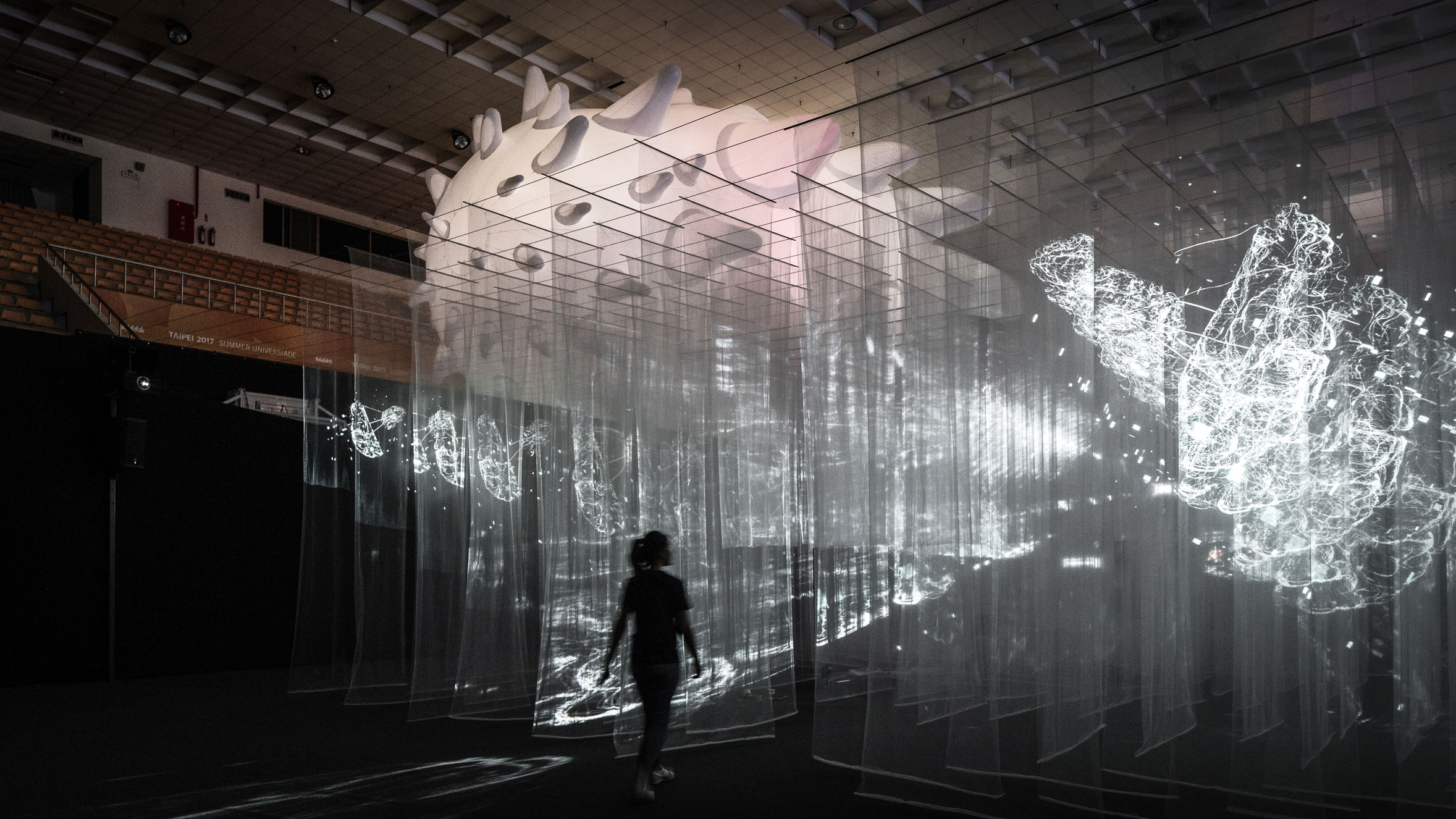

Located at the Hsinchu Municipal Stadium, “The Terminal” is the main venue of the Taiwan Design Expo 2020. We were invited by BIAS Architects & Associates to co-plan this venue. As the name suggests, we take a digital perspective to dig deep into the intricate relationships between people, cities, technology, and design, and to help visitors understand the invisible wisdom of the city.

Virtual City Hidden within the Stadium

We started by researching and investigating this concept with the Hsinchu City Government, and along the way we consulted many people who are behind-the-scenes driving forces on related issues. We compiled a series of documents to reconstruct how this theme looks at this moment, both in theory and in the current situation. By deconstructing and restructuring this information into a space and content with a point of view, we lead the audience to see and feel with their bodies how digital technology intervenes in and changes the appearance of society as a whole.

Spatially, we used the original structure of the stadium, with its upper and lower levels (spectator seating and athletic field), to break the exhibition content down, through a translation of form, into many segments within two large areas. Through the arrangement of visitor flow and spatial context, we reconnected and combined these segments into a real, existing digital world. Visitors follow the locations we have arranged to complete a journey into the virtual city.

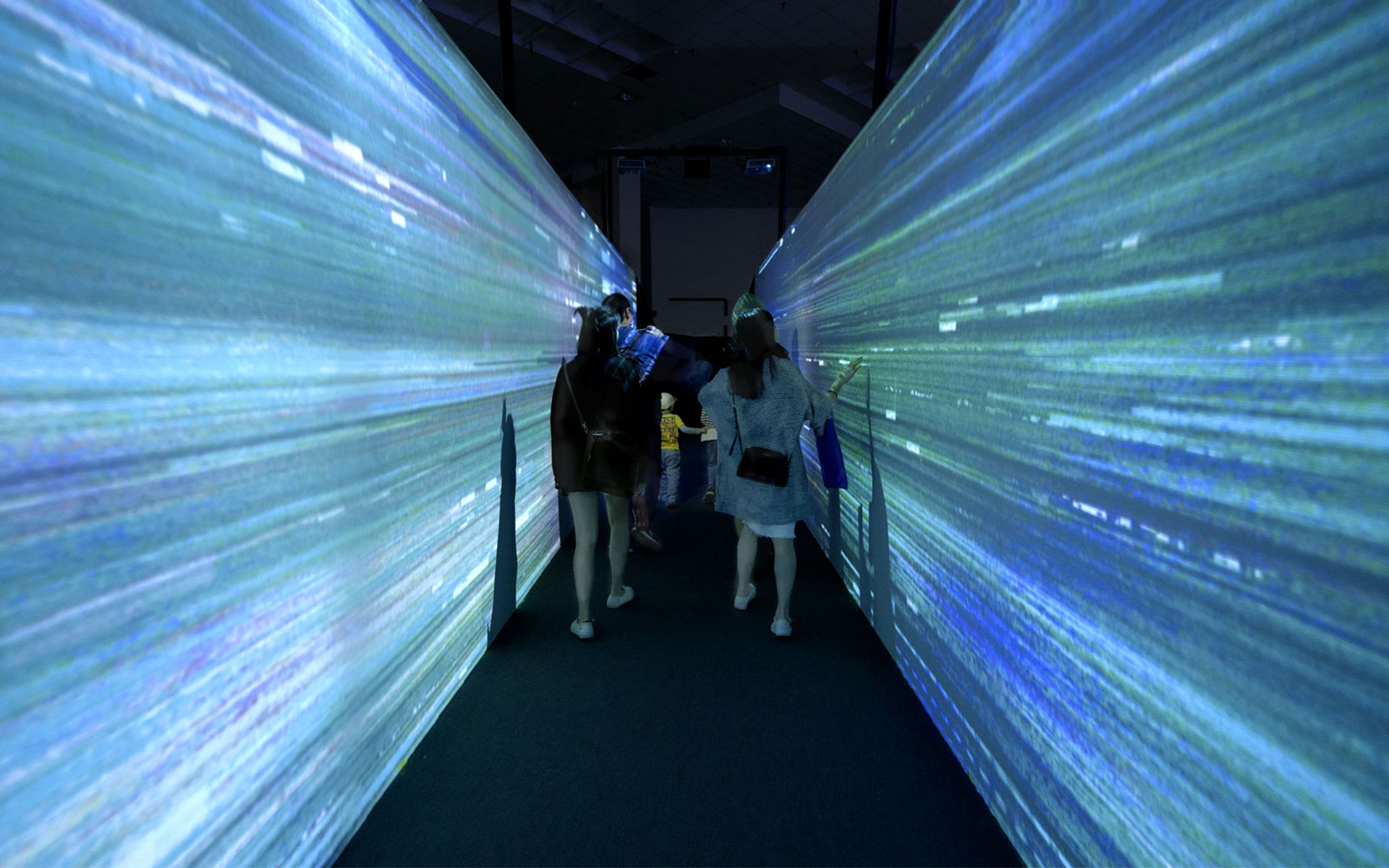

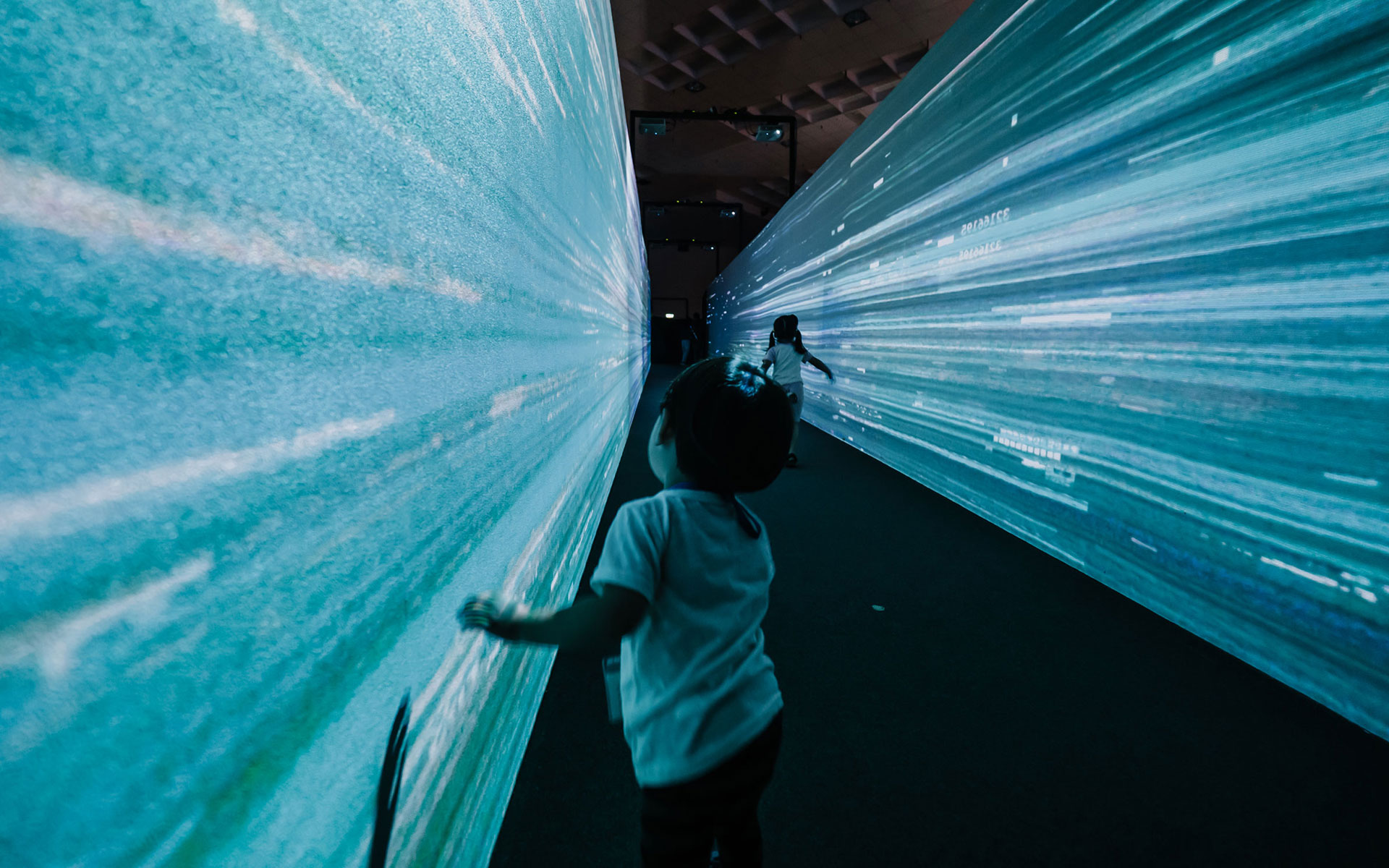

Electric Tunnel

This is the ramp leading into the spectator seating area, used as a corridor into the digital world. The long, straight, narrow, upward pathway, with large flowing images on both sides, leads the audience gradually forward along with the bits. An analogy is drawn between the audience and information packets: the audience becomes the “digital technology” itself, compressed and streamed into the digital world.

Portal to the Digital World

At the end of the ramp, a visual ritual is built, which virtualizes the body. Using the characteristics of a partial mirror, an interface with a superimposed switch between being virtual and being real is constructed. When the audience passes through, the body will instantly be deconstructed into a digital image. To describe this straightforwardly, this is the portal to the digital world.

Hsinchu Monster Incubator / Monitor

The Hsinchu Monster symbolizes the power that advances the entire city, while citizen participation is an important driving force in making the city smarter. Through design translation, the “collective intelligence that drives the city” is made abstract and becomes the “Heart of the Hsinchu Monster” located within the venue. The audience's choices in six major aspects of life are collected and, using AR technology along with lighting connections, are used as nutrients to feed the Heart of the Hsinchu Monster at the other end of the venue.

The Hsinchu Monster Monitor, set up at the side, displays the proportions of participants' attributes. This hints that the city is composed of and operated by people. Their joint participation in public issues, bringing together everyone’s strengths, promotes urban development, progress, and common prosperity.

Background Database for Life

Large spliced screens serve as the image carrier. Various distributions of information are organized and generalized on them, including visualizations of geographic information such as transportation, AI, and weather. The many faces of Hsinchu are displayed in a simulated situation room, where we can imagine how city managers watch over the city’s operation and assist in its progress.

Walk into Hsinchu Terminal - Data Forests

“A concrete terminal” is the spatial and conceptual core of the entire venue. The design attempts to create a huge mass that people can view from a distance or walk into. Diamond gauzes with balanced transparency were selected and imported, then staggered and stacked to form a screen for projection. The content is the process of collecting, importing, sorting, analyzing, and applying visual data. The image builds up progressively in layers within the space, forming a light box in which information flows through three-dimensional space.

Taiwan Design Expo - The Terminal

Organizer : Ministry of Economic Affairs, Hsinchu City Government

Executive Organizer : Industrial Development Bureau, MOEA

Executive Team : Taiwan Design Research Institute, BIAS Architects & Associates, Grand view Culture & Art Foundation

Co-Organizer : Taiwan Power Company

Curator : Tammy Liu

Curator Team:BIAS Architects & Associates, Ultra Combos

Interactive Design:Ultra Combos

Project Manager : William Liu

Producer : Hoba Yang

Creative Director : Jay Tseng

Art Director : Glenn Huang

Technical Director : Hoba Yang

Project Assistant : Miao Lu

Electric Tunnel

Visual Production : Glenn Huang

Portal to the Digital World

Generative Art Designer : Hoba Yang, Tz Peng

Visual Production : Hauzhen Yen

Hsinchu Monster Incubator / Monitor

Programmer : Nate Wu

UI Designer : Lynn Chiang

UX Designer : Lynn Chiang, Nate Wu

Motion Designer : Kei Zheng, Hauzhen Yen

Lighting Design : TING HAO TSU LIGHTING DESIGN STUDIO, ACROPRO

Background Database for Life - Data Monitor

Programmer : Hoba Yang

Visual Production : Mal Liu, Glenn Huang

Collaborative Content : 2019 SP Option Studio GIA NCTU, Traffic Police Brigade. Hsinchu City Police Bureau, Hsinchu City Government Department of General Affairs, Information Management Section, PM2.5 Open Data Portal, National Oceanic and Atmospheric Administration

AI Video Analytics : ioNetworks INC.

Walk into Hsinchu Terminal - Data Forests

Visual Production : Glenn Huang, Hauzhen Yen, Ting-An Ho, Chris Lee

Music Design : Sincerely Music

Photographic Record : Yi-Hsien Lee and Associates YHLAA, Lambda Film

Hardware Testing : Herry Chang, Alex Lu, Chia-Wei Lin

Special Thanks : TonyQ Wang, Shuyang Lin, Fang-Jui Chang, Wei-An Zheng, Chi-Kai Lu, Shermy Liu

Project Type: Artwork

Category: Theatre

Client: Anarchy Dance Theatre

Year: 2019

Location: National Kaohsiung Center for the Arts (Weiwuying)

#Performing Art

#New Media Art

#Stage Design

#Smoke

#Generative Art

#Optical Flow

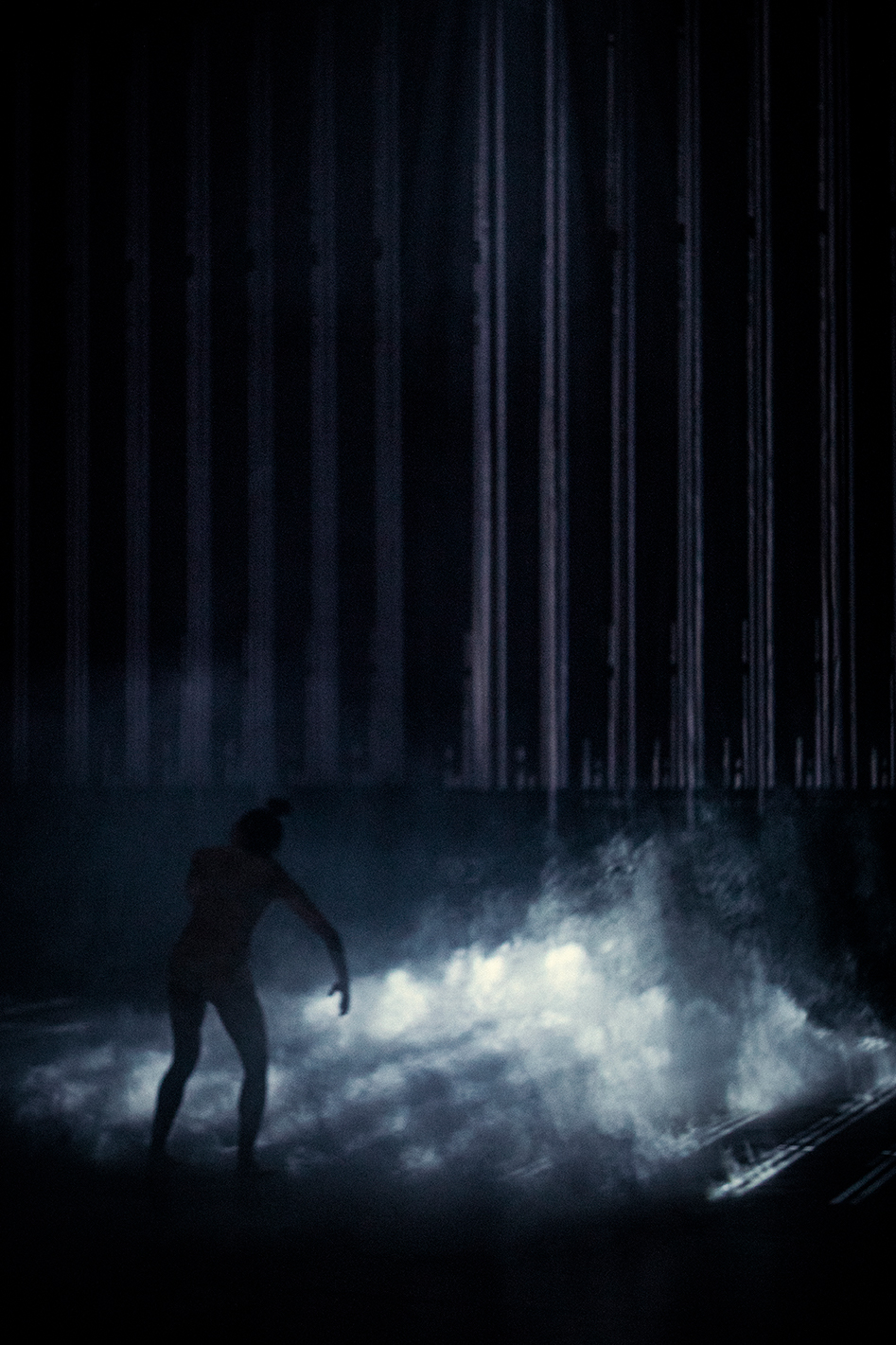

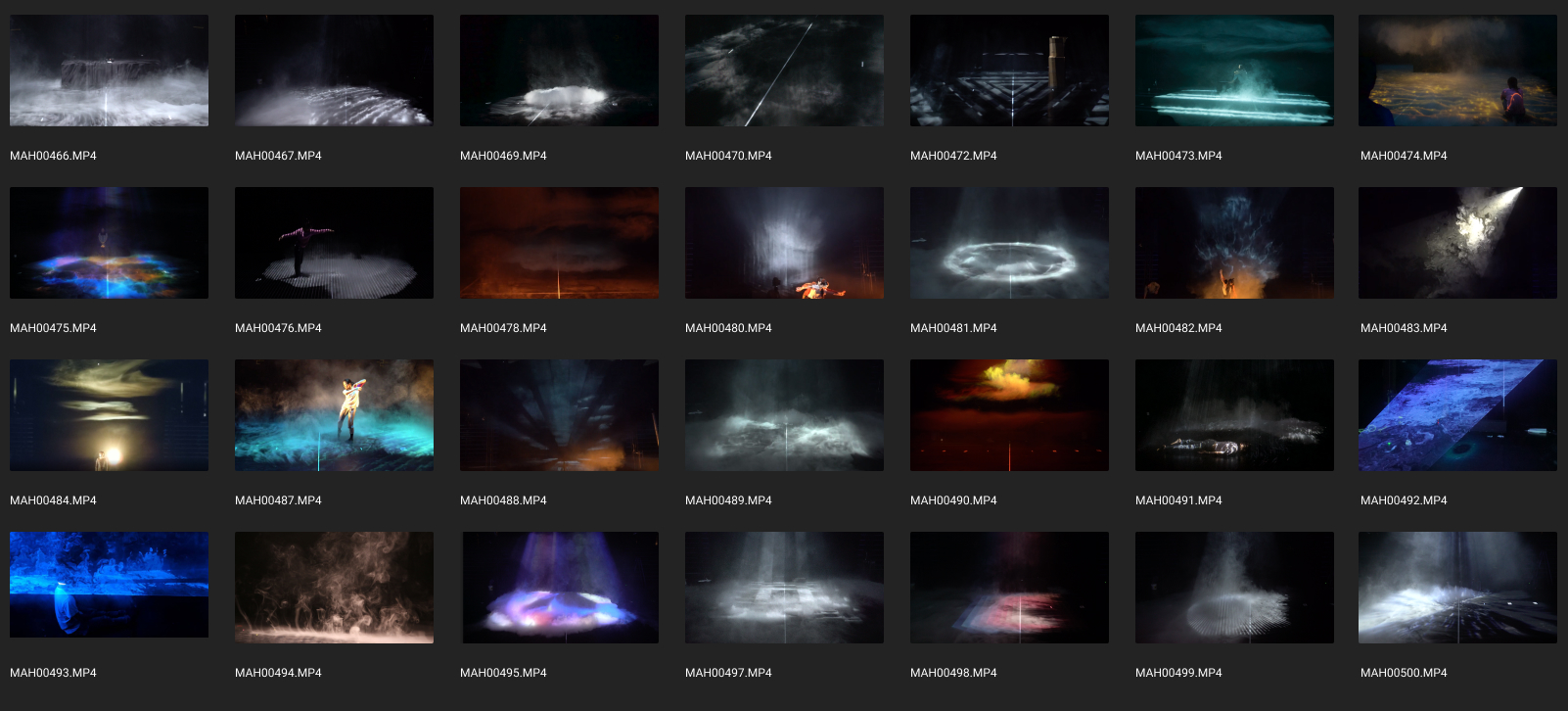

The Eternal Straight Line

This is the third work on which Anarchy Dance Theatre has collaborated with Ultra Combos.

As choreographer Jeff Hsieh wrote, “What’s the end of death? It is like a lake, where people always look to the bottom of the lake, trying to comfort the wandering souls in the spiritual realm, but forget that the human world is the spiritual realm. So, is the final wandering soul a living person or a spirit of the dead? How far can death go? It is also like a black hole, an eternity where light cannot enter, guarding the end of time. That is the place where the most inferior equality of humanity exists.

If one day, one rides the light of technology and crosses the border of death, then, over there, what kind of place will “life” be? Being born from death and being thrown into the fire and ashes. In the unnamed habitat where living creatures linger like smoke, the appearance of the existence of all things is reflected. After the fog clears, the lake also becomes clear, and life and death will then be redefined.”

This time we tried shaping forms out of smoke. Combining light, laser, projection, and sound with the dancers, we constructed a flowing scene that explores the proposition of life and death.

The Other Place – Visual Design

“The Other Place” is the original name of “The Eternal Straight Line.” “The Other Place” is a situation, while “The Eternal Straight Line” is a question posed to the audience. The concept of this work developed just as its name changed: the team started out from the situation and, through dialectic and reflection, ended up paring it back and heading toward a metaphysical question.

The development process can be sorted into three stages: “the process of physical (self) death,” “watching the process of death,” and “the meaning of death after the emergence of technology.” In the end, these also became the core vocabulary underlying the text and the visual development.

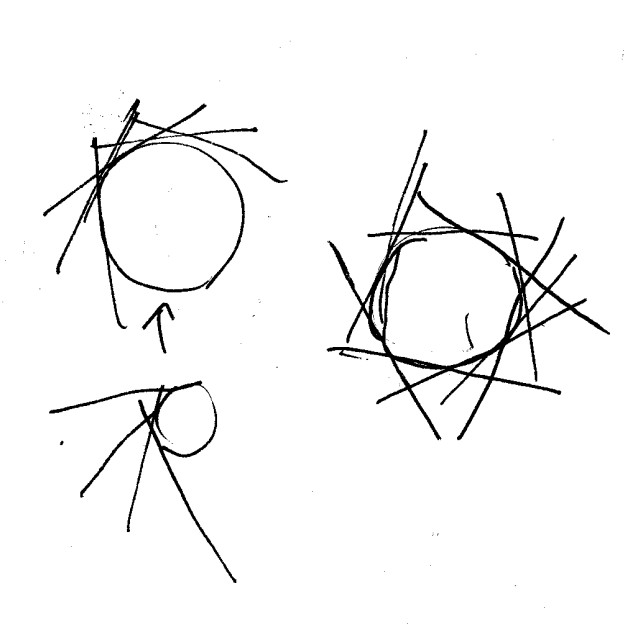

Another Dancer – “Smoke”

With the theme of life and death, the characteristic and image of having faintly uncertain movements made “smoke” the most suitable medium, and, thus, smoke naturally became “another dancer” on the stage.

- The first issue is “controlling the form and movement of smoke.”

Short as that sentence is, it is actually a task with quite a high barrier, because the variables that can change the smoke include the venue, humidity, temperature, temperature differences, and invisible convection. Shu-Yu devised a rigorous experimental process and achieved many forms of structured smoke control.

- The second issue is “how to use light to shape the various forms of smoke.”

Light offers many possibilities, and we tried as many kinds of light-generating media as possible, such as traditional lights, computer lights, projection, and laser. Each light source produces a completely different texture in combination with smoke, so there was no need to choose among them; each just needed to be placed in the section that fits the text.

- For the projection

Optical Flow

Generative Art

Building of Theater Worker-Oriented System

In the past, when we collaborated with theater workers, each side processed content on its own system in parallel. After understanding the concepts (cue, fixture, patch, and channel) and operation (interface arrangement, control logic, and hardware operation) of the lighting system, we realized that integrating our system into the lighting control platform grandMA2 was feasible and would bring significant benefits.

After a series of evaluations and implementations, the team successfully linked Unity and grandMA2 by using the OLA (Open Lighting Architecture) framework to convert Art-Net signals to OSC signals. Once Unity receives an OSC signal, the signal can be bound to any internal parameter. We then discussed suitable binding methods with the lighting control personnel according to their needs.

Under this framework, the logic of controlling the projection visual is consistent with that of computer lights and smoke. The lighting control personnel and stage managers can use the same system to complete all adjustments, scheduling, and performances.
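To make the signal path more concrete, here is a minimal sketch of what converting an Art-Net DMX packet into OSC messages involves. It is not OLA's implementation and not the production setup; it is a hand-written illustration using only the Python standard library. The OSC address scheme `/dmx/<universe>/<channel>` and the function names are assumptions made for this example.

```python
import struct

ARTNET_HEADER = b"Art-Net\x00"  # fixed 8-byte packet ID
OP_DMX = 0x5000                 # ArtDmx opcode (little-endian on the wire)


def parse_artdmx(packet):
    """Return (universe, dmx_bytes) for an ArtDmx packet, else None."""
    if len(packet) < 18 or packet[:8] != ARTNET_HEADER:
        return None
    (opcode,) = struct.unpack_from("<H", packet, 8)
    if opcode != OP_DMX:
        return None
    sub_uni, net = packet[14], packet[15]
    (length,) = struct.unpack_from(">H", packet, 16)  # big-endian data length
    universe = (net << 8) | sub_uni
    return universe, packet[18:18 + length]


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address, value):
    """Build an OSC message carrying a single float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def artdmx_to_osc(packet, prefix="/dmx"):
    """Turn one ArtDmx packet into one OSC message per DMX channel.

    Channel levels (0-255) are normalized to 0.0-1.0. The address
    layout prefix/universe/channel is hypothetical.
    """
    parsed = parse_artdmx(packet)
    if parsed is None:
        return []
    universe, data = parsed
    return [
        osc_message(f"{prefix}/{universe}/{channel + 1}", level / 255.0)
        for channel, level in enumerate(data)
    ]
```

On the receiving side, each such address could be bound to a parameter of the visuals, so that a fader on the lighting console drives it in the same way it drives a fixture channel.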

Creative and Production Team

Production:Anarchy Dance Theatre

Director / Choreographer:Jeff Chieh-Hua Hsieh

Production Consultant:Jessie Lin, PROJECT ZERO Performing Arts Management

Dance dramaturg:Xiang-jun Fan

Dancer:Shao-Ching Hung, Chun-Te Liu, Han-Hsing Kan, Hsiao-Tzu Tien, Kuan-Jou Chou, Viktorija Semakaitė

Technology Coordination / Visual Design:Ultra Combos

- Project Manager:Jay Tseng

- Project Producer:Wei-An Chen (@chwan1)

- Art Director:Lynn Chiang

- Generative Art Designer:Ke-Jyun Wu

- Visual Production:Hauzhen Yen, Ting-An Ho, Ayuan Deep

- Programmer:Ke-Jyun Wu, Nate Wu

- Technical Assistant:Herry Chang, Syu Fang-Jing, Chianning Cao, Alex Lu

Technical Coordination:We Do Group

- Lighting Design:Dazai Chen, Yi-Hsin Chen

- Technical Director:Jheng-kuan Lee

- Audio Technical Director:CHOU Wen-Ming

Cloud Design:Shu-Yu Lin

Cloud Design Assistant:Wei Huang, Yu-Liang Lin

Sound Design:Yannick Dauby

Sound Operation:Nigel Brown

Costume Design:JUBY CHIU

Stage Manager:Hsiang-Ting Teng

Production Coordinator:Hsiao-Fan Tai

International Programs Coordinator & Marketing Promotion:AxE Arts Management

Key Visual Design:Shiun-Huan Lee

Video Record:Chen Yishu, Zheng Jingru, Zhang Borui, Foufa Studio (Cai Bingxiao, Zheng Manzi, Wu Zhaochen)

PV:Foufa Studio, Ting-An Ho

List of thanks:Antari Yu'an Lighting Enterprise Co., Ltd., He Youying, Wu Hongyu, Li Jiaru, Li Tingyu, Du Yingying (Qianmo Institute), Director Lin Zhengxun (Taipei Hakka Music and Drama Center), Professor Lin Zhaoan, Lin Zheli, Lin Mi, Bingguan Co., Ltd., Zhang Shanting, Zhang Junhe, Zhang Zheji, Xu Shangyuan (Minwei Video Engineering Co., Ltd.), Xu Youwei, Chen Jialing, Chen Xi, Chen Yanrong, Peng Jiajie, Yang Yixuan, Wen Sini, Wan Yuren, Zhan Haoyu, Liao Yuanyu, Guan Yixiang, Spotlight Workshop, Xie Youcheng, Yan Yining, Luo Yishan

Adviser：Ministry of Culture

Creative support:National Kaohsiung Center for the Arts (Weiwuying), TAIWAN TOP Performing Arts Group

Venue Cooperation:Taipei Hakka Cultural Foundation, Taipei Art Sound Space Network, New Beitou 71 Park, Performance Arts School 36

Sponsors:ChinLin Foundation for Culture and Arts, Interplan Group

Makeup sponsor:Laura Mercier

Project Type: Entertainment, Artwork

Category: Event, Exhibition

Year: 2019

Venue: Kaohsiung Music Center

#AudioVisual

#GenerativeArt

#Installation

2019 DigiWave - Treasure

The team received an invitation to participate in the DigiWave event and to work together with musician Lim Giong to create an installation and a performance.

DigiWave is a digital-trend event about the development of digital industries in Kaohsiung City. “Digi” is the means, and “Wave” stands for trend, flow, and communication. Through DigiWave each year, we hope to ask a crucial question about an important issue in our lives. By communicating through technology and presenting through exhibitions and events, we hope to bring about the next wave in the progression of life.

This year, with the ocean as our theme, we introduced two exhibitions, two performances, and two themed markets. The series includes the themed exhibition of the same name, 《大海裡的迴旋踢》, which lets people experience the sea world of the future.

Concept

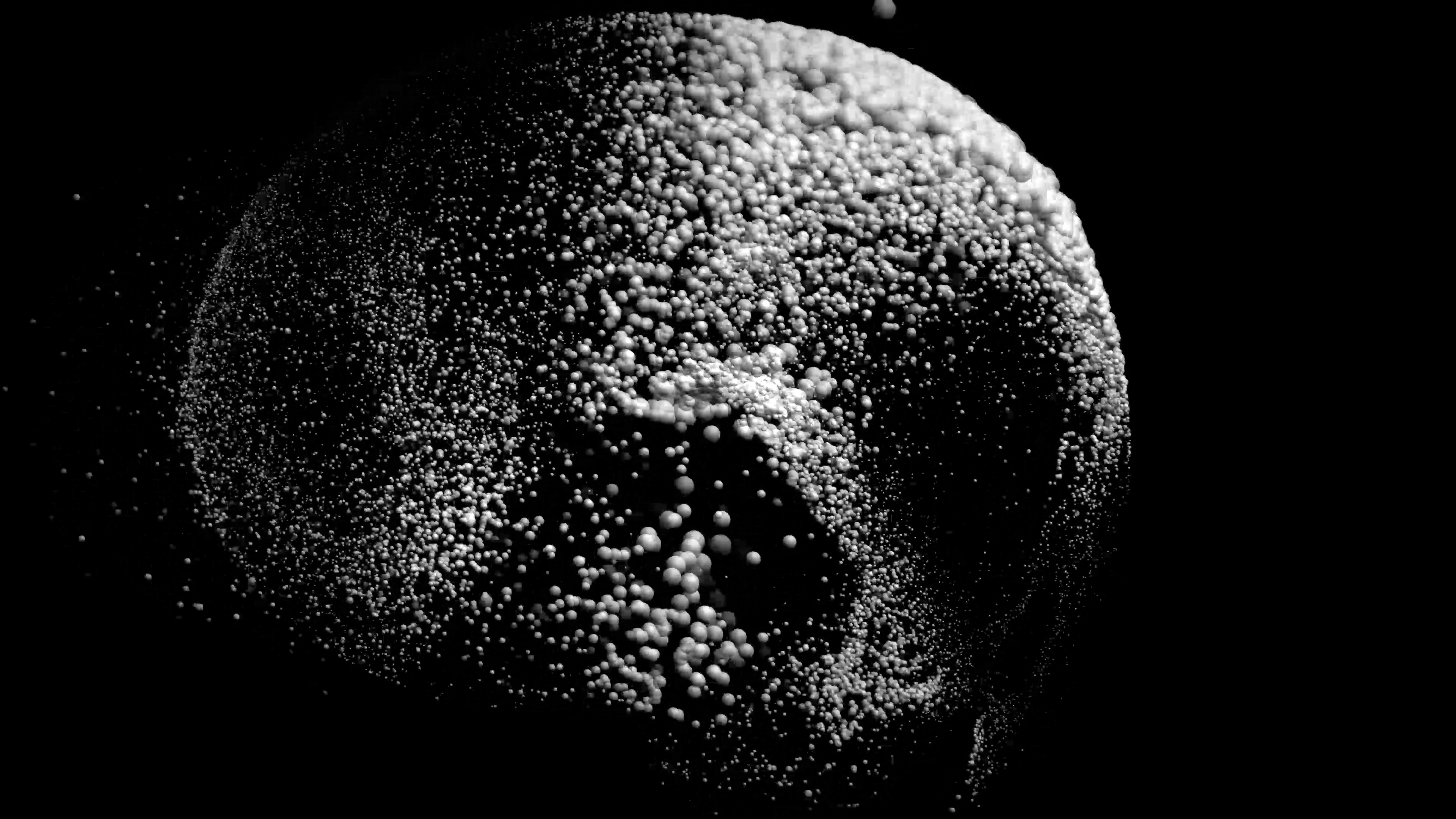

We see a beautiful seashell half buried in the sand at the beach.

We always pick it up instinctively, hold it to our ear, and, for some unknown reason, call what we hear the “Sound of the Sea.”

Shellfish are like sculptures made by God. From color and material to shape, they embody a pure yet extremely complex compositional aesthetic.

The “Treasure” project is based on deconstructing the beauty of shellfish. Forms taken from nature are re-translated into sounds and visuals, with new media as the medium.

2019 DigiWave - Treasure

Adviser: Kaohsiung City Government, Industrial Development Bureau

Organizer: Economic Development Bureau, Kaohsiung City Government

Implementer: Taiwan Academia Industry Consortium, Institute for Information Industry, 3080s Local Style

Curator: Chiu, Cheng-Han, Ruby Ting

Artwork by Ultra Combos

Live Audiovisual Performance

Producer: Jay Tseng

Generative Artist: Ke-Jyun Wu, VJ Youji

Technical Execution: Wei-An Chen (@chwan1)

Visual Jockey: VJ Youji

Composer / Sound Mixing: Lim Giong

Disc Jockey: Lim Giong

Hardware Integration: CDPA

Installation

Producer: Jay Tseng

Generative Artist: Ke-Jyun Wu, VJ Youji

Visual Editing: Ting-An Ho

Technical Execution: Wei-An Chen (@chwan1)

Installation Artist: Ta Chung Liu

Composer / Sound Mixing: Lim Giong

Project Type: Entertainment

Category: Exhibition, Event

Client: Marvel Studios│Beast Kingdom

Year: 2018

Location: Singapore ArtScience Museum

#Marvel

#Avengers

Marvel 10th Anniversary Exhibition

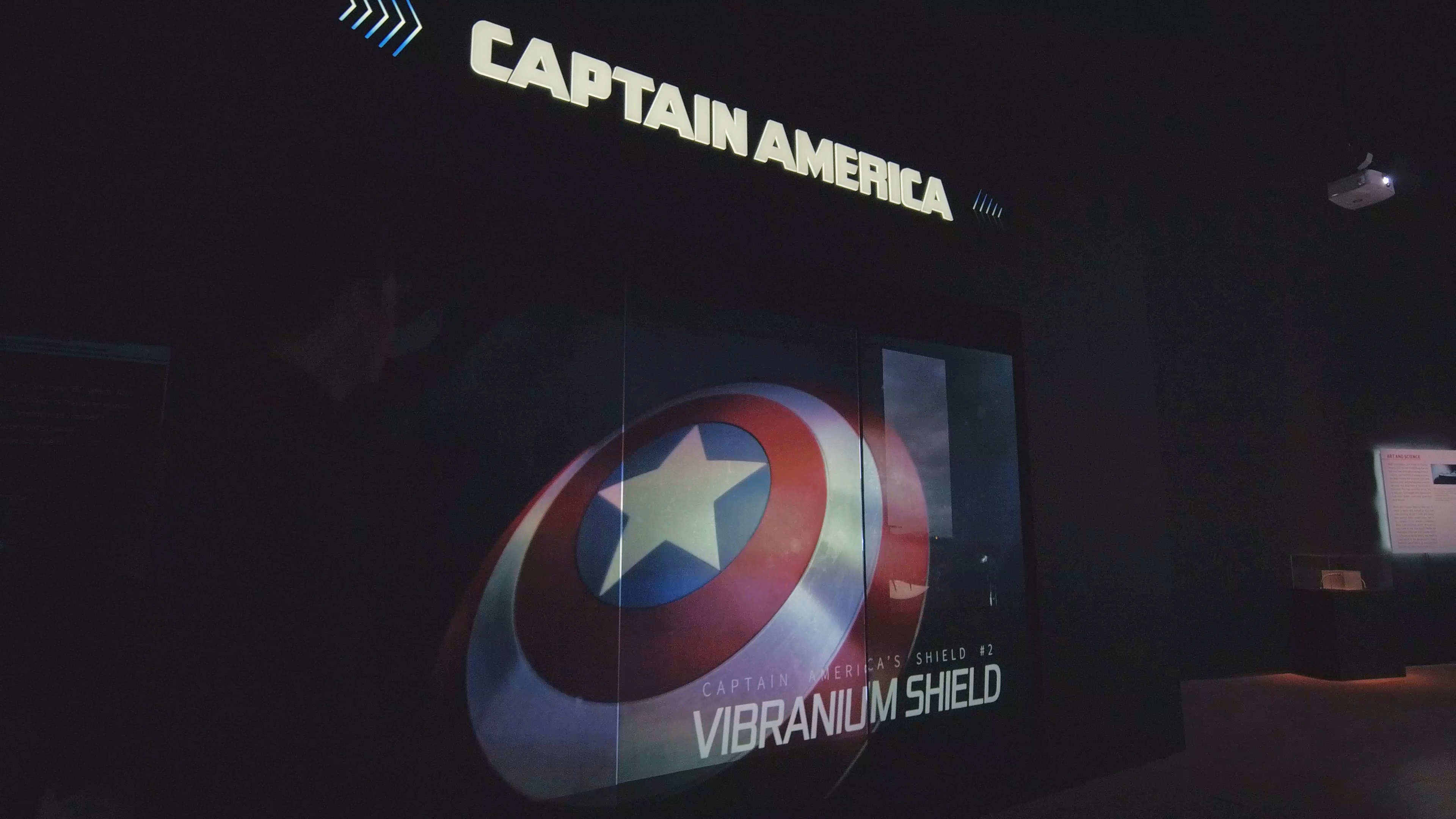

With its first movie, Iron Man, Marvel Studios opened up its movie universe. Over the past decade, many classic heroes have taken shape on the big screen, such as Captain America, Black Widow, The Hulk, Thor, Scarlet Witch, the Guardians of the Galaxy, and Ant-Man.

To celebrate the 10th anniversary of Marvel Studios, Ultra Combos was commissioned to help create the Marvel Studios: Ten Years of Heroes exhibition, which reproduces classic scenes from Marvel's vast universe. With the popular heroes standing in a line and an immersive spatial experience, the audience is guided through the first grand decade of Marvel Studios.

IRON MAN

CAPTAIN AMERICA

DOCTOR STRANGE

AVENGERS: INFINITY WAR

THEATRE

Marvel 10th Anniversary Exhibition

Curation:BEAST KINGDOM CO., LTD.

Producer:Herry Chang

Project Manager:Tim Chen

Creative Director:Tim Chen

Technical Director:Herry Chang

Programmer：Kyosuke Yuan, Hoba Yang, Nate Wu, Herry Chang, Yen-Peng Liao, Wei-Yu Chen

Art Director:Chris Lee

Concept & Storyboard:Chris Lee

UI/UX Design:Chris Lee

Visual Assistant:Jia Rong Tsai

Generative VFX (Dr. Strange / Ant-Man)：Hoba Yang, Herry Chang

Motion Design (Captain America / Helicarrier / Bifrost / Theatre):MUZiXlll

Character Rigging (Theatre):Yoyo Chang

Video Installation Solution:甘樂整合設計有限公司

Hardware Lead:Herry Chang

Hardware Integration:Prolong Lai

Hardware Engineer:Wei-Yu Chen

Sound Design:The Flow Sound Design

Director of Photography:Ray.C

Photography Assistant:Ya-Ping Chang

Project Type: Entertainment

Category: Event

Client: 3AQUA Entertainment

Year: 2019

Location: Taipei Arena

#Laser

#rollingshutter

#audiovisualization

#webscraping

#opticalflow

#stagesimulator

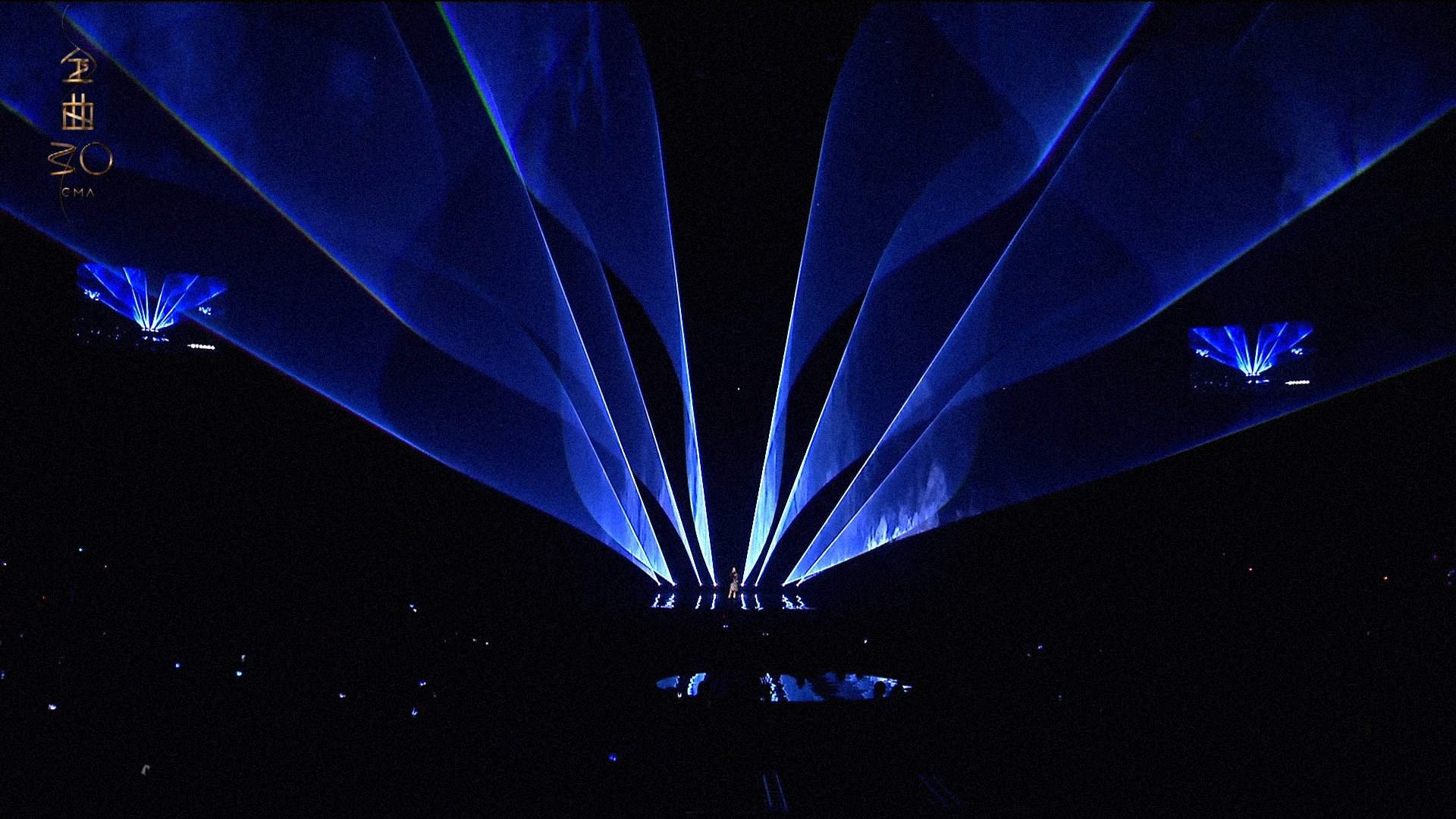

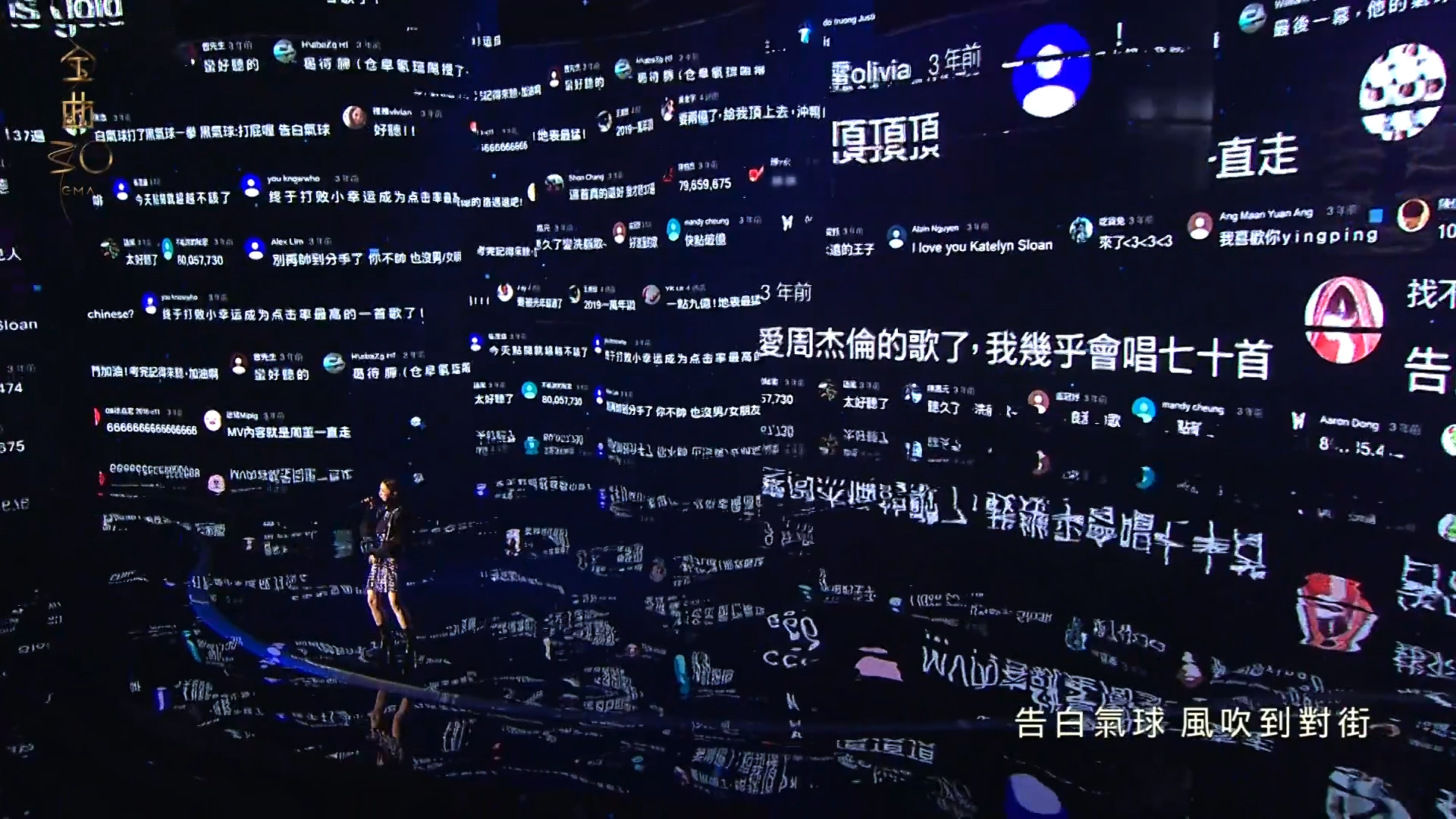

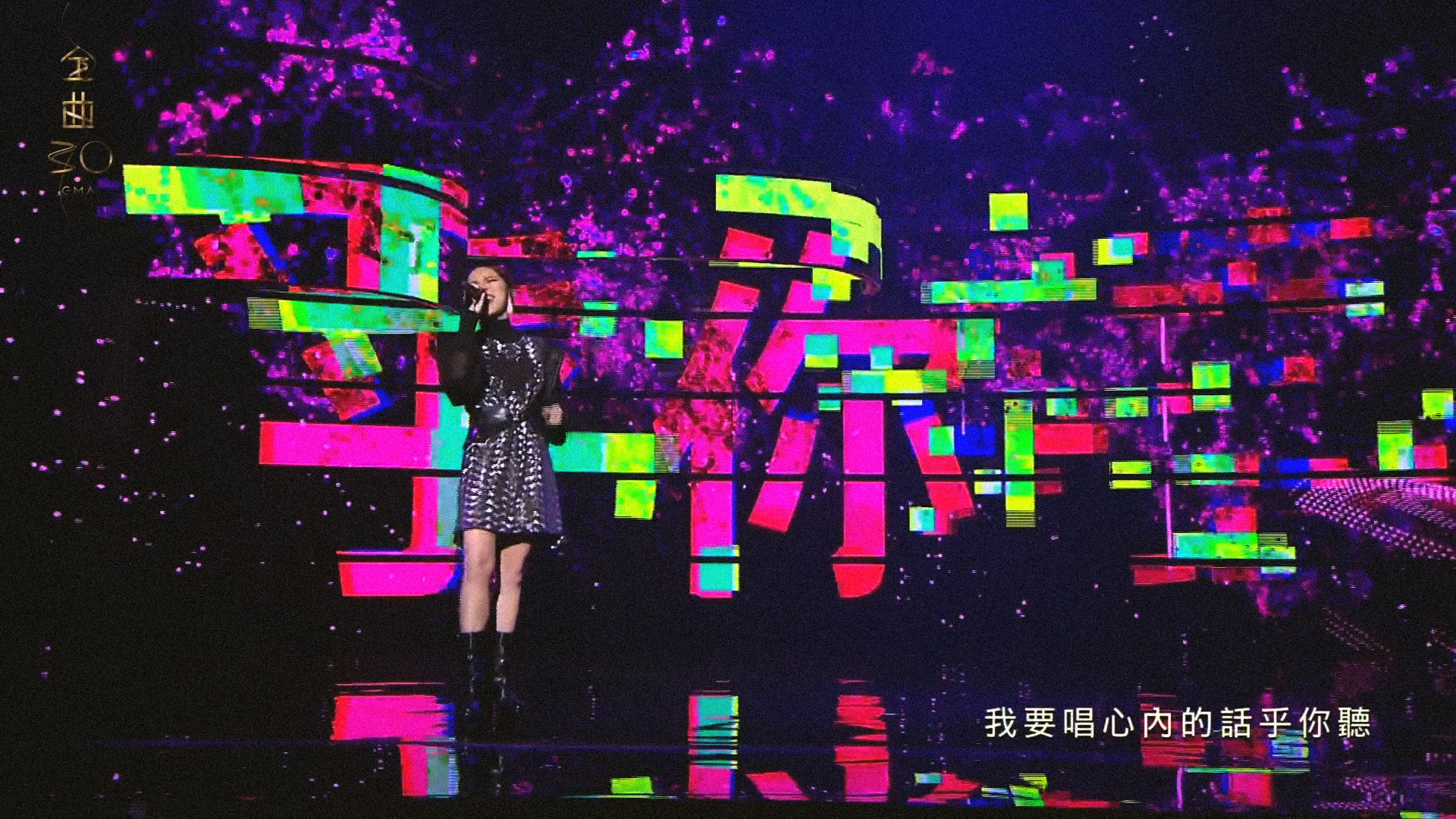

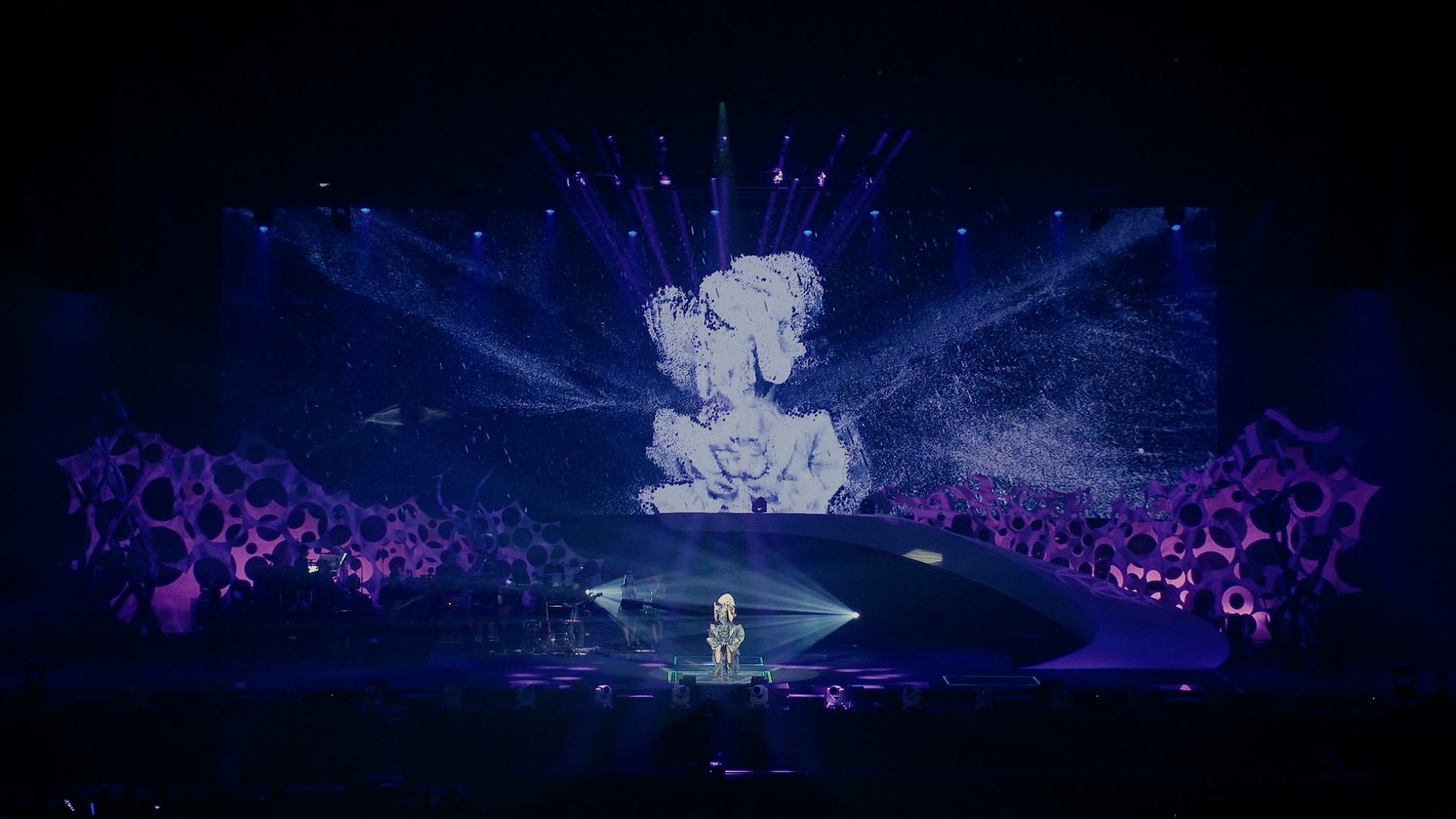

G.E.M. - 30th Golden Melody Awards

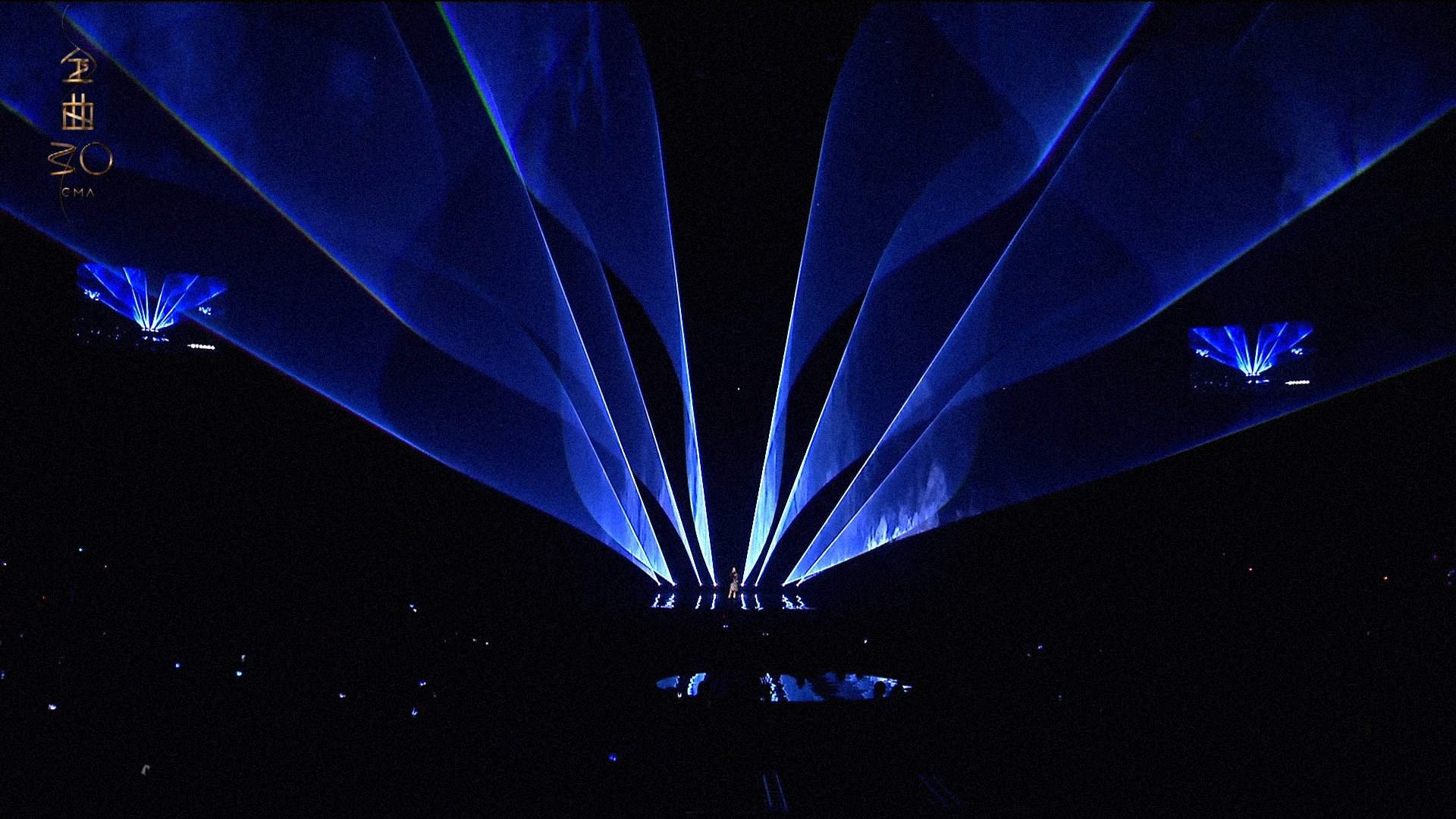

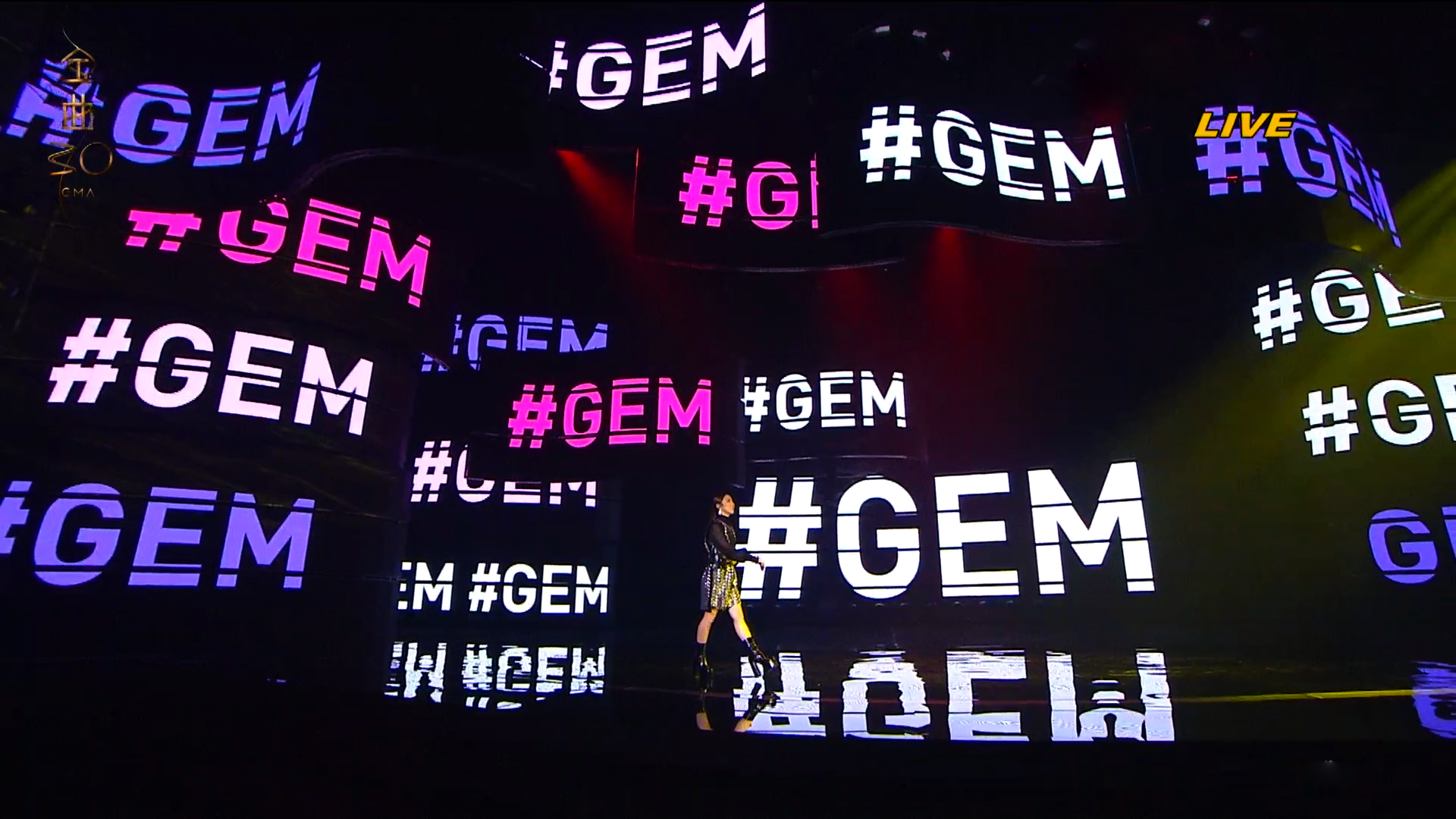

2019 marked the 30th anniversary of the Golden Melody Awards, and we were honored to be invited by 3AQUA Entertainment to take part in producing this year’s performance. They hoped the Ultra Combos team could bring some technological elements into the show.

The theme of the segment was “Streaming,” in which Chinese superstar G.E.M. performed the ten most-viewed Chinese music videos on YouTube at the time. With streaming as the theme, “Digital,” “Technology,” and “Internet” were the natural main imagery. The ten songs are quite diverse in musical style, and a large proportion of them are love songs. Arranging technology as the core form and style of such a performance required careful thought and delicate design.

The Golden Melody Awards (GMA) is a landmark “Ceremony,” and its nature is very different from that of a “Performance” or a “Concert.” There are many details and restrictions to be aware of, and the schedule is tight. Fortunately, through communication with Visual Director Guozuo Xu and close collaboration with 3AQUA, we not only received advice on the visual design of the performance but also kept room to play with technology.

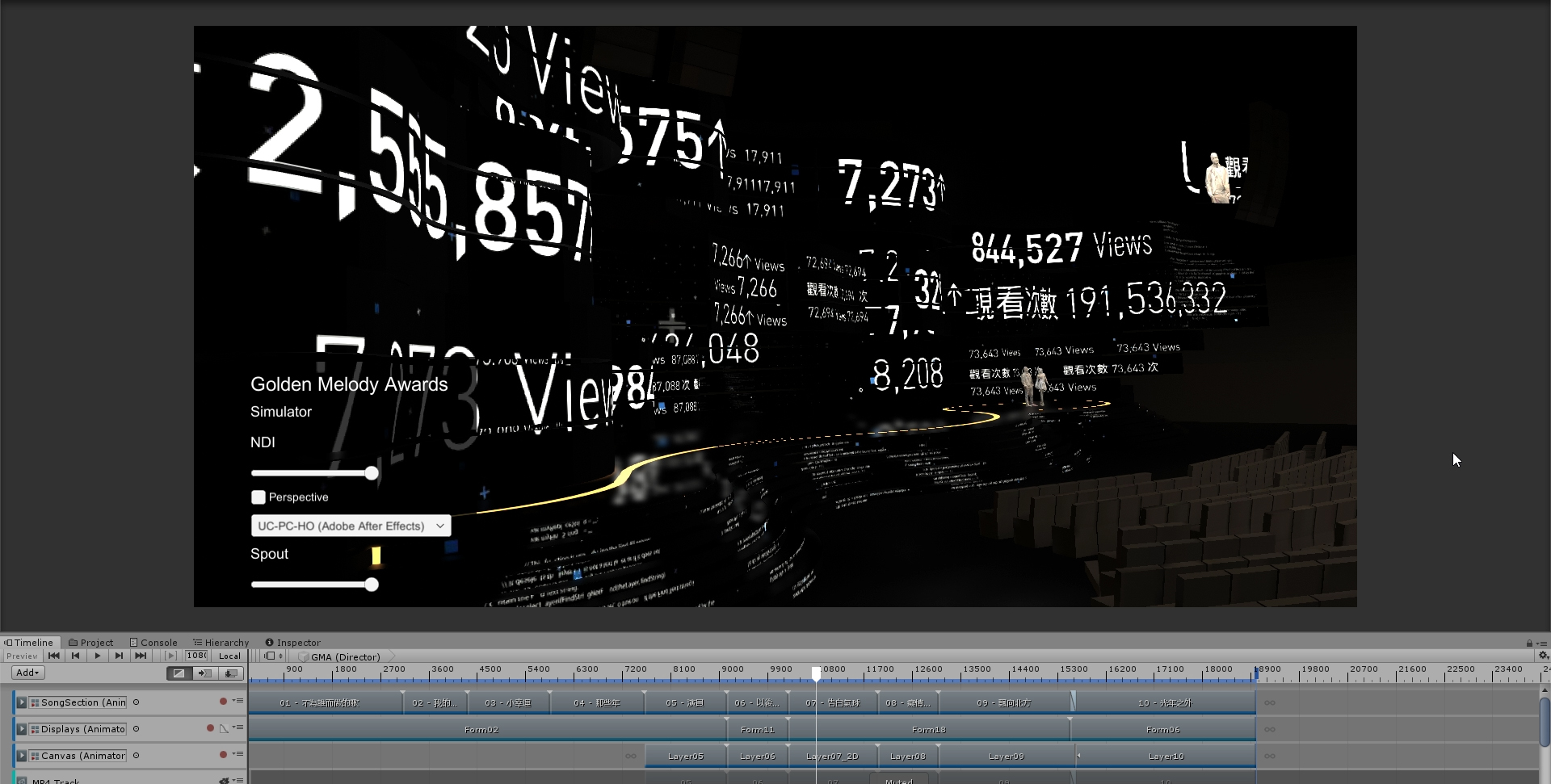

Before the project began, we hoped that the production process could be “what you see is what you get”

── building a tool that comes as close as possible to the live experience

The stage was themed around large wavy LED frames, whose irregular form made it hard for the production team to imagine how the visuals would look from different positions. In addition, the resolution was equivalent to seven Full HD screens, so any modification cost the visual team a lot of time. We therefore needed a way to cut down the number of back-and-forth adjustments.
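To give a sense of scale, the short Python calculation below works out the pixel count of a canvas equal to seven Full HD frames. The uncompressed 8-bit RGB format and the 30 fps frame rate are assumptions for illustration only, not figures from the production.

```python
# Pixel budget for a canvas equivalent to seven Full HD frames.
FULL_HD_WIDTH = 1920
FULL_HD_HEIGHT = 1080
SCREEN_COUNT = 7          # "seven Full HDs", as stated in the text

BYTES_PER_PIXEL = 3       # assumption: uncompressed 8-bit RGB
FRAMES_PER_SECOND = 30    # assumption: illustrative playback rate


def pixel_budget(screens: int = SCREEN_COUNT) -> dict:
    """Return pixel and uncompressed data-rate figures for the canvas."""
    pixels = FULL_HD_WIDTH * FULL_HD_HEIGHT * screens
    bytes_per_frame = pixels * BYTES_PER_PIXEL
    return {
        "pixels_per_frame": pixels,
        "megabytes_per_frame": bytes_per_frame / 1e6,
        "megabytes_per_second": bytes_per_frame * FRAMES_PER_SECOND / 1e6,
    }


if __name__ == "__main__":
    for name, value in pixel_budget().items():
        print(f"{name}: {value:,.1f}")
```

At roughly 14.5 million pixels per frame, every re-render is about seven times the work of a single Full HD clip, which is why each round of changes was costly.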

To that end, we designed a simulator in which content produced in After Effects is transmitted immediately into the 3D game engine Unity. There, much like playing a first-person shooter, the visual artist can walk around the GMA venue and see how the visuals look.
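Why walking around a virtual stage helps can be illustrated with a small pinhole-camera sketch in Python. This is not the Unity simulator itself: the sine-shaped wall, its dimensions, and the viewer positions are all hypothetical. It only shows that evenly spaced LED columns on a wavy wall spread unevenly on screen, and differently for each viewpoint.

```python
import math


def wall_point(u: float, width: float = 20.0, amplitude: float = 1.5,
               waves: float = 2.0) -> tuple:
    """Map u in [0, 1] along the LED wall to a floor position (x, z) in metres.

    The wall is a hypothetical sine wave: x runs across the stage and
    z is the depth away from the audience.
    """
    x = (u - 0.5) * width
    z = 10.0 + amplitude * math.sin(2.0 * math.pi * waves * u)
    return x, z


def project_x(point: tuple, viewer: tuple, focal: float = 1.0) -> float:
    """Pinhole projection of a floor point onto the viewer's image plane.

    The viewer looks straight down the +z axis; the result is the
    horizontal image coordinate.
    """
    dx = point[0] - viewer[0]
    dz = point[1] - viewer[1]
    if dz <= 0:
        raise ValueError("point is behind the viewer")
    return focal * dx / dz


def apparent_spacing(viewer: tuple, columns: int = 9) -> list:
    """On-screen gaps between evenly spaced LED columns for one viewer."""
    xs = [project_x(wall_point(i / (columns - 1)), viewer)
          for i in range(columns)]
    return [round(b - a, 3) for a, b in zip(xs, xs[1:])]


if __name__ == "__main__":
    print("centre seat:", apparent_spacing(viewer=(0.0, 0.0)))
    print("side seat:  ", apparent_spacing(viewer=(-8.0, 2.0)))
```

The two printed lists differ, so content that looks evenly spaced from one seat can look stretched or compressed from another. A simulator makes this visible before anything is rendered at full resolution.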

Twilight

You Exist In My Song

── Composed of diffused light in the air

Laser Projector + Rolling Shutter

The opening needed a strong visual effect full of digital character that also matched the style of the song. After extensive research and discussions with 3AQUA, we decided to combine two techniques, “Laser Projector” + “Rolling Shutter,” to produce a screen of light that surrounds the performer in space.

The effect combines two principles: the way a laser projector draws graphics, and the rolling shutter.

To avoid misunderstanding, “laser projector” here refers to the kind used for light shows in general performances, since video projectors that use lasers as their light source are also called laser projectors. It works by reflecting a laser beam off mirrors that rotate in different directions to achieve high-speed horizontal and vertical movement. When we see the laser draw a horizontal line, we are actually seeing persistence of vision produced by a point moving left and right at high speed.

As for the rolling shutter, when the image sensor of a digital camera is CMOS, one way to implement the shutter is to control the sensor with electronic signals. The sensor is exposed row by row, which produces a time difference between the top and the bottom of the frame. This type of electronic shutter produces distortion when filming fast-moving objects.

With these two properties and careful design of the camera settings and laser content, we produced an effect that can be seen only through “digital transmission” (that is, through the camera).
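How the two principles interact can be sketched with a toy simulation in Python. All numbers here (the scan frequency, the row readout time, and the sensor size) are hypothetical and were not taken from the production setup. The laser is modelled as a single dot sweeping left and right, and each sensor row records the dot wherever it happens to be at the moment that row is read.

```python
import math


def laser_x(t: float, scan_hz: float, width: int) -> int:
    """Column hit by a laser dot sweeping sinusoidally left and right."""
    phase = math.sin(2.0 * math.pi * scan_hz * t)      # -1 .. 1
    return round((phase + 1.0) / 2.0 * (width - 1))    # 0 .. width - 1


def rolling_shutter_frame(rows: int, width: int, scan_hz: float,
                          row_time: float) -> list:
    """Render one frame in which row r is sampled at time r * row_time.

    To the eye the sweeping dot looks like a horizontal line, but each
    row of a rolling-shutter sensor catches it at a different position.
    """
    frame = []
    for r in range(rows):
        line = ["."] * width
        line[laser_x(r * row_time, scan_hz, width)] = "#"
        frame.append("".join(line))
    return frame


if __name__ == "__main__":
    # Hypothetical values: 500 Hz scan, 100 microseconds per sensor row.
    for line in rolling_shutter_frame(rows=20, width=41, scan_hz=500.0,
                                      row_time=1e-4):
        print(line)
```

Printed out, the `#` marks trace a wave down the frame instead of a single horizontal line. Changing `scan_hz` relative to `row_time` changes the shape, which is one reason an effect like this has to be tuned against the actual camera.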

In addition, without testing before the show, the final rolling shutter effect is completely unpredictable. We are especially grateful to 3AQUA for planning and coordination, to ERA Television for the testing facility and equipment, and to laser consultant 陳逸明 for technical revisions and equipment.

以後別做朋友

── Real-time visual effects using optical flow calculations

Optical Flow

First, we’d like to thank 3AQUA for helping us produce the visuals for two songs, 演員 and 以後別做朋友, when we were in a tight spot, so that we could focus on the laser work and the remaining songs.

For this song, the director had the monitor show the live broadcast of G.E.M. and hoped to layer on effects with the same texture as the background visuals. After studying G.E.M.’s performances, we found that she is very expressive with her body, so imagery driven by motion detection would suit her well. However, the GMA stage hosts numerous performances and its mechanisms are complex, so setting up any kind of sensor would have been a huge burden; we could only process the live video feed directly. We felt that optical flow was the best solution. Because we could not predict the clothing, background, or camera positions at the actual ceremony, we tested in the early stages with footage of other performances from YouTube. Fortunately, the effect worked well during the GMA ceremony.

Using performance segments to test the optical flow effect (Credit: KKBOX)
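As an illustration of the idea, here is a minimal Lucas-Kanade-style optical flow estimate in plain Python. It is a sketch only: the text does not say which optical flow algorithm or library was used, and the synthetic test frames and function names are made up for the example. It estimates a single motion vector for the whole frame from brightness changes alone, with no sensor on the performer.

```python
import math


def make_frame(size: int, shift_x: float = 0.0, shift_y: float = 0.0) -> list:
    """Synthetic smooth grayscale frame, optionally shifted by (shift_x, shift_y)."""
    return [[math.sin(0.2 * (x - shift_x)) + math.cos(0.15 * (y - shift_y))
             for x in range(size)]
            for y in range(size)]


def global_flow(prev: list, curr: list) -> tuple:
    """Estimate one (u, v) motion vector between two frames.

    Solves the Lucas-Kanade least-squares system built from the
    brightness-constancy equation Ix*u + Iy*v + It = 0 over all
    interior pixels.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    height, width = len(prev), len(prev[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0   # spatial gradient in x
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0   # spatial gradient in y
            it = curr[y][x] - prev[y][x]                   # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("not enough texture to estimate motion")
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


if __name__ == "__main__":
    before = make_frame(64)
    after = make_frame(64, shift_x=0.5, shift_y=-0.3)
    u, v = global_flow(before, after)
    print(f"estimated motion: u={u:.2f}, v={v:.2f}")
```

A live effect would more likely compute a dense field, one vector per pixel or block, and use it to drive particles or distortion. The single global vector here keeps the arithmetic visible.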

Love Confession

── Collect huge amounts of data from the Internet

Web Crawler

This is the segment where the change in musical style is most obvious, and at the time of production it was also the song with the most views on YouTube. The director wanted this segment to present the feature these ten songs share: each has over 100 million views. Besides the very direct image of rapidly rising view counts, another important trait of the streaming platform as a new medium is audience participation through comments. In the visual design, we therefore hoped to layer a barrage of comments.
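Collecting comments for such a barrage can be sketched with Python’s standard library alone. Everything specific here is hypothetical: the HTML snippet, the `comment` class name, and the helper names are invented for illustration, and the sketch parses a local string instead of fetching any real site. The text does not describe the crawler that was actually used.

```python
from html.parser import HTMLParser


class CommentExtractor(HTMLParser):
    """Collect the text of every element whose class list includes 'comment'.

    Assumes well-nested markup; unclosed tags inside a comment would
    confuse the depth counter.
    """

    def __init__(self) -> None:
        super().__init__()
        self.comments = []
        self._depth = 0        # > 0 while inside a comment element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self._depth or "comment" in classes:
            self._depth += 1

    def handle_endtag(self, tag):
        if self._depth:
            self._depth -= 1
            if self._depth == 0:
                # Collapse whitespace and store the finished comment.
                text = " ".join("".join(self._buffer).split())
                if text:
                    self.comments.append(text)
                self._buffer = []

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_comments(html: str) -> list:
    """Return the comment texts found in an HTML string."""
    parser = CommentExtractor()
    parser.feed(html)
    parser.close()
    return parser.comments


# Hypothetical page fragment used only for this example.
SAMPLE_PAGE = """
<ul>
  <li class="comment">Still listening in 2019!</li>
  <li class="comment highlighted">This song <b>never</b> gets old</li>
  <li class="ad">Buy tickets now</li>
</ul>
"""

if __name__ == "__main__":
    for comment in extract_comments(SAMPLE_PAGE):
        print(comment)
```

The extracted strings could then be fed to whatever renders the scrolling comment layer.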